The Kelly Coin-Flipping Game: Exact Solutions

Decision-theoretic analysis of how to optimally play the Haghani & Dewey 2016 300-round double-or-nothing coin-flipping game with an edge and ceiling better than using the Kelly Criterion. Computing and following an exact decision tree increases earnings by $6.6 over a modified KC.

2016 experiment with a double-or-nothing coin-flipping game where the player starts with $25 (ie. $34.08$252016) and has an edge of 60%, and can play 300 times, choosing how much to bet each time, winning up to a maximum ceiling of $250. Most of their subjects fail to play well, earning an average $91, compared to the 2016 heuristic benchmark of ~$240 in winnings achievable using a modified Kelly Criterion as their strategy. The KC, however, is not optimal for this problem as it ignores the ceiling and limited number of plays.

We solve the problem of the value of optimal play exactly by using decision trees & dynamic programming for calculating the value function, with implementations in R, Haskell, and C. (See also Problem #14.) We also provide a closed-form exact value formula in R & Python, several approximations using Monte Carlo/random forests/neural networks, visualizations of the value function, and a Python implementation of the game for the OpenAI Gym collection.

We find that optimal play yields $246.61 on average (rather than ~$240), and so the human players actually earned only 36.8% of what was possible, losing $155.6 in potential profit. Comparing decision trees and the Kelly criterion for various horizons (bets left), the relative advantage of the decision tree strategy depends on the horizon: it is highest when the player can make few bets (at b = 23, with a difference of ~$36), and decreases with number of bets as more strategies hit the ceiling.

In the Kelly game, the maximum winnings, number of rounds, and edge are fixed; we describe a more difficult generalized version in which the 3 parameters are drawn from Pareto, normal, and beta distributions and are unknown to the player (who can use Bayesian inference to try to estimate them during play). Upper and lower bounds are estimated on the value of this game. In the variant of this game where subjects are not told the exact edge of 60%, a Bayesian decision tree approach shows that performance can closely approach that of the decision tree, with a penalty for 1 plausible prior of only $1.

Two deep reinforcement learning agents, DQN & DDPG, are implemented but DQN fails to learn and DDPG doesn’t show acceptable performance with default settings, indicating better tuning may be required for them to solve the generalized Kelly game.

The paper “Rational Decision-Making Under Uncertainty: Observed Betting Patterns on a Biased Coin”, by 2016 runs an economics/psychology experiment on optimal betting in a simple coin-flipping game:

What would you do if you were invited to play a game where you were given $34.08$252016 and allowed to place bets for 30 minutes on a coin that you were told was biased to come up heads 60% of the time? This is exactly what we did, gathering 61 young, quantitatively trained men and women to play this game. The results, in a nutshell, were that the majority of these 61 players didn’t place their bets well, displaying a broad panoply of behavioral and cognitive biases. About 30% of the subjects actually went bust, losing their full $34.08$252016 stake. We also discuss optimal betting strategies, valuation of the opportunity to play the game and its similarities to investing in the stock market. The main implication of our study is that people need to be better educated and trained in how to approach decision making under uncertainty. If these quantitatively trained players, playing the simplest game we can think of involving uncertainty and favourable odds, didn’t play well, what hope is there for the rest of us when it comes to playing the biggest and most important game of all: investing our savings? In the words of Ed Thorp, who gave us helpful feedback on our research: “This is a great experiment for many reasons. It ought to become part of the basic education of anyone interested in finance or gambling.”

More specifically:

…Prior to starting the game, participants read a detailed description of the game, which included a clear statement, in bold, indicating that the simulated coin had a 60% chance of coming up heads and a 40% chance of coming up tails. Participants were given $34.08$252016 of starting capital and it was explained in text and verbally that they would be paid, by check, the amount of their ending balance subject to a maximum payout. The maximum payout would be revealed if and when subjects placed a bet that if successful would make their balance greater than or equal to the cap. We set the cap at $340.8$2502016…Participants were told that they could play the game for 30 minutes, and if they accepted the $34.08$252016 stake, they had to remain in the room for that amount of time.5 Participants could place a wager of any amount in their account, in increments of $0.01$0.012016, and they could bet on heads or tails…Assuming a player with agile fingers can put down a bet every 6 seconds, that would allow 300 bets in the 30 minutes of play.

Near-Optimal Play

The authors make a specific suggestion about what near-optimal play in this game would be, based on the Kelly criterion which would yield bets each round of 20% of capital:

The basic idea of the Kelly formula is that a player who wants to maximize the rate of growth of his wealth should bet a constant fraction of his wealth on each flip of the coin, defined by the function (2 × p) − 1, where p is the probability of winning. The formula implicitly assumes the gambler has log utility. It’s intuitive that there should be an optimal fraction to bet; if the player bets a very high fraction, he risks losing so much money on a bad run that he would not be able to recover, and if he bet too little, he would not be making the most of what is a finite opportunity to place bets at favorable odds…We present the Kelly criterion as a useful heuristic a subject could gainfully employ. It may not be the optimal approach for playing the game we presented for several reasons. The Kelly criterion is consistent with the bettor having log-utility of wealth, which is a more tolerant level of risk aversion than most people exhibit. On the other hand, the subjects of our experiment likely did not view $34.08$252016 (or even $340.8$2502016) as the totality of their capital, and so they ought to be less risk averse in their approach to maximizing their harvest from the game. The fact that there is some cap on the amount the subject can win should also modify the optimal strategy…In our game, the Kelly criterion would tell the subject to bet 20% () of his account on heads on each flip. So, the first bet would be $5 (20% of $25) on heads, and if he won, then he’d bet $6 on heads (20% of $30), but if he lost, he’d bet $4 on heads (20% of $20), and so on.

…If the subject rightly assumed we wouldn’t be offering a cap of more than $1,000 per player, then a reasonable heuristic would be to bet a constant proportion of one’s bank using a fraction less than the Kelly criterion, and if and when the cap is discovered, reducing the betting fraction further depending on betting time remaining to glide in safely to the maximum payout. For example, betting 10% or 15% of one’s account may have been a sound starting strategy. We ran simulations on the probability of hitting the cap if the subject bet a fixed proportion of wealth of 10%, 15% and 20%, and stopping when the cap was exceeded with a successful bet. We found there to be a 95% probability that the subjects would reach the $250 cap following any of those constant proportion betting strategies, and so the expected value of the game as it was presented (with the $250 cap) would be just under $240. However, if they bet 5% or 40% of their bank on each flip, the probability of exceeding the cap goes down to about 70%.

This game is interesting as a test case because it is just easy enough to solve exactly on a standard computer in various ways, but also hard enough to defeat naive humans and be nontrivial.

Subjects’ Performance

Despite the Kelly criterion being well-known in finance and fairly intuitive, and the game being very generous, participants did not perform well:

The sample was largely comprised of college age students in economics and finance and young professionals at finance firms. We had 14 analyst and associate level employees at two leading asset management firms. The sample consisted of 49 males and 12 females. Our prior was that these participants should have been well prepared to play a simple game with a defined positive expected value…Only 21% of participants reached the maximum payout of $250,7 well below the 95% that should have reached it given a simple constant percentage betting strategy of anywhere from 10% to 20%.8 We were surprised that one third of the participants wound up with less money in their account than they started with. More astounding still is the fact that 28% of participants went bust and received no payout. That a game of flipping coins with an ex-ante 60:40 winning probability produced so many subjects that lost everything is startling. The average ending bankroll of those who did not reach the maximum and who also did not go bust, which represented 51% of the sample, was $75. While this was a tripling of their initial $25 stake, it still represents a very sub-optimal outcome given the opportunity presented. The average payout across all subjects was $91, letting the authors off the hook relative to the $250 per person they’d have had to pay out had all the subjects played well.

This is troubling because the problem is so well-defined and favorable to the players, and can be seen as a microcosm of the difficulties people experience in rational betting. (While it’s true that human subjects typically perform badly initially in games like the iterated prisoner’s dilemma and need time to learn, it’s also true that humans only have one life to learn stock market investment during, and these subjects all should’ve been well-prepared to play.)

Instead of expected earnings of ~$240, the players earned $91—forfeiting $149. However, if anything, the authors understate the underperformance, because as they correctly note, the Kelly criterion is not guaranteed to be optimal in this problem due to the potential for different utility functions (what if we simply want to maximize expected wealth, not log wealth?), the fixed number of bets & the ceiling, as the Kelly criterion tends to assume that wealth can increase without limit & there is an indefinite time horizon.

Optimality in the Coin-Flipping MDP

Indeed, we can see with a simple example that KC is suboptimal in terms of maximizing expected value: what if we are given only 1 bet (b = 1) to use our $25 on? If we bet 20% (or less) per the KC, then

0.6 × (25 + 5) + 0.4 × (25 − 5) = 26But if we bet everything:

0.6 × (25 + 25) + 0.4 × (25 − 25) = 30It’s true that 40% of the time, we go bankrupt and so we couldn’t play again… but there are no more plays in b = 1 so avoiding bankruptcy boots nothing.

We can treat this coin-flipping game as a tree-structured Markov decision process. For more possible bets, the value of a bet of a particular amount given a wealth w and bets remaining b-1 will recursively depend on the best strategy for the two possible outcomes (weighted by probability), giving us a Bellman value equation to solve like:

To solve this equation, we can explore all possible sequences of bets and outcomes to a termination condition and reward, and then work use backwards induction, defining a decision tree which can be (reasonably) efficiently computed using memoization/dynamic programming.

Given the problem setup, we can note a few things about the optimal strategy:

-

if the wealth ever reaches $0, the game has effectively ended regardless of how many bets remain, because betting $0 is the only possibility and it always returns $0

-

similarly, if the wealth ever reaches the upper bound of $250, the optimal strategy will effectively end the game by always betting $0 after that regardless of how many bets remain, since it can’t do better than $250 and can only do worse.

These two shortcuts will make the tree much easier to evaluate because many possible sequences of bet amounts & outcomes will quickly hit $0 or $250 and require no further exploration.

-

a state with more bets is always of equal or better value than fewer

-

a state with more wealth is always equal or better value than less

-

the value of 0 bets is the current wealth

-

the value of 1 bet depends on the ceiling and current wealth: whether $250 is >2× current wealth. If the ceiling more than twice current wealth, the optimal strategy with 1 bet left is to bet everything, since that has highest EV and there’s no more bets to worry about going bankrupt & missing out on.

Implementation of Game

A Python implementation (useful for applying the many reinforcement learning agent implementations which are Gym-compatible) is available in OpenAI Gym:

import gym

env = gym.make('KellyCoinflip-v0')

env.reset()

env._step(env.wealth*100*0.2) # bet with 20% KC

# ...

env._reset() # end of game, start a new one, etcDecision Tree

We can write down the value function as a mutual recursion: f calculates the expected value of the current step, and calls V to estimate the value of future steps; V checks for the termination conditions w=$0/$250 & b = 0 returning current wealth as the final payoff, and if not, calls f on every possible action to estimate their value and returns the max… This mutual recursion bottoms out at the termination conditions. As defined, this is grossly inefficient as every node in the decision tree will be recalculated many times despite yielding identical deterministic, referentially transparent results. So we need to memoize results if we want to evaluate much beyond b = 5.

Approximate Value Function

Implemented in R:

# devtools::install_github("hadley/memoise")

library(memoise)

f <- function(x, w, b) { 0.6*mV(min(w+x,250), b-1) + 0.4*mV(w-x, b-1)}

mf <- memoise(f)

V <- function(w,b) {

returns <- if (b>0 && w>0 && w<250) { sapply(seq(0, w, by=0.1), function(x) { mf(x,w,b) }) } else { w }

max(returns) }

mV <- memoise(V)

## sanity checks:

mV(25, 0) == 25 && mV(25, 1) == 30 && mV(0, 300) == 0Given our memoized value function mV we can now take a look at expected value of optimal betting for bets b 0–300 with the fixed starting payroll of $25. Memoization is no panacea, and R/memoise is slow enough that I am forced to make one change to the betting: instead of allowing bets in penny/$0.01 increments, I approximate by only allowing bets in $1 increments, to reduce the branching factor to a maximum of 250 possible choices each round rather than 25000 choices. (Some comparisons with decipenny and penny trees suggests that this makes little difference since early on the bet amounts are identical and later on being able to make <$1 adjustments to bets yield small gains relative to total wealth; see the Haskell implementation.)

Of course, even with memoization and reducing the branching factor, it’s still difficult to compute the value of the optimal strategy all the way to b = 300 because the exponentially increasing number of strategies still need to be computed at least once. With this R implementation, trees for up to b = 150 can be computed with 4GB RAM & ~16h.

The value function for increasing bets:

vs <- sapply(1:150, function(b) { round(mV(25, b), digits=1) }); vs

# [1] 30.0 36.0 43.2 45.4 48.0 53.7 54.6 57.6 61.8 62.6 66.8 68.2 70.6 74.1 75.0 79.1 79.7 82.5 84.7 86.2 89.8 90.3 93.8

# [24] 94.5 97.0 98.8 100.3 103.2 103.9 107.2 107.6 110.3 111.4 113.3 115.2 116.4 119.0 119.6 122.4 122.9 125.3 126.2 128.1 129.5 130.8 132.8

# [47] 133.6 136.0 136.5 138.8 139.4 141.4 142.3 143.8 145.2 146.3 148.0 148.8 150.6 151.3 153.1 153.8 155.5 156.3 157.7 158.8 159.9 161.2 162.1

# [70] 163.6 164.2 165.8 166.4 167.9 168.5 169.9 170.7 171.8 172.8 173.7 174.8 175.6 176.8 177.5 178.7 179.3 180.5 181.1 182.3 182.9 184.0 184.7

# [93] 185.6 186.4 187.2 188.1 188.8 189.8 190.3 191.3 191.9 192.8 193.4 194.3 194.9 195.7 196.3 197.1 197.8 198.4 199.1 199.7 200.5 201.0 201.8

# [116] 202.3 203.0 203.5 204.3 204.8 205.4 206.0 206.6 207.1 207.7 208.3 208.8 209.4 209.9 210.5 210.9 211.5 211.9 212.5 213.0 213.5 213.9 214.5

# [139] 214.9 215.4 215.8 216.3 216.8 217.2 217.6 218.0 218.5 218.9 219.3 219.7So as the number of bets escalates, our expected payoff increases fairly quickly and we can get very close to the ceiling of $250 with canny betting.

Monte Carlo Tree Evaluation

If we do not have enough RAM to expand the full decision tree to its terminal nodes, there are many ways to approximate it. A simple one is to expand the tree as many levels far down as possible, and then if a terminal node is reached, do exact backwards induction, otherwise, at each pseudo-terminal node, approximate the value function somehow and then do backwards induction as usual. The deeper the depth, the closer the approximation becomes to the exact value, while still doing optimal planning within the horizon.

One way to approximate it would be to run a large number of simulations (perhaps 100) taking random actions until a terminal node is hit, and take the mean of the total values as the estimate. This would be a Monte Carlo tree evaluation. (This forms the conceptual basis of the famous MCTS/Monte Carlo tree search.) A random policy is handy because it can be used anywhere, but here we already know a good heuristic which does better than random: the KC. So we can use that instead. This gives us as much optimality as we can afford.

Setting up a simulation of the coin-flipping game which can be played with various strategies:

game <- function(strategy, wealth, betsLeft) {

if (betsLeft>0) {

bet <- strategy(wealth, betsLeft)

wealth <- wealth - bet

flip <- rbinom(1,1,p=0.6)

winnings <- 2*bet*flip

wealth <- min(wealth+winnings, 250)

return(game(strategy, wealth, betsLeft-1)) } else { return(wealth); } }

simulateGame <- function(s, w=25, b=300, iters=100) { mean(replicate(iters, game(s, w, b))) }

kelly <- function(w, b) { 0.20 * w }

smarterKelly <- function(w, b) { if(w==250) {0} else { (2*0.6-1) * w } }

random <- function(w, b) { sample(seq(0, w, by=0.1), 1) }

evaluate <- function(w,b) { simulateGame(smarterKelly, w, b) }

mevaluate <- memoise(evaluate)

mevaluate(25, 10)

# [1] 35.30544906

mevaluate(25, 100)

# [1] 159.385275

mevaluate(25, 300)

# [1] 231.4763619As expected the KC can do well and is just as fast to compute as a random action, so using it will give much better estimates of the value function for free.

With the Monte Carlo value function set up, the original value function can be slightly modified to include a maximum depth parameter and to evaluate using the MC value function instead once that maximum depth is hit:

f <- function(x, w, b, maxDepth) { 0.6*mV(min(w+x,250), b-1, maxDepth) + 0.4*mV(w-x, b-1, maxDepth)}

mf <- memoise(f)

V <- function(w,b, maxDepth) {

if (b<=(b-maxDepth)) { mevaluate(w,b) } else {

returns <- if (b>0 && w>0 && w<250) { sapply(seq(0, w, by=0.1), function(x) { mf(x,w,b, maxDepth) }) } else { w }

max(returns) }}

mV <- memoise(V)

mV(25, 300, 100); mV(25, 300, 50)Optimal next Action

One is also curious what the optimal strategy is, not just how much we can make with the optimal strategy given a particular starting point. As defined, I can’t see how to make mV return the optimal choices along the way, since it has to explore all choices bottom-up and can’t know what is optimal until it has popped all the way back to the root, and state can’t be threaded in because that defeats the memoization.

But then I remembered the point of computing value functions: given the (memoized) value function for each state, we can then plan by simply using V in a greedy planning algorithm by asking, at each step, for the value of each possible action (which is memoized, so it’s fast), and returning/choosing the action with the maximum value (which takes into account all the downstream effects, including our use of V at each future choice):

VPplan <- function(w, b) {

if (b==0) { return (0); } else {

returns <- sapply(seq(0, w), function(wp) { mf(wp, w, b); })

return (which.max(returns)-1); }

}

mVPplan <- memoise(VPplan)

## sanity checks:

mVPplan(250, 0)

# [1] 0

mVPplan(250, 1)

# [1] 0

mVPplan(25, 3)

# [1] 25It’s interesting that when b is very small, we want to bet everything on our first bet, because there’s not enough time to bother with recovering from losses; but as we increase b, the first bet will shrink & become more conservative:

firstAction <- sapply(1:150, function(b) { mVPplan(25, b) }); firstAction

# [1] 25 25 25 10 10 18 17 18 14 14 14 9 10 11 7 11 7 10 10 10 9 6 9 7 9 8 9 8 7 8 7 8 7 8 7 8 7 7 7 7 7 6 7 6 7 6 7

# [48] 6 6 6 6 6 6 6 6 6 6 6 6 5 6 5 6 5 6 5 5 5 5 5 5 5 5 5 5 5 5 5 5 5 5 5 5 5 5 5 5 4 5 4 5 4 5 4

# [95] 5 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 3

# [142] 4 3 4 3 4 3 3 3 3Optimizing

The foregoing is interesting but the R implementation is too slow to examine the case of most importance: b = 300. The issue is not so much the intrinsic difficulty of the problem—since the R implementation’s RAM usage is moderate even at b = 150, indicating that the boundary conditions do indeed tame the exponential growth & turn it into something more like quadratic growth—but the slowness of computing. Profiling suggests that most of the time is spent inside memoise:

# redefine to clear out any existing caching:

forget(mV)

Rprof(memory.profiling=TRUE, gc.profiling=TRUE, line.profiling=TRUE)

mV(w=25, b=9)

Rprof(NULL)

summaryRprof()Most of the time is spent in functions which appear nowhere in our R code, like lapply or deparse, which points to the behind-the-scenes memoise. Memoization should be fast because the decision tree can be represented as nothing but a multidimensional array in which one does lookups for each combination of w/b for a stored value V, and array lookups ought to be near-instantaneous. But apparently there is enough overhead to dominate our decision tree’s computation.

R does not provide native hash tables; what one can do is use R’s “environments” (which use hash tables under the hood), but are restricted to string keys (yes, really) as a poor man’s hash table by using digest to serialize & hash arbitrary R objects into string keys which can then be inserted into an ‘environment’. (I have since learned that there is a hashmap library which is a wrapper around a C++ hashmap implementation which should be usable: Nathan Russell’s hashmap.) The backwards induction remains the same and the hash-table can be updated incrementally without any problem (useful for switching to alternative methods like MCTS), so the rewrite is easy:

## crude hash table

library(digest)

## digest's default hash is MD5, which is unnecessarily slower, so use a faster hash:

d <- function (o) { digest(o, algo="xxhash32") }

lookup <- function(key) { get(d(key), tree) }

set <- function(key,value) { assign(envir=tree, d(key), value) }

isSet <- function(key) { exists(d(key), envir=tree) }

## example:

tree <- new.env(size=1000000, parent=emptyenv())

set(c(25,300), 50); lookup(c(25, 300))

# [1] 50

tree <- new.env(size=1000000, parent=emptyenv())

f <- function(x, w, b) {

0.6*V(min(w+x,250), b-1) + 0.4*V(w-x, b-1) }

V <- function(w, b) {

if (isSet(c(w,b))) { return(lookup(c(w,b))) } else {

returns <- if (b>0 && w>0 && w<250) { sapply(seq(0, w, by=0.1), function(x) { f(x,w,b) }) } else { return(w) }

set(c(w,b), max(returns))

return(lookup(c(w,b)))

}

}

V(25,5)While the overhead is not as bad as memoise, performance is still not great.

Python

Alternative naive & memorized implementations in Python.

Unmemoized version (a little different due to floating point):

import numpy as np # for enumerating bet amounts as a float range

def F(w, x, b):

return 0.6*V(min(w+x,250), b-1) + 0.4*V(w-x, b-1)

def V(w, b):

if b>0 and w>0 and w<250:

bets = np.arange(0, w, 0.1).tolist()

returns = [F(w,x,b) for x in bets]

else:

returns = [w]

return max(returns)

V(25,0)

V(25,0)

# 25

V(25,1)

# 29.98

V(25,2)

# 35.967999999999996

V(25,3)Python has hash-tables built in as dicts, so one can use those:

import numpy as np

def F(w, x, b, dt):

return 0.6*V(min(w+x,250), b-1, dt) + 0.4*V(w-x, b-1, dt)

def V(w, b, dt):

if b>0 and w>0 and w<250:

if (w,b) in dict:

return dict[(w,b)]

else:

bets = np.arange(0, w, 0.1).tolist()

returns = [F(w,x,b,dt) for x in bets]

else:

returns = [w]

best = max(returns)

dict[(w,b)] = best

return best

dict = {}

V(25, 0, dict)

# 25Haskell

Gurkenglas provides 2 inscrutably elegant & fast versions of the value function which works in GHCi:

(!!25) $ (!!300) $ (`iterate` [0..250]) $ \nextutilities -> (0:) $ (++[250]) $

tail $ init $ map maximum $ (zipWith . zipWith) (\up down -> 0.6 * up + 0.4 * down)

(tail $ tails nextutilities) (map reverse $ inits nextutilities)

-- 246.2080494949234

-- it :: (Enum a, Fractional a, Ord a) => a

-- (7.28 secs, 9,025,766,704 bytes)

:module + Control.Applicative

(!!25) $ (!!300) $ (`iterate` [0..250]) $ map maximum . liftA2 ((zipWith . zipWith)

(\up down -> 0.6 * up + 0.4 * down)) tails (map reverse . tail . inits)

- 246.2080494949234

-- it :: (Enum a, Ord a, Fractional a) => a

-- (8.35 secs, 6,904,047,568 bytes)Noting:

iterate f x = x : iterate f (f x)doesn’t need to think much about memoization. From right to left, the line reads “Given how much each money amount is worth when n games are left, you can zip together that list’s prefixes and suffixes to get a list of options for each money amount at (n+1) games left. Use the best for each, computing the new utility as a weighted average of the previous. At 0 and 250 we have no options, so we must manually prescribe utilities of 0 and 250. Inductively use this to compute utility at each time from knowing that at the end, money is worth itself. Look at the utilities at 300 games left. Look at the utility of $25.” Inductively generating that whole list prescribes laziness.

Since it’s Haskell, we can switch to compiled code and use fast arrays.

Implementations in Haskell have been written by Gurkenglas & nshepperd using array-memoize & Data.Vector (respectively):

import System.Environment (getArgs)

import Data.Function (iterate)

import Data.Function.ArrayMemoize (arrayMemo)

type Wealth = Int

type EV = Double

cap :: Wealth

cap = 250

value :: [Wealth -> EV]

value = iterate f fromIntegral where

f next = arrayMemo (0, cap) $ \x -> maximum [go w | w <- [0..min (cap - x) x]] where

go w = 0.6 * next (min cap (x+w)) + 0.4 * next (x-w)

main :: IO ()

main = do [x, b] <- map read <$> getArgs

print $ value !! b $ xThe second one is more low-level and uses laziness/“tying the knot” to implement the memoization; it is the fastest and is able to evaluate memo 25 300 in <12s: the value turns out to be $246. (If we assume that all games either end in $250 or $0, then this implies that 246⁄250 = 0.984 or >98.4% of decision-tree games hit the max, as compared to Haghani & Dewey’s estimate that ~95% of KC players would.) The memoization is done internally, so to access all values we do more logic within the lexical scope.

import System.Environment (getArgs)

import Data.Function (fix)

import Data.Function.Memoize (memoFix2)

import Data.Vector (Vector)

import qualified Data.Vector as V (generate, (!))

import qualified Data.MemoUgly as U (memo)

type Wealth = Int

type Bets = Int

type EV = Double

cap :: Wealth

cap = 250

value :: (Wealth -> Bets -> EV) -> Wealth -> Bets -> EV

value next 0 b = 0

value next w 0 = fromIntegral w

value next w b = maximum [go x | x <- [0..min (cap - w) w]]

where

go x = 0.6 * next (min cap (w+x)) (b-1) + 0.4 * next (w-x) (b-1)

-- There are 4 possible ways aside from 'array-memoize':

-- 1. Direct recursion.

direct :: Wealth -> Bets -> EV

direct = fix value

-- 2. Memoized recursion.

memo :: Wealth -> Bets -> EV

memo w b = cached_value w b

where

cached_value w b = table V.! b V.! w

table :: Vector (Vector EV)

table = V.generate (b + 1)

(\b -> V.generate (cap + 1)

(\w -> value cached_value w b))

-- 3. Memoized recursion using 'memoize' library; slower.

memo2 :: Wealth -> Bets -> EV

memo2 = memoFix2 value

-- 4. Memoize using 'uglymemo' library; also slower but global

memo3 :: Wealth -> Bets -> EV

memo3 = U.memo . direct

main :: IO ()

main = do [w, b] <- map read <$> getArgs

print (memo w b)We can obtain all values with a function like

memo3 :: [Wealth] -> [Bets] -> [EV]

memo3 ws bs = zipWith cached_value ws bs

where

cached_value w b = table V.! b V.! w

table :: Vector (Vector EV)

table = V.generate (maximum bs + 1)

(\b -> V.generate (cap + 1)

(\w -> value cached_value w b))and evaluate:

λ> map round (memo3 (repeat 25) [1..300])

-- [30,36,43,45,48,54,55,58,62,63,67,68,71,74,75,79,80,82,85,86,90,90,94,94,97,99,100,

-- 103,104,107,108,110,111,113,115,116,119,120,122,123,125,126,128,130,131,133,134,

-- 136,136,139,139,141,142,144,145,146,148,149,151,151,153,154,155,156,158,159,160,

-- 161,162,164,164,166,166,168,169,170,171,172,173,174,175,176,177,177,179,179,181,

-- 181,182,183,184,185,186,186,187,188,189,190,190,191,192,193,193,194,195,196,196,

-- 197,198,198,199,200,200,201,202,202,203,204,204,205,205,206,207,207,208,208,209,

-- 209,210,210,211,212,212,213,213,214,214,214,215,215,216,216,217,217,218,218,219,

-- 219,219,220,220,221,221,221,222,222,222,223,223,224,224,224,225,225,225,226,226,

-- 226,227,227,227,228,228,228,228,229,229,229,230,230,230,230,231,231,231,231,232,

-- 232,232,232,233,233,233,233,234,234,234,234,234,235,235,235,235,236,236,236,236,

-- 236,236,237,237,237,237,237,238,238,238,238,238,238,239,239,239,239,239,239,239,

-- 240,240,240,240,240,240,240,241,241,241,241,241,241,241,241,242,242,242,242,242,

-- 242,242,242,242,243,243,243,243,243,243,243,243,243,243,244,244,244,244,244,244,

-- 244,244,244,244,244,244,245,245,245,245,245,245,245,245,245,245,245,245,245,245,

-- 246,246,246,246,246,246,246,246,246,246,246,246,246]Exact Value Function

We could go even further with zip [1..3000] (memo3 (repeat 25) [1..3000]) (which takes ~118s). Interestingly, the value stops increasing around b = 2,150 (V = 249.99009946798637) and simply repeats from there; it does not actually reach 250.

This is probably because with a stake of $25 it is possible (albeit extremely unlikely) to go bankrupt (gambler’s ruin) even betting the minimal fixed amount of $1. (The original Haskell code is modeled after the R, which discretized this way for tractability; however, since the Haskell code is so fast, this is now unnecessary.) If this is the case, then being able to bet smaller amounts should allow expected value to converge to $250 as exactly as can be computed, because the probability of going bankrupt after 2500 bets of $0.01 each is effectively zero—as far as R and Haskell will compute without special measures, 0.42500 = 0. We can go back and model the problem exactly as it was in the paper by simply multiply everything by 100 & interpreting in pennies:

cap = 25000 -- 250*100

-- ...

λ> zip [1..3000] (memo3 (repeat (25*100)) [1..3000])

[(1,3000.0),(2,3600.0),(3,4320.0),(4,4536.000000000002),(5,4795.200000000003),

(6,5365.440000000002),(7,5458.752000000004),(8,5757.350400000005),(9,6182.853120000005),(10,6260.488704000007),

(11,6676.137676800007),(12,6822.331453440009),(13,7060.11133132801),(14,7413.298296422411),(15,7499.673019514893),

(16,7913.219095166989),(17,7974.777338678492),(18,8250.07680018581),(19,8471.445742115113),(20,8625.387566014524),

(21,8979.7747405993),(22,9028.029532170909),(23,9384.523360172316),(24,9449.401095461177),(25,9699.670042282964),

(26,9882.821122181414),(27,10038.471393315525),(28,10323.078038637072),(29,10394.862038743217),(30,10760.507349554608),

(31,10763.85850433795),(32,11040.414570571116),(33,11141.364854687306),(34,11337.607662912955),(35,11524.013477359924),

(36,11648.117264906417),(37,11909.035644688667),(38,11968.56153182668),(39,12294.156320547045),(40,12296.062638839443),

(41,12554.172237535688),(42,12628.174503649356),(43,12820.701630209007),(44,12962.820770637838),(45,13095.979243218648),

(46,13298.241837094192),(47,13377.799870378874),(48,13632.949691989288),(49,13664.245374230446),(50,13935.591599024072),

(51,13953.650302164471),(52,14168.318904539414),(53,14244.57145315508),(54,14407.574739209653),(55,14535.761032800987),

(56,14651.76990919319),(57,14826.143029982914),(58,14899.500923102909),(59,15114.7924517843),(60,15149.530755496799),

(61,15399.983335449066),(62,15400.771450460255),(63,15604.908915203496),(64,15652.268440952908),(65,15813.476931576255),

(66,15903.186446379666),(67,16025.159151461572),(68,16152.79680966944),(69,16238.976728976708),(70,16400.466139297678),

(71,16454.05880056233),(72,16645.646128447275),(73,16669.63235112316),(74,16880.735724497223),(75,16885.012958972715),

(76,17058.916142797243),(77,17099.596358241968),(78,17239.339510457277),(79,17312.850756552616),(80,17421.275332110683),

(81,17524.309847338853),(82,17604.068867939182),(83,17733.56645905442),(84,17787.13463287746),(85,17940.266786525535),

(86,17969.95038247786),(87,18144.105153480315),(88,18152.051576292564),(89,18315.336828409476),(90,18333.026286703964),

(91,18467.997509430665),(92,18512.510521892633),(93,18621.43451689773),(94,18690.183932686265),(95,18775.180977557586),

(96,18865.765874427907),(97,18928.817685066606),(98,19039.0117965317),(99,19081.969208988696),(100,19209.70993405155),

(101,19234.300290845116),(102,19377.678277281033),(103,19385.51250899971),(104,19524.210291319585),(105,19535.341194745335),

(106,19651.63659936918),(107,19683.55258264968),(108,19779.19175398216),(109,19829.94117896368),(110,19906.559349360658),

(111,19974.327332744302),(112,20033.4549154762),(113,20116.554995203987),(114,20159.623419373274),(115,20256.489653646044),

(116,20284.836941737507),(117,20394.01642719967),(118,20408.892517902153),(119,20529.038285622184),(120,20531.61013289084),

(121,20639.865300258658),(122,20652.830860566657),(123,20744.14900653099),(124,20772.415137446053),(125,20848.093322557295),

(126,20890.24116222975),(127,20951.49506668366),(128,21006.203412595118),(129,21054.171837601196),(130,21120.21127127963),

(131,21155.960430614512),(132,21232.187753960352),(133,21256.71536121999),(134,21342.06833189189),(135,21356.30748944987),

(136,21449.7998427048),(137,21454.622738748447),(138,21545.893033861896),(139,21551.56090345761),(140,21629.60553083633),

(141,21647.034539300425),(142,21712.842497605183),(143,21740.96793155338),(144,21795.467123757197),(145,21833.2961358927),

(146,21877.356736609778),(147,21923.964087187007),(148,21958.401746908596),(149,22012.92577178374),(150,22038.504663866137),

(151,22100.143459100647),(152,22117.579175397976),(153,22185.586988586107),(154,22195.549289624447),(155,22269.23310835155),

(156,22272.348533907876),(157,22343.200726693787),(158,22347.9192078922),(159,22408.92268721723),(160,22422.211687202966),

(161,22474.09110290414),(162,22495.183774650206),(163,22538.619509420547),(164,22566.800095953415),(165,22602.430698828903),

(166,22637.031537177958),(167,22665.456036220217),(168,22705.854721234122),(169,22727.634820414445),(170,22773.2515209447),

(171,22788.913686133077),(172,22839.208606334214),(173,22849.246045182404),(174,22903.71702393249),(175,22908.591564315902),

(176,22966.44249475668),(177,22966.915677569094),(178,23017.91541566918),(179,23024.18913098028),(180,23068.1913376925),

(181,23080.387557725626),(182,23117.90218718125),(183,23135.491081806776),(184,23166.99717629234),(185,23189.48394853381),

(186,23215.431334191013),(187,23242.35418014611),(188,23263.165077204263),(189,23294.093255008785),(190,23310.163806371635),

(191,23344.695808912184),(192,23356.397530793947),(193,23394.159357087876),(194,23401.840515265318),(195,23442.484035635396),

(196,23446.47095075504),(197,23489.640293583914),(198,23490.270646383346),(199,23529.46834312825),(200,23533.224741609163),

(201,23567.312697440484),(202,23575.321437418483),(203,23604.666095269786),(204,23616.551745369172),(205,23641.497567737664),

(206,23656.90925341186),(207,23677.779921209272),(208,23696.38990746728),(209,23713.489458166325),(210,23734.991807798215),

(211,23748.60571568506),(212,23772.71501926872),(213,23783.111220502426),(214,23809.56139463541),(215,23816.991259707916),

(216,23845.534410064567),(217,23850.233666149958),(218,23880.639012115425),(219,23882.82861769509),(220,23914.391375329913),

(221,23914.76844952492),(222,23942.714790600083),(223,23946.0474787004),(224,23970.438187949254),(225,23976.66184026535),

(226,23997.74934793851),(227,24006.60933420138),(228,24024.631040582935),(229,24035.889282584474),(230,24051.068374275732),

(231,24064.50239633),(232,24077.048621078735),(233,24092.450650947027),(234,24102.561052984205),(235,24119.737170755707),

(236,24127.59678851838),(237,24146.366121052237),(238,24152.148649090945),(239,24172.342607735227),(240,24176.211024526496),

(241,24197.672583935127),(242,24199.779747244538),(243,24222.35471729034),(244,24222.851974583486),(245,24243.93438158678),

(246,24245.42607879143),(247,24263.972996501543),(248,24267.50154423283),(249,24283.6900658947),(250,24289.078871384656),

(251,24303.07565677869),(252,24310.159487219513),(253,24322.121319078917),(254,24330.74566159496),(255,24340.819980440137),

(256,24350.840429290023),(257,24359.165841053073),(258,24370.447517349417),(259,24377.154275094566),(260,24389.571277415347),

(261,24394.781738403053),(262,24408.216622744527),(263,24412.045682031203),(264,24426.388969625325),(265,24428.94447133704),

(266,24444.09418292577),(267,24445.477310292874),(268,24461.32346887292),(269,24461.644170708976),(270,24476.42136455785),

(271,24477.445726085083),(272,24490.501181704694),(273,24492.883289818776),(274,24504.335944986862),(275,24507.958757514363),

(276,24517.920620087607),(277,24522.6745531501),(278,24531.251041416166),(279,24537.03357887482),(280,24544.32387659046),

(281,24551.039168217816),(282,24557.136561611253),(283,24564.69504250772),(284,24569.687240115927),(285,24578.005270307505),

(286,24581.974706479406),(287,24590.97422968356),(288,24593.998352542163),(289,24603.606573136858),(290,24605.75811775705),

(291,24615.907195034095),(292,24617.254442557904),(293,24627.881197714756),(294,24628.488224763427),(295,24639.274079225856),

(296,24639.46077884001),(297,24649.128469095107),(298,24650.173797856685),(299,24658.72751316172),(300,24660.629317974468)

...

(1884,24999.999999999614),(1885,24999.99999999963),(1886,24999.999999999643),(1887,24999.999999999658),(1888,24999.99999999967),

(1889,24999.99999999968),(1890,24999.999999999694),(1891,24999.9999999997),(1892,24999.999999999716),(1893,24999.999999999727),

(1894,24999.999999999738),(1895,24999.99999999975),(1896,24999.99999999976),(1897,24999.999999999767),(1898,24999.99999999978),

(1899,24999.99999999979),(1900,24999.9999999998),(1901,24999.99999999981),(1902,24999.999999999818),(1903,24999.999999999825),

(1904,24999.999999999833),(1905,24999.999999999844),(1906,24999.999999999854),(1907,24999.99999999986),(1908,24999.99999999987),

(1909,24999.999999999876),(1910,24999.999999999884),(1911,24999.99999999989),(1912,24999.999999999898),(1913,24999.999999999905),

(1914,24999.999999999913),(1915,24999.99999999992),(1916,24999.999999999924),(1917,24999.999999999927),(1918,24999.999999999935),

(1919,24999.99999999994),(1920,24999.99999999995),(1921,24999.99999999995),(1922,24999.999999999956),(1923,24999.999999999964),

(1924,24999.999999999967),(1925,24999.99999999997),(1926,24999.999999999978),(1927,24999.999999999978),(1928,24999.999999999985),

(1929,24999.99999999999),(1930,24999.999999999993),(1931,24999.999999999993),(1932,25000.0),(1933,25000.0),

(1934,25000.0),(1935,25000.0),(1936,25000.0),(1937,25000.0),(1938,25000.0),

(1939,25000.0),(1940,25000.0),(1941,25000.0),(1942,25000.0),(1943,25000.0),

(1944,25000.0),(1945,25000.0),(1946,25000.0),(1947,25000.0),(1948,25000.0),

(1949,25000) ... ]With the exact penny version, the value of b = 300 is now $246.6062932 (taking ~3746s compiled), so the approximation costs an expected value of only $0.61. The full run reveals that convergence happens at b = 193293ya (which can be computed in ~48503s compiled), past which there is no need to compute further.

C/C++

FeepingCreature provides a vectorized C implementation of the exact penny game that runs in a few minutes (benefiting ~13% from profile-guided optimization when compiled with GCC).

He also notes that “top-down” memoization/dynamic programming is overkill for this problem: because almost all states ultimately get evaluated, the dynamic programming doesn’t avoid evaluating many states. Since each state depends on an immediate successor and forms a clean tree, it is possible to calculate the tree much more directly “bottom-up” by evaluating all the terminal nodes b = 0, looping over the previous nodes b+1, then throwing away the no longer necessary b, and evaluating b+2, and so on; only two layers of the tree need to be stored at any time, which can potentially fit into on-processor cache & have better cache locality. Another advantage of this representation is parallelism, as each node in the upper layer can be evaluated independently.

An implementation using OpenMP for threading can solve b = 300 in ~8s:

#include <stdlib.h>

#include <stdio.h>

#include <stdbool.h>

#include <math.h>

#define ROUND_LIMIT 300

#define MONEY_LIMIT 25000

#define MONEY_START 2500

#define MONEY_FACTOR 100.0f

// #define DATATYPE float

// #define F(X) X ## f

#define DATATYPE double

#define F(X) X

#define THREADS 8

// MEMOIZED METHOD

typedef struct {

bool known;

DATATYPE ev;

} CacheEntry;

static DATATYPE decide(CacheEntry * const table, int money, int round) {

if (round < 0) return money;

if (money == 0 || money == MONEY_LIMIT) return money;

int index = round * (MONEY_LIMIT + 1) + money;

// int index = money * ROUND_LIMIT + round;

if (table[index].known) return table[index].ev;

DATATYPE best_bet_score = -1;

for (int bet = 0; bet <= money; bet++) {

DATATYPE winres = decide(table, (money + bet > MONEY_LIMIT) ? MONEY_LIMIT : (money + bet), round - 1);

DATATYPE loseres = decide(table, money - bet, round - 1);

DATATYPE combined = F(0.6) * winres + F(0.4) * loseres;

best_bet_score = F(fmax)(best_bet_score, combined);

}

table[index] = (CacheEntry) { true, best_bet_score };

return best_bet_score;

}

void method1() {

CacheEntry *table = calloc(sizeof(CacheEntry), ROUND_LIMIT * (MONEY_LIMIT + 1));

printf("running.\n");

int best_bet = 0;

DATATYPE best_bet_score = -1;

for (int bet = 0; bet <= MONEY_START; bet++) {

DATATYPE winres = decide(table, MONEY_START + bet, ROUND_LIMIT - 1);

DATATYPE loseres = decide(table, MONEY_START - bet, ROUND_LIMIT - 1);

DATATYPE combined = F(0.6) * winres + F(0.4) * loseres;

if (combined > best_bet_score) {

best_bet = bet;

best_bet_score = combined;

}

}

printf("first round: bet %f for expected total reward of %f\n", best_bet / MONEY_FACTOR, best_bet_score / MONEY_FACTOR);

int count_known = 0;

for (int i = 0; i < ROUND_LIMIT * (MONEY_LIMIT + 1); i++) {

if (table[i].known) count_known ++;

}

printf("known: %i of %i\n", count_known, ROUND_LIMIT * (MONEY_LIMIT + 1));

}

// BOTTOM-UP METHOD

// given pass n in "from", compute pass n-1 in "to"

static void propagate(DATATYPE *from, DATATYPE *to) {

// for each money state...

#pragma omp parallel for num_threads(THREADS)

for (int a = 0; a < THREADS; a++) {

// distribute workload so that inner loop passes are approximately equal

// f(x) = 2x F(x) = x^2 = a/THREADS x = sqrt(a / THREADS)

int low = (int) (MONEY_LIMIT * sqrtf((float)a / THREADS));

int high = (int) (MONEY_LIMIT * sqrtf((a + 1.0f) / THREADS));

if (a == THREADS - 1) high = MONEY_LIMIT + 1; // precise upper border

for (int m = low; m < high; m++) {

DATATYPE max_score = 0.0;

// determine the bet that maximizes our expected earnings

// to enable vectorization we break the loop into two so that each half only gets one branch of the conditional

// DATATYPE winval = from[(m + b > MONEY_LIMIT) ? MONEY_LIMIT : (m + b)];

int high_inner = (m + m > MONEY_LIMIT) ? (MONEY_LIMIT - m) : m;

for (int b = 0; b <= high_inner; b++) {

DATATYPE winval = from[m + b];

DATATYPE loseval = from[m - b];

DATATYPE combined = F(0.6) * winval + F(0.4) * loseval;

max_score = F(fmax)(max_score, combined);

}

for (int b = high_inner + 1; b <= m; b++) {

DATATYPE winval = from[MONEY_LIMIT];

DATATYPE loseval = from[m - b];

DATATYPE combined = F(0.6) * winval + F(0.4) * loseval;

max_score = F(fmax)(max_score, combined);

}

to[m] = max_score;

}

}

}

void method2() {

DATATYPE *buf = malloc(sizeof(DATATYPE) * (MONEY_LIMIT + 1));

DATATYPE *buf_to = malloc(sizeof(DATATYPE) * (MONEY_LIMIT + 1));

// set up base case: making no bet, we have money of i

for (int i = 0; i <= MONEY_LIMIT; i++) buf[i] = i;

for (int i = ROUND_LIMIT - 1; i >= 0; i--) {

propagate(buf, buf_to);

DATATYPE *temp = buf;

buf = buf_to;

buf_to = temp;

}

int best_bet = 0;

DATATYPE best_bet_score = -1;

for (int b = 0; b <= MONEY_START; b++) {

DATATYPE winval = buf[MONEY_START + b];

DATATYPE loseval = buf[MONEY_START - b];

DATATYPE combined = 0.6 * winval + 0.4 * loseval;

if (combined > best_bet_score) {

best_bet_score = combined;

best_bet = b;

}

}

printf("first round: bet %f for expected total reward of %f\n", best_bet / MONEY_FACTOR, best_bet_score / MONEY_FACTOR);

}

int main() {

method2();

return 0;

}Some quick benchmarking on my 2016 Lenovo ThinkPad P70 laptop with 4 physical cores & 8 virtual cores (Intel Xeon E3-1505M v5 Processor 8MB Cache / up to 3.70GHz) shows a roughly linear speedup 1 → 3 threads and then small gains thereafter to a minimum of 7.2s with 8 threads:

for C in `ls coingame-*.c`; do

gcc -Wall -Ofast -fwhole-program -march=native -fopenmp $C -o coingame -lm &&

echo "$C" &&

(for i in {1..10}; do

/usr/bin/time -f '%e' ./coingame; done

);

doneFor levels of parallelism on my laptop from 1–8 threads, the average times are: 28.98s/15.44s/10.70s/9.22s/9.10s/8.4s/7.66s/7.20s.

Caio Oliveira provides a parallel C++ implementation using std::async; compiled with the same optimizations on (g++ -Ofast -fwhole-program -march=native -pthread --std=c++11 kc.cpp -o kc), it runs in ~1m16s:

#include <iostream>

#include <vector>

#include <map>

#include <set>

#include <bitset>

#include <list>

#include <stack>

#include <queue>

#include <deque>

#include <string>

#include <sstream>

#include <algorithm>

#include <cstdio>

#include <cstring>

#include <future>

#include <ctime>

using namespace std;

const int MAX_ROUND = 300;

const int MAX_WEALTH = 25000;

const int BETS_DELTA = 1;

double memo[MAX_WEALTH + 1][2];

inline double vWin(int wealth, int bet, int roundIdx) {

if(wealth + bet < MAX_WEALTH) {

return memo[wealth + bet][!roundIdx];

}

else {

return MAX_WEALTH;

}

}

inline double vLose(int wealth, int bet, int roundIdx) {

if (wealth - bet == 0) {

return 0;

}

else {

return memo[wealth - bet][!roundIdx];

}

}

inline double v(int wealth, int bet, int roundIdx) {

return .6 * vWin(wealth, bet, roundIdx) + .4 * vLose(wealth, bet, roundIdx);

}

template<typename RAIter>

void calc_round(RAIter beg, RAIter end, int roundIdx){

int len = distance(beg, end);

if(len < 1000) {

for(RAIter p = beg; p != end; ++p) {

int wealth = distance(memo, p);

(*p)[roundIdx] = v(wealth, wealth, roundIdx);

for (int bet = 0; bet < wealth; bet += BETS_DELTA) {

memo[wealth][roundIdx] = max(memo[wealth][roundIdx], v(wealth, bet, roundIdx));

}

}

}

else {

RAIter mid = beg + len/2;

future<void> handle = async(launch::async, calc_round<RAIter>, mid, end, roundIdx);

calc_round(beg, mid, roundIdx);

}

}

double calc_table() {

bool roundIdx = 0;

for(int i = 0; i <= MAX_WEALTH; ++i) {

memo[i][!roundIdx] = i;

}

for(int round = MAX_ROUND - 1; round >= 0; --round) {

calc_round(memo, memo + MAX_WEALTH, roundIdx);

roundIdx ^= 1;

}

return memo[2500][!roundIdx] / 100.;

}

int main() {

ios_base::sync_with_stdio(0);

cin.tie(0);

clock_t begin = clock();

double res = calc_table();

clock_t end = clock();

cout << "result: " << res << "(elapsed: " << (double(end - begin) / CLOCKS_PER_SEC) << ")" << endl;

}Given the locality of the access patterns, I have to wonder how well a GPU implementation might perform? It doesn’t seem amenable to being expressed as a large matrix multiplication, but each GPU core could be responsible for evaluating a terminal node and do nearby lookups from there. (And the use in finance of GPUs to compute the lattice model/binomial options pricing model/trinomial tree model, which are similar, suggests it should be possible.)

Exact Formula

Arthur Breitman notes that “you can compute U(w,b) in 𝒪(b) if you allow bets to be any fraction of w. It’s piecewise linear, the cutoff points and slopes follow a very predictable pattern”1 and provides a formula for calculating the value function without constructing a decision tree:

A short version in R:

V <- function(w, b, m=250) {

b <- round(b) # rounds left must be integer

j <- qbinom(w/m, b, 0.5)

1.2^b * 1.5^-j * (w + (m/2 * sum(1.5^(j:0) * pbinom(0:j-1,b,1/2)))) }(This is sufficiently fast for all values tested that it is not worth memoizing using the relatively slow memoise.)

Re-implementing in Python is a little tricky because NumPy/SciPy use different names and Python doesn’t support R’s range notation, but the following seems like it works; and then with a value function, we can implement a planner to choose optimal betting amounts, memoize it with repoze.lru for time-savings over multiple games, and then it is simple to set up a Gym environment and start playing the Kelly coin-flip game optimally (and indeed usually reaching the $250 limit):

from scipy.stats import binom

import numpy as np

from repoze.lru import lru_cache

def V(w, b, m=250):

if w>=250:

return 250

if w<=0:

return 0

if b==0:

return w

else:

try:

j = binom.ppf(float(w)/float(m), b, 0.5)

return 1.2**b * 1.5**-j * (w + m/2 *

sum(np.multiply(binom.cdf(map(lambda(x2):x2-1, range(0,int(j+1))),b,0.5),

map(lambda(x): 1.5**x, list(reversed(range(0, int(j+1))))))))

except ValueError:

print "Error:", (w,b,m)

@lru_cache(None)

def VPplan(w, b):

# optimization: short-circuit

if w<=0 or w>=250:

return 0

else:

if b==0:

return w

else:

possibleBets = map(lambda(pb): float(pb)/100.0, range(0*100,int((w*100)+1),1))

returns = map(lambda(pb): 0.6*V(w+pb, b-1) + 0.4*V(w-pb,b-1), possibleBets)

return float(returns.index(max(returns)))/100.0import gym

env = gym.make('KellyCoinflip-v0')

## play 500 games and calculate mean reward:

rewards = []

for n in range(0,500):

done = False

reward = 0

while not done:

w = env._get_obs()[0][0]

b = env._get_obs()[1]

bet = VPplan(w, b)

results = env._step(bet*100)

print n, w, b, bet, "results:", results

reward = reward+results[1]

done = results[2]

rewards.append(reward)

env._reset()

print sum(rewards)/len(rewards)

# 247.525564356Graphing the Value Function

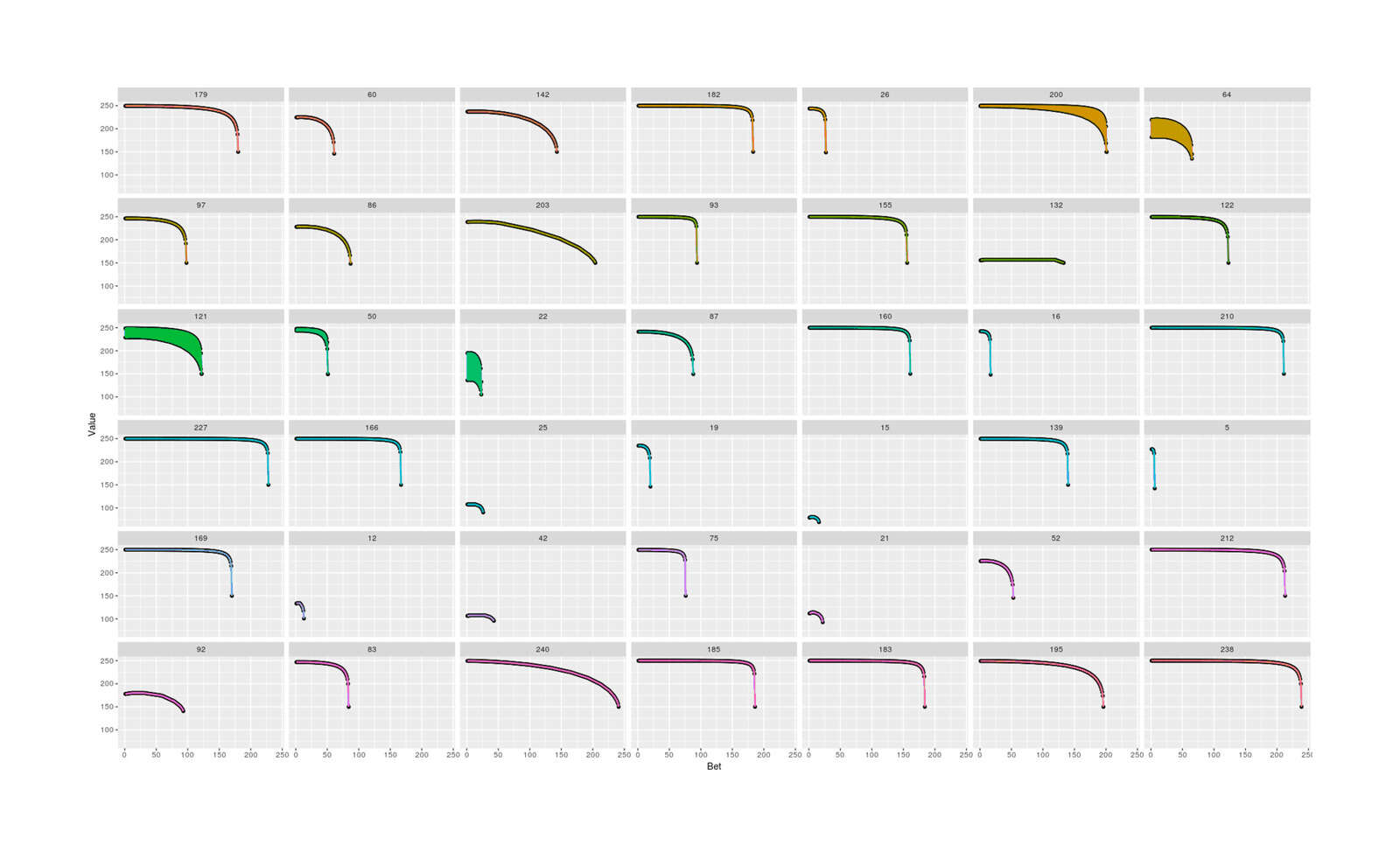

What does the range of possible bets in each state look like? Does it look bitonic and like an inverted V one could do binary search on? We can sample some random states, compute all the possible bets, and plot them by index to see if the payoffs form curves:

# return all actions' values instead of the max action:

VPplans <- function(w, b) {

if (b==0) { return (0); } else {

returns <- sapply(seq(0, w), function(wp) { mf(wp, w, b); })

return(returns); } }

plans <- data.frame()

n <- 47

for (i in 1:n) {

# omit the uninteresting cases of w=0/250 and b=0/300:

w <- round(runif(n=1, 0+1, 250-1))

b <- round(runif(n=1, 0+1, 300-1))

plan <- VPplans(w,b)

plans <- rbind(plans, data.frame(W=as.factor(w), B=as.factor(b), Bet=1:(length(plan)), Value=plan))

}

library(ggplot2)

p <- qplot(x=Bet, y=Value, data=plans)

p + facet_wrap(~W) + geom_line(aes(x=Bet, y=Value, color=W), size = 1) + theme(legend.position = "none")For the most part they do generally follow a quadratic or inverted-V like shape, with the exception of several which wiggle up and down while following the overall curve (numeric or approximation error?).

47 randomly-sampled states with all legal bets’ exact value plotted, showing generally smooth curves

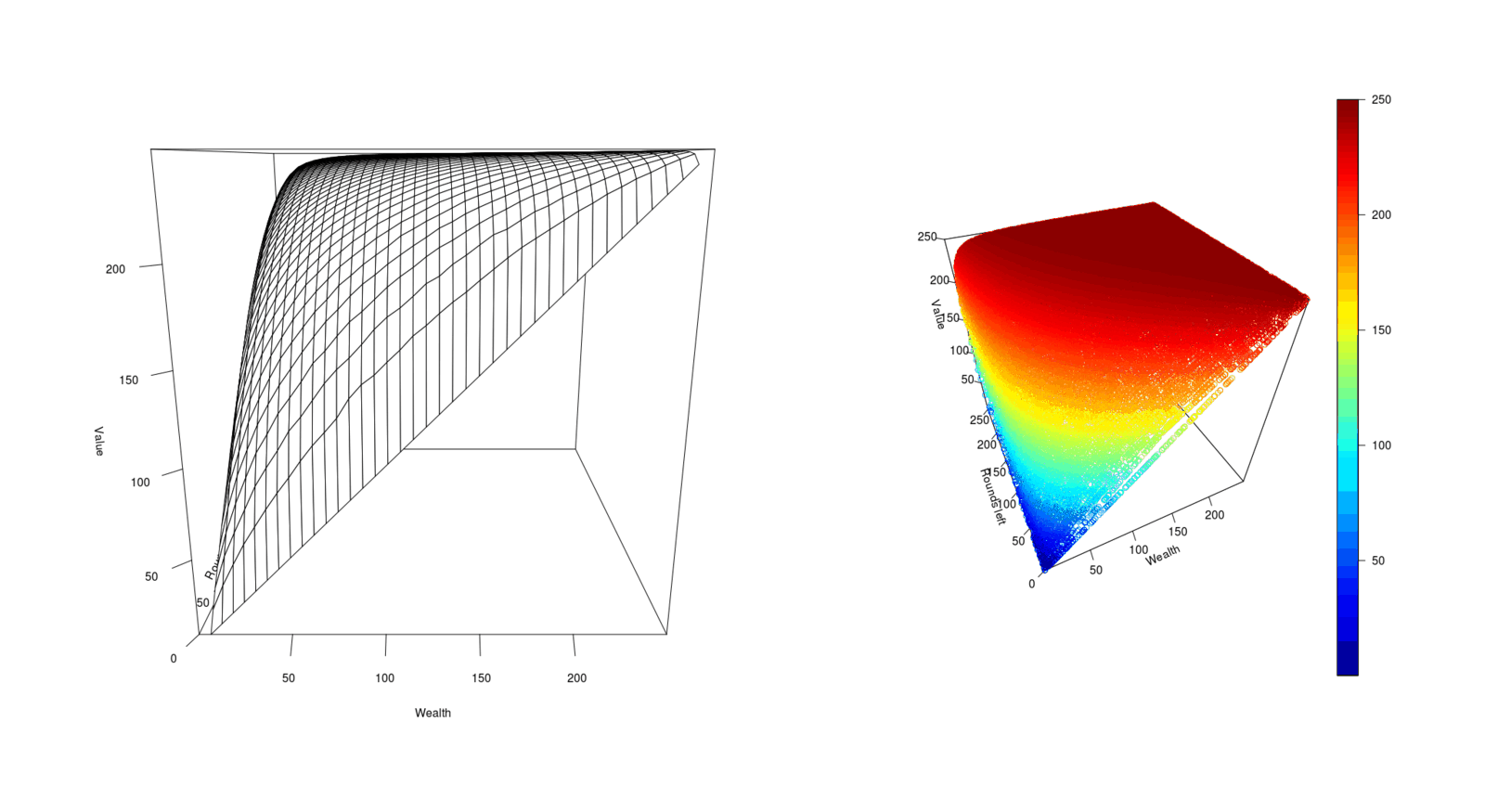

With an exact value function, we can also visualize it to get an idea of how the wealth/rounds interact and how difficult it would be to approximate it. We can generate many iterates, interpolate, and graph:

n <- 50000

w <- runif(n, min=0, max=250)

b <- sample(0:250, n, replace=TRUE)

v <- unlist(Map(V, w, b))

df <- data.frame(Wealth=w, Rounds=b, Value=v)

library(akima)

library(plot3D)

dfM <- interp(df$Wealth, df$Rounds, df$Value, duplicate="strip")

par(mfrow=c(1,2), mar=0)

persp(dfM$x, dfM$y, dfM$z, xlab="Wealth", ylab="Rounds left", zlab="Value", ticktype="detailed")

scatter3D(df$Wealth, df$Rounds, df$Value, theta=-25, xlab="Wealth", ylab="Rounds left", zlab="Value", ticktype="detailed")

3D plots of the value function varying with wealth available to bet & rounds left to bet in in order to reach the $250 maximum payout

Approximating the Exact Value Function

The value function winds up looking simple, a smooth landscape tucked down at the edges, which is perhaps not too surprising, as the optimal betting patterns tend to be small amounts except when there are very few rounds left (and one should bet most or all of one’s wealth) or one is nearing the maximum wealth cap (and should very gradually glide in).

A value function (or the policy function) computed by decision trees is bulky and hard to carry around, weighing perhaps gigabytes in size. Looking at this value function, it’s clear that we can do model distillation and easily approximate it by another algorithm like random forests or neural networks. (Usually, a NN has a hard time learning a value function because it’s a reinforcement learning problem where it’s unclear how actions are linked to rewards, but in this case, since we can already calculate it, it becomes the far easier setting of supervised learning in mapping a state to a value/policy.)

A random forest works fine, getting 0.4 RMSE:

library(randomForest)

r <- randomForest(Value ~ Wealth + Rounds, ntree=1000, data=df); r

# ...

# Mean of squared residuals: 1.175970325

# % Var explained: 99.94

sqrt((predict(r) - df$Value)^2)

# [1] 0.407913389It can also be approximated with NN. For example, in R one can use MXNet and a 1×30 or 2×152 ReLu feedforward NN will solve it to <4 RMSE:

## very slow

library(neuralnet)

n <- neuralnet(Value ~ Wealth + Rounds, data=df, hidden=c(20,20))

# prediction(n)

## GPU-accelerated:

library(mxnet)

mx.set.seed(0)

## 2×30 ReLu FC NN for linear regression with RMSE loss:

data <- mx.symbol.Variable("data")

perLayerNN <- 30

fc1 <- mx.symbol.FullyConnected(data, name="fc1", num_hidden=perLayerNN)

act1 <- mx.symbol.Activation(fc1, name="relu1", act_type="relu")

fc2 <- mx.symbol.FullyConnected(act1, name="fc2", num_hidden=perLayerNN)

act2 <- mx.symbol.Activation(fc2, name="relu2", act_type="relu")

fc3 <- mx.symbol.FullyConnected(act2, name="fc2", num_hidden=perLayerNN)

act3 <- mx.symbol.Activation(fc3, name="relu2", act_type="relu")

finalFc <- mx.symbol.FullyConnected(act3, num_hidden=1)

lro <- mx.symbol.LinearRegressionOutput(finalFc)

model <- mx.model.FeedForward.create(lro, X=as.matrix(subset(df,select=c("Wealth","Rounds"))), y=df$Value,

ctx=mx.gpu(), num.round=50000,

learning.rate=5e-6, eval.metric=mx.metric.rmse, array.layout="rowmajor")Some experimenting with fitting polynomial regressions did not give any decent fits for less than quintic, but probably some combination of logs and polynomials could compactly encode the value function.

Simulation Performance

The implementations here could be wrong, or decision trees not actually optimal like claimed. We can test it by directly comparing the performance in a simulation of the coin-flipping game, comparing the performance of mVPplan vs the 20% Kelly criterion and a simple bet-everything strategy:

library(memoise)

f <- function(x, w, b) { 0.6*V(min(w+x,250), b-1) + 0.4*V(w-x, b-1)}

mf <- memoise(f)

V <- function(w, b, m=250) { j <- qbinom(w/m,b,1/2);

1.2^b * 1.5^-j * (w+m/2 * sum(1.5^(j:0) * pbinom(0:j-1,b,1/2))) }

VPplan <- function(w, b) {

if (b==0) { return (0); } else {

returns <- sapply(seq(0, w), function(wp) { mf(wp, w, b); })

return (which.max(returns)-1); }

}

mVPplan <- memoise(VPplan)

game <- function(strategy, wealth, betsLeft) {

if (betsLeft>0) {

bet <- strategy(wealth, betsLeft)

wealth <- wealth - bet

flip <- rbinom(1,1,p=0.6)

winnings <- 2*bet*flip

wealth <- min(wealth+winnings, 250)

return(game(strategy, wealth, betsLeft-1)) } else { return(wealth); } }

simulateGame <- function(s, w=25, b=300, iters=5000) { mean(replicate(iters, game(s, w, b))) }

## Various strategies (the decision tree strategy is already defined as 'mVPplan'):

kelly <- function(w, b) { 0.20 * w }

smarterKelly <- function(w, b) { if(w==250) {0} else { 0.2 * w } }

maximizer <- function(w, b) { w; }

smarterMaximizer <- function(w, b) { if(w>=250) { 0 } else {w}; }

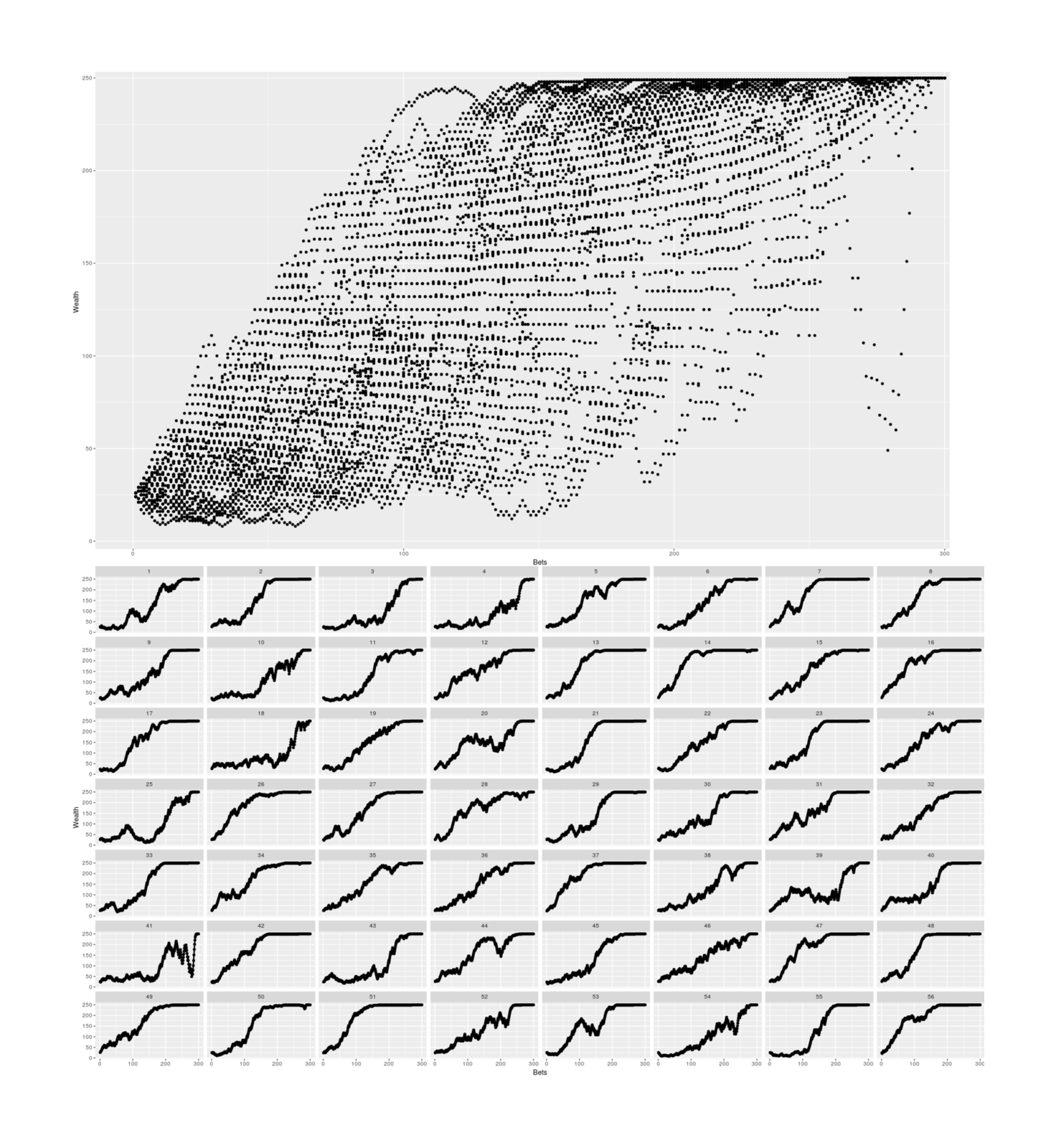

## examining a sample of how wealth evolves over the course of a sample of b=300 games:

library(parallel)

library(plyr)

i <- 56

games <- ldply(mclapply(1:i, function(ith) {

b <- 300

wealth <- 25

wealths <- numeric(b)

while (b>0) {

bet <- mVPplan(wealth, b)

wealth <- wealth-bet

flip <- rbinom(1,1,p=0.6)

winnings <- 2*bet*flip

wealth <- min(wealth+winnings, 250)

wealths[b] <- wealth

b <- b-1

}

return(data.frame(Game=ith, Bets=300:1, Wealth=wealths)) }

))

library(ggplot2)

p <- qplot(x=Bets, y=Wealth, data=games)

p + facet_wrap(~Game) + geom_line(aes(x=Bets, y=Wealth), size = 1) + theme(legend.position = "none")Simulating 56 games, we can see how while the optimal strategy looks fairly risky, it usually wins in the end:

Simulation of winnings over a sample of b = 300 games while using the optimal strategy.

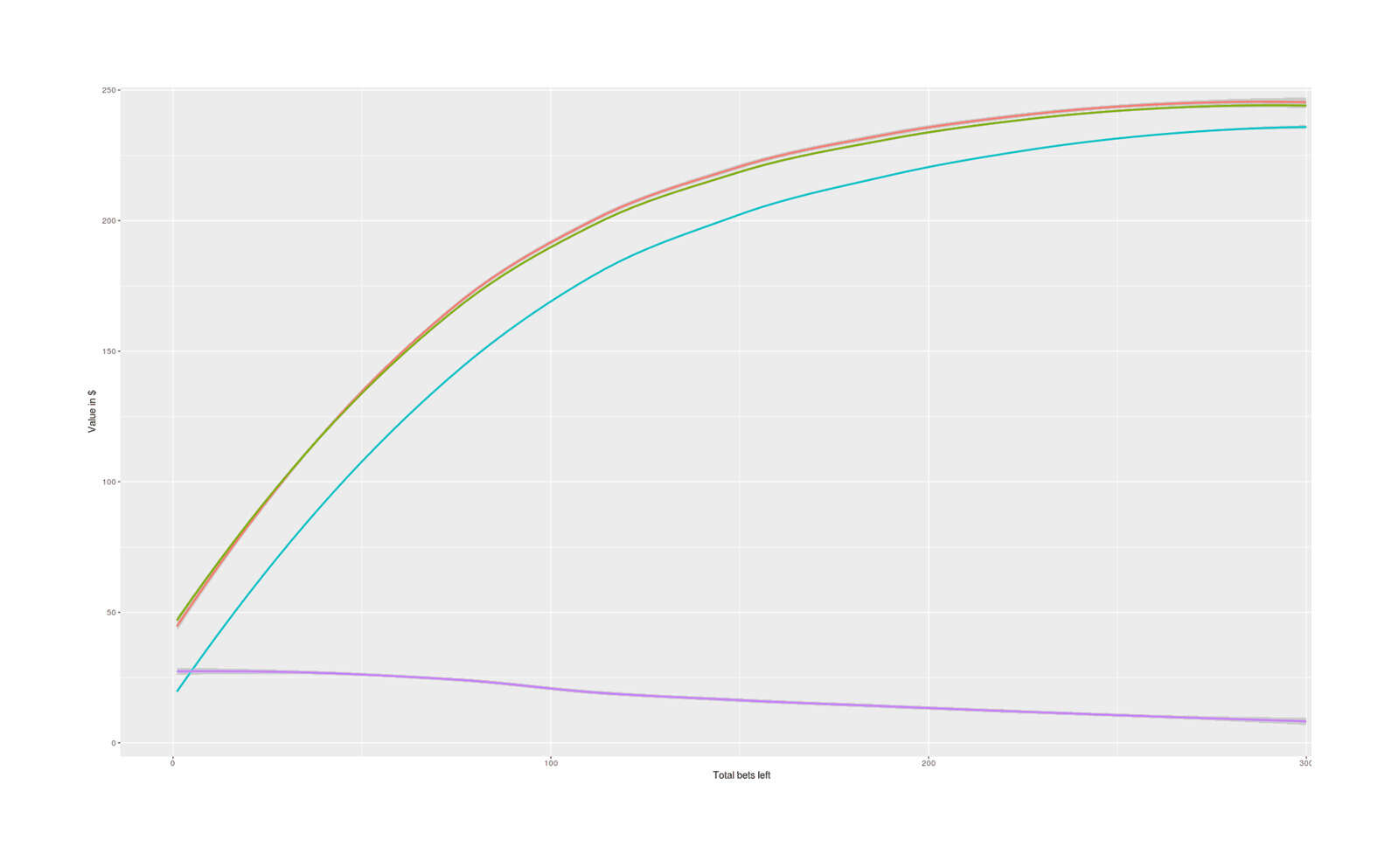

We can also examine how the strategy does for all the other possible horizons and compare with the Kelly.

## Comparing performance in the finite horizon setting up to b=300:

bs <- 1:300

ldply(mclapply(bs, function(bets) {

ky <- round(simulateGame(smarterKelly, b=bets), digits=1)

dt <- round(simulateGame(mVPplan, b=bets), digits=1)

gain <- dt-ky;

print(paste(bets, round(V(25, bets), digits=2), dt, ky, gain)) }

))Simulating 5,000 games for each b:

|

Total bets |

Value function |

Decision tree performance |

Kelly performance |

Difference |

|---|---|---|---|---|

|

1 |

30 |

30.1 |

26 |

4.1 |

|

2 |

36 |

35.5 |

27 |

8.5 |

|

3 |

43.2 |

42.4 |

28.2 |

14.2 |

|

4 |

45.36 |

46 |

29.3 |

16.7 |

|

5 |

47.95 |

49.3 |

30.5 |

18.8 |

|

6 |

53.65 |

52.5 |

31.5 |

21 |

|

7 |

54.59 |

51.4 |

32.7 |

18.7 |

|

8 |

57.57 |

56.1 |

34.1 |

22 |

|

9 |

61.83 |

61.4 |

35.6 |

25.8 |

|

10 |

62.6 |

63 |

36.8 |

26.2 |

|

11 |

66.76 |

68.6 |

38.3 |

30.3 |

|

12 |

68.22 |

66.9 |

40.2 |

26.7 |

|

13 |

70.6 |

70.7 |

41.8 |

28.9 |

|

14 |

74.13 |

71.2 |

43.2 |

28 |

|

15 |

75 |

73.8 |

44.9 |

28.9 |

|

16 |

79.13 |

79.7 |

46.8 |

32.9 |

|

17 |

79.75 |

79.1 |

47.8 |

31.3 |

|

18 |

82.5 |

84.1 |

50.1 |

34 |

|

19 |

84.71 |

83.7 |

52.7 |

31 |

|

20 |

86.25 |

85.6 |

54.2 |

31.4 |

|

21 |

89.8 |

88.6 |

56.4 |

32.2 |

|

22 |

90.28 |

90.2 |

56.5 |

33.7 |

|

23 |

93.85 |

94.8 |

58.7 |

36.1 |

|

24 |

94.49 |

93.7 |

60.7 |

33 |

|

25 |

97 |

95 |

62.2 |

32.8 |

|

26 |

98.83 |

97.1 |

65.2 |

31.9 |

|

27 |

100.38 |

98.7 |

68.1 |

30.6 |

|

28 |

103.23 |

102.1 |

69.4 |

32.7 |

|

29 |

103.95 |

102.5 |

73.4 |

29.1 |

|

30 |

107.61 |

107.7 |

73.7 |

34 |

|

31 |

107.64 |

107.3 |

74.7 |

32.6 |

|

32 |

110.4 |

106.7 |

76.1 |

30.6 |

|

33 |

111.41 |

107.9 |

79.2 |

28.7 |

|

34 |

113.38 |

115.1 |

80.6 |

34.5 |

|

35 |

115.24 |

115.4 |

82.2 |

33.2 |

|

36 |

116.48 |

116.1 |

84.2 |

31.9 |

|

37 |

119.09 |

118.5 |

87.1 |

31.4 |

|

38 |

119.69 |

119.9 |

86 |

33.9 |

|

39 |

122.94 |

125.4 |

89.6 |

35.8 |

|

40 |

122.96 |

119.7 |

92 |

27.7 |

|

41 |

125.54 |

124.2 |

95.2 |

29 |

|

42 |

126.28 |

124.4 |

96.9 |

27.5 |

|

43 |

128.21 |

128.5 |

97.1 |

31.4 |

|

44 |

129.63 |

130.5 |

100.3 |

30.2 |

|

45 |

130.96 |

130.1 |

100 |

30.1 |

|

46 |

132.98 |

131.9 |

100.9 |

31 |

|

47 |

133.78 |

132.4 |

104.2 |

28.2 |

|

48 |

136.33 |

134.2 |

104.5 |

29.7 |

|

49 |

136.64 |

133.6 |

107.7 |

25.9 |

|

50 |

139.36 |

141.2 |

110.1 |

31.1 |

|

51 |

139.54 |

139.4 |

113.6 |

25.8 |

|

52 |

141.68 |

140 |

113 |

27 |

|

53 |

142.45 |

140.7 |

113.5 |

27.2 |

|

54 |

144.08 |

141.8 |

115.5 |

26.3 |

|

55 |

145.36 |

145 |

116.3 |

28.7 |

|

56 |

146.52 |

150.2 |

119.7 |

30.5 |

|

57 |

148.26 |

146.4 |

119.7 |

26.7 |

|

58 |

149 |

143.9 |

120.6 |

23.3 |

|

59 |

151.15 |

151.8 |

124.2 |

27.6 |

|

60 |

151.5 |

148.5 |

124.4 |

24.1 |

|

61 |

154.01 |

151.9 |

125.5 |

26.4 |

|

62 |

154.01 |

150.9 |

127.1 |

23.8 |

|

63 |

156.05 |

157.9 |

128.4 |

29.5 |

|

64 |

156.52 |

154.3 |

129.9 |

24.4 |

|

65 |

158.14 |

155.5 |

132.3 |

23.2 |

|

66 |

159.03 |

156.8 |

132.1 |

24.7 |

|

67 |

160.25 |

157.4 |

133.2 |

24.2 |

|

68 |

161.53 |

159.2 |

137.1 |

22.1 |

|

69 |

162.39 |

160.2 |

135.9 |

24.3 |

|

70 |

164 |

161 |

137.8 |

23.2 |

|

71 |

164.54 |

162.2 |

137.8 |

24.4 |

|

72 |

166.46 |

166.7 |

138.3 |

28.4 |

|

73 |

166.7 |

165.2 |

142.7 |

22.5 |

|

74 |

168.81 |

169 |

145 |

24 |

|

75 |

168.85 |

168.3 |

143.1 |

25.2 |

|

76 |

170.59 |

169.5 |

144.4 |

25.1 |

|

77 |

171 |

165.6 |

146.4 |

19.2 |

|

78 |

172.39 |

171.2 |

147.5 |

23.7 |

|

79 |

173.13 |

171.3 |

150.6 |

20.7 |

|

80 |

174.21 |

170.6 |

151.8 |

18.8 |

|

81 |

175.24 |

174 |

152.3 |

21.7 |

|

82 |

176.04 |

175.5 |

153.8 |

21.7 |

|

83 |

177.34 |

174.9 |

151.8 |

23.1 |

|

84 |

177.87 |

177.6 |

152.5 |

25.1 |

|

85 |

179.4 |

178.5 |

157.3 |

21.2 |

|

86 |

179.7 |

177.1 |

156 |

21.1 |

|

87 |

181.44 |

178.9 |

158.1 |

20.8 |

|

88 |

181.52 |

179.6 |

160.1 |

19.5 |

|

89 |

183.15 |

181.1 |

159 |

22.1 |

|

90 |

183.33 |

182.8 |

163.3 |

19.5 |

|

91 |

184.68 |

184.2 |

162.3 |

21.9 |

|

92 |

185.13 |

183.4 |

162.5 |

20.9 |

|

93 |

186.21 |

187.5 |

165.1 |

22.4 |

|

94 |

186.9 |

185.3 |

160.5 |

24.8 |

|

95 |

187.75 |

188.6 |

164.8 |

23.8 |

|

96 |

188.66 |

186.4 |

167.1 |

19.3 |

|

97 |

189.29 |

187.6 |

168 |

19.6 |

|

98 |

190.39 |

188.9 |

167.7 |

21.2 |

|

99 |

190.82 |

187.8 |

169.8 |

18 |

|

100 |

192.1 |

190.7 |

168.4 |

22.3 |

|

101 |

192.34 |

192.5 |

171.8 |

20.7 |

|

102 |

193.78 |

192.6 |

170 |

22.6 |

|

103 |

193.86 |

193.2 |

170.7 |

22.5 |

|

104 |

195.24 |

194.1 |

170 |

24.1 |

|

105 |

195.35 |

192.9 |

174.1 |

18.8 |

|

106 |

196.52 |

195.2 |

176.8 |

18.4 |

|

107 |

196.84 |

194.5 |

173.4 |

21.1 |

|

108 |

197.79 |

194.4 |

179.1 |

15.3 |

|

109 |

198.3 |

195.5 |

176 |

19.5 |

|

110 |

199.07 |

196.7 |

179.1 |

17.6 |

|

111 |

199.74 |

198.7 |

181.2 |

17.5 |

|

112 |

200.34 |

201.1 |

178.2 |

22.9 |

|

113 |

201.17 |

197.9 |

180.9 |

17 |

|

114 |

201.6 |

200.3 |

181.2 |

19.1 |

|

115 |

202.57 |

202 |

183.2 |

18.8 |

|

116 |

202.85 |

201.6 |

181 |

20.6 |

|

117 |

203.94 |

201.7 |

181.4 |

20.3 |

|

118 |

204.09 |

201.2 |

183.6 |

17.6 |

|

119 |

205.29 |

205.9 |

185 |

20.9 |

|

120 |

205.32 |

201.3 |

186.8 |

14.5 |

|

121 |

206.4 |

204 |

182.2 |

21.8 |

|

122 |

206.53 |

203.7 |

186.2 |

17.5 |

|

123 |

207.44 |

205.7 |

186.1 |

19.6 |

|

124 |

207.72 |

205.2 |

189.5 |

15.7 |

|

125 |

208.48 |

203.9 |

191.4 |

12.5 |

|

126 |

208.9 |

209.3 |

188 |

21.3 |

|

127 |

209.52 |

206.7 |

187.7 |

19 |

|

128 |

210.06 |

209.5 |

188.5 |

21 |

|

129 |

210.54 |

206.5 |

192.4 |

14.1 |

|

130 |

211.2 |

211.1 |

190.9 |

20.2 |

|

131 |

211.56 |

207.1 |

195.6 |

11.5 |

|

132 |

212.32 |

210.3 |

194 |

16.3 |

|

133 |

212.57 |

212.1 |

191.1 |

21 |

|

134 |

213.42 |

211 |

192.7 |

18.3 |

|

135 |

213.56 |

210.2 |

195.8 |

14.4 |

|

136 |

214.5 |

213.3 |

196.8 |

16.5 |

|

137 |

214.55 |

211.3 |

194.4 |

16.9 |

|

138 |

215.46 |

212 |

196.6 |

15.4 |

|

139 |

215.52 |

210.8 |

197.4 |

13.4 |

|

140 |

216.3 |

215.3 |

197 |

18.3 |

|

141 |

216.47 |

217.8 |

199.3 |

18.5 |

|

142 |

217.13 |

215.4 |

197.3 |

18.1 |

|

143 |

217.41 |

214.8 |

196.2 |

18.6 |

|

144 |

217.96 |

213.9 |

200.1 |

13.8 |

|

145 |

218.33 |

215.7 |

200.4 |

15.3 |

|

146 |

218.77 |

217.4 |

200.1 |

17.3 |

|

147 |

219.24 |

217.5 |

199.7 |

17.8 |

|

148 |

219.58 |

218.5 |

200.3 |

18.2 |

|

149 |

220.13 |

218.4 |

200.3 |

18.1 |

|

150 |

220.39 |

220.4 |

201.9 |

18.5 |

|

151 |

221 |

218.1 |

201.6 |

16.5 |

|

152 |

221.18 |

220.5 |

203.9 |

16.6 |

|

153 |

221.86 |

220.6 |

202.6 |

18 |

|

154 |

221.96 |

220.5 |

205.2 |

15.3 |

|

155 |

222.69 |

218.7 |

203.1 |

15.6 |

|

156 |

222.72 |

220.6 |

204.4 |

16.2 |

|

157 |

223.43 |

220.6 |

203.3 |

17.3 |

|

158 |

223.48 |

221.1 |

202.8 |

18.3 |

|

159 |

224.09 |

222.6 |

207.1 |

15.5 |

|

160 |

224.22 |

224.5 |

207.5 |

17 |

|

161 |

224.74 |

220.8 |

206 |

14.8 |

|

162 |

224.95 |

224.2 |

208.1 |

16.1 |

|

163 |

225.39 |

223.8 |

208 |

15.8 |

|

164 |

225.67 |

222.8 |

209 |

13.8 |

|

165 |

226.03 |

223.4 |

208.6 |

14.8 |

|

166 |

226.37 |

224 |

210 |

14 |

|

167 |

226.66 |

225.3 |

209.2 |

16.1 |

|

168 |

227.06 |

224.1 |

211.6 |

12.5 |

|

169 |

227.28 |

224.5 |

210.5 |

14 |

|

170 |

227.73 |

223.8 |

211 |

12.8 |

|

171 |

227.89 |

226.9 |

209.1 |

17.8 |

|

172 |

228.39 |

226 |

212.2 |

13.8 |

|

173 |

228.49 |

226 |

211.8 |

14.2 |

|

174 |

229.04 |

226.6 |

212.1 |

14.5 |

|

175 |

229.09 |

227.9 |

211.3 |

16.6 |

|

176 |

229.67 |

226.4 |

211.5 |

14.9 |

|

177 |

229.67 |

228 |

214 |

14 |

|

178 |

230.18 |

228.4 |

215.1 |

13.3 |

|

179 |

230.24 |

227.5 |

213.3 |

14.2 |

|

180 |

230.68 |

229.2 |

213.6 |

15.6 |

|

181 |

230.8 |

229.5 |

215 |

14.5 |

|

182 |

231.18 |

228.7 |

213.9 |

14.8 |

|

183 |

231.36 |

229.8 |

216 |

13.8 |

|

184 |

231.67 |

230.6 |

214.4 |

16.2 |

|

185 |

231.9 |

231 |

213.1 |

17.9 |

|

186 |

232.16 |

231.2 |

216.2 |

15 |

|

189 |

232.94 |

231.1 |

217.9 |

13.2 |

|

190 |

233.1 |

230.4 |

217.6 |

12.8 |

|

191 |

233.45 |

231.1 |

218.4 |

12.7 |

|

192 |

233.56 |

231.9 |

219 |

12.9 |

|

193 |

233.94 |

232.1 |

216.6 |

15.5 |

|

194 |

234.02 |

232 |

219.3 |

12.7 |

|

195 |

234.43 |

231.8 |

217.5 |

14.3 |

|

196 |

234.47 |

232.4 |

220.6 |

11.8 |

|

197 |

234.9 |

233.5 |

218.6 |

14.9 |

|

198 |

234.9 |

233 |

219.3 |

13.7 |

|

199 |

235.3 |

233.4 |

220.2 |

13.2 |

|

200 |

235.33 |

233.8 |

221.1 |

12.7 |

|

201 |

235.67 |

235.5 |

218.8 |

16.7 |

|

202 |

235.75 |

233 |

222 |

11 |

|

203 |

236.05 |

232.9 |

220.4 |

12.5 |

|

204 |

236.17 |

233.9 |

220.1 |

13.8 |

|

205 |

236.42 |

234.8 |

221 |

13.8 |

|

206 |

236.57 |

234.4 |

221.4 |

13 |

|

207 |

236.78 |

234.8 |

222.6 |

12.2 |

|

208 |

236.96 |

236.4 |

222.5 |

13.9 |

|

209 |

237.14 |

234.6 |

223.5 |

11.1 |

|

210 |

237.35 |

236.6 |

222.6 |

14 |

|

211 |

237.49 |

235.7 |

221.9 |

13.8 |

|

212 |

237.73 |

234.4 |

222.4 |

12 |

|

213 |

237.83 |

234.9 |

226 |

8.9 |

|

214 |

238.1 |

237.3 |

223.9 |

13.4 |

|

215 |

238.17 |

237 |

223.6 |

13.4 |

|

216 |

238.46 |

235.7 |

225.1 |

10.6 |

|

217 |

238.5 |

236.6 |

223.6 |

13 |

|

218 |

238.81 |

237 |

226.1 |

10.9 |

|

219 |

238.83 |

236.4 |

225 |

11.4 |

|