Are Sunk Costs Fallacies?

Human and animal sunk costs often aren’t, and sunk cost bias may be useful on an individual level to encourage learning. Convincing examples of sunk cost bias typically operate on organizational levels and are probably driven by non-psychological causes like competition.

It is time to let bygones be bygones.

Khieu Samphan1, Khmer Rouge head of state

The sunk cost fallacy (“Concorde fallacy”, “escalation bias”, “commitment effect” etc.) could be defined as an agent ignoring that option X has the highest marginal return and instead choosing option Y because it chose option Y many times before, or simply as “throwing good money after bad”. It can be seen as an attempt to derive some gain from mistaken past choices. (A slogan for avoiding sunk costs: “give up your hopes for a better yesterday!”) The single most famous example, and the reason for it also being called the “Concorde fallacy”, would be the British and French governments investing hundreds of millions of dollars into the development of a supersonic passenger jet despite knowing that it would never succeed commercially2. Since 1985’s3 forceful investigation & denunciation, it has become received wisdom4 that sunk costs are a bane of humanity.

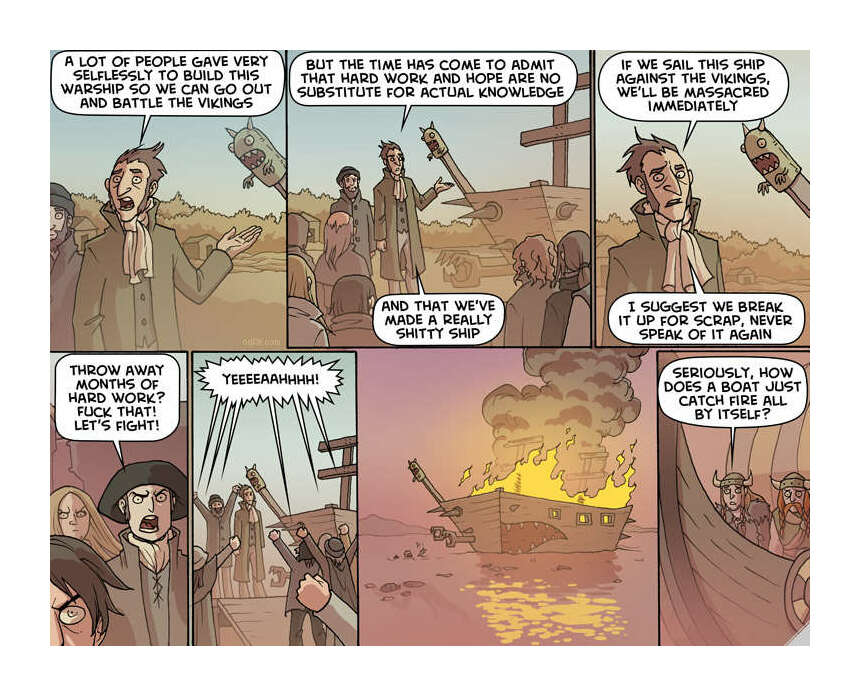

But to what extent is the “sunk cost fallacy” a real fallacy?

Below, I argue the following:

- sunk costs are probably issues in big organizations
  - but maybe not ones that can be helped
- sunk costs are not issues in animals
- sunk costs appear to exist in children & adults
  - but many apparent instances of the fallacy are better explained as part of a learning strategy
  - and there’s little evidence sunk cost-like behavior leads to actual problems in individuals
- much of what we call “sunk cost” looks like simple carelessness & thoughtlessness

Subtleties

One cannot proceed from the informal to the formal by formal means.

A “sunk cost fallacy” is clearly a fallacy in a simple model: ‘imagine an agent A who chooses between option X which will return $10 and option Y which will return $6, and agent A in previous rounds chose Y’. If A chooses X, it will be better off by $4 than if it chooses Y. This is correct and as hard to dispute as ‘A implies B; A; therefore B’. We can call both examples valid. But in philosophy, when we discuss modus ponens, we agree that it is always valid, but we do not always agree that it is sound: that A does in fact imply B, or that A really is the case, and so B is the case. ‘The moon being made of cheese implies the astronauts walked on cheese; the moon is made of cheese; therefore the astronauts walked on cheese’ is logically valid, but not sound, since we don’t think that the moon is made of cheese. Or we differ with the first line as well, pointing out that only some of the Apollo astronauts walked on the moon. We reject the soundness.

We can and must do the same thing in economics—but ceteris is never paribus. In simple models, sunk cost is clearly a valid fallacy to be avoided. But is the real world compliant enough to make the fallacy sound? Notice the assumptions we had to make: we wish away issues of risk (and risk aversion), long-delayed consequences, changes in options as a result of past investment, and so on.

We can illustrate this by looking at an even more sacred aspect of normative economics: exponential discounting. One of the key justifications of exponential discounting is that any other discounting can be money-pumped by an exponential agent investing at each time period at whatever the prevailing return is or loaning at appropriate times. (George Ainslie in The Breakdown of Will gives the example of a hyperbolic agent improvidently selling its winter coat every spring and buying it just before the snowstorms every winter, being money-pumped by the consistent exponential agent.) One of the assumptions is that certain rates of investment return will be available; but in the real world, rates can stagger around for long periods. “Hyperbolic discounting is rational: valuing the far future with uncertain discount rates” (Farmer & Geanakoplos 2009)5 argues that if returns follow a more geometric random walk, hyperbolic discounting is superior6. Are they correct? They are not much-cited or criticized. But even if they are wrong about hyperbolic discounting, it needs proving that exponential discounting does in fact deal correctly with changing returns. (The market over the past few years has not turned in the proverbial 8–9% annual returns, and one wonders if there will ever be a big bull market that makes up for the great stagnation.)
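To make the money-pump worry concrete, here is a minimal Python sketch of the preference reversal that separates the two discounting schemes. The payoffs ($10 sooner versus $15 two periods later), the 10% rate, and k = 1 are illustrative assumptions of mine, not figures from Ainslie or from Farmer & Geanakoplos, and the sketch says nothing about which scheme copes better with uncertain returns.

```python
def exponential(delay, rate=0.10):
    """Exponential discount factor: a constant proportional loss per period."""
    return (1 + rate) ** -delay


def hyperbolic(delay, k=1.0):
    """Hyperbolic discount factor 1 / (1 + k * delay)."""
    return 1 / (1 + k * delay)


def prefers_larger_later(discount, wait, small=10.0, large=15.0, gap=2):
    """True if `large` at wait + gap is valued above `small` at wait."""
    return large * discount(wait + gap) > small * discount(wait)


def choices(discount, horizon=10):
    """The same choice, re-evaluated as the payoffs draw nearer (wait = horizon .. 0)."""
    return [prefers_larger_later(discount, wait) for wait in range(horizon, -1, -1)]


for name, discount in [("exponential", exponential), ("hyperbolic", hyperbolic)]:
    print(name, ["later" if c else "sooner" for c in choices(discount)])
```

Under these assumed numbers the exponential agent gives the same answer at every distance, while the hyperbolic agent prefers the larger reward from far away and then flips to the smaller one as it approaches. That flip is the inconsistency a money pump would exploit.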

If we look at sunk cost literature, we must keep many things in mind. For example:

1. organization versus individuals

Sunk costs seem especially common in groups, as has been noticed since the beginning of sunk cost research7; et al 2000 found that culture influenced how much managers were willing to engage in hypothetical sunk costs (South & East Asian more so than North American), and a 2005 meta-analysis found that sunk cost was an issue, especially in software-related projects8, agreeing with a 2009 meta-analysis, Desai & Chulkov. 2011 interviewed principals at Californian schools, finding evidence of sunk cost bias. Wikipedia characterizes the Concorde incident as “regarded privately by the British government as a ‘commercial disaster’ which should never have been started, and was almost canceled, but political and legal issues had ultimately made it impossible for either government to pull out.” So at every point, coalitions of politicians and bureaucrats found it in their self-interest to keep the ball rolling.

A sunk cost for the government or nation as a whole is far from the same thing as a sunk cost for those coalitions—responsibility is diffused, which encourages sunk cost9. (If Kennedy or other US presidents could not withdraw from Vietnam or Iraq10 or Afghanistan11 due to perceived sunk costs12, perhaps the real problem was why Americans thought Vietnam was so important and why they feared looking weak or provoking another “who lost China” debate.) People commit sunk cost much more easily if someone else is paying, possibly in part because they are trying to still prove themselves right—an understandable and rational choice13! Other anecdotes from 1992 suggest sunk costs can be expensive corporate problems, but of course they are only anecdotes. Robert Campeau killed his company by escalating to an impossibly expensive acquisition of Bloomingdale’s, but would Campeau ever have been a good corporate raider without his aggressiveness? Can we say the Philip Morris-Procter & Gamble coffee price war was a mistake without a great deal more information? And was Bobby Fischer’s vendetta against the Soviet Union sunk cost, a rational response to Soviet collusion, or simply an early symptom of the apparent mental issues that saw him converted to and impoverished by a peculiar church and ultimately an internationally persecuted convict in Iceland?

And why were those coalitions in power in the first place? France and Britain have not found any better systems of government—systems which operate efficiently and are also Nash equilibriums, which successfully avoid any sunk costs in their myriads of projects and initiatives. In Joseph Tainter’s 1988 Collapse of Complex Societies, he argues that societies that overreach do so because it is impossible for the organizations and members to back down on complexity as long as there is still wealth to extract, even when margins are diminishing; when we accuse Pueblo Indians of sunk cost and causing their civilization to collapse14, we should keep in mind there may be no governance alternatives. Debacles like the Concorde may be necessary because the alternatives are even worse—decision paralysis or institutional paranoia15. Aggressive policing of projects for sunk-costs may wind up violating Chesterton’s fence if managers in later time periods are not very clear on why the projects were started in the first place and what their benefits will be. If we successfully ‘avoid’ sunk cost-style reasoning, does that mean we will avoid future Vietnams, at the expense of World War IIs?16 Goodhart’s Law comes to mind here, particularly because one study recorded how a bank’s attempt to eliminate sunk cost bias in its loan officers resulted in backfiring and evasion17; the overall results seem to still have been an improvement, but it remains a cautionary lesson.

Whatever pressures and feedback loops cause sunk cost fallacy in organizations may be completely different from the causes in individuals.

2. Non-monetary rewards and penalties

“Individual organisms are best thought of as adaptation-executers rather than as fitness-maximizers.” What does this mean in a sunk cost context? That we should be aware that humans may not take the model at its literal face value (without careful thought or strong encouragement to do so, anyway) and treat the situation as simply as ‘$10 versus $6 (and sunk cost)’. It may be more like ‘$10 (and your—non-existent—tribe’s condemnation of you as greedy, insincere, small-minded, and disloyal) versus $6 (and sunk cost)’18. If humans really are forced to think like this, then the modeling of payoffs simply doesn’t correspond with reality and of course our judgements will be wrong. Some assumptions spit out sunk costs as rational strategies19. This is not a trivial issue here (see the self-justification literature, eg. Brockner 1981) or in other areas; for example, providing the correct amount of rewards caused many differences in levels of animal intelligence to simply vanish—the rewards had been unequal (see my excerpts of the essay “If a Lion Could Talk: Animal Intelligence and the Evolution of Consciousness”).

3. Sunk costs versus investments and switching costs

Many choices for lower immediate marginal return are investments for greater future return. A single-stage model cannot capture this. Likewise, switching to new projects is not free, and the more expensive switches are, the fewer switches are optimal (eg. et al 2017).

4. Demonstrated harm

It’s not enough to suggest that a behavior may be harmful; it needs to be demonstrated. One might argue that an all-you-can-eat buffet will cause overeating and then long-term harm to health, but do experiments bear out that theory?

Indeed, meta-analysis of escalation effect studies suggests that sunk cost behavior is not one thing but reflects a variety of theorized behaviors & effects of varying rationality, ranging from protecting one’s image & principal-agent conflict to lack of information/options (2012), not all of which can be regarded as a simple cognitive bias to be fixed by greater awareness.

Animals

“It really is the hardest thing in life for people to decide when to cut their losses.”

“No, it’s not. All you have to do is to periodically pretend that you were magically teleported into your current situation. Anything else is the sunk cost fallacy.”

John, Overcoming Bias

Point 3 leads us to an interesting point about sunk cost: it has only been identified in humans, or primates at the widest20.

Arkes & Ayton 1999 (“The Sunk Cost and Concorde Effects: Are Humans Less Rational Than Lower Animals?”) claim (see also the very similar 1987):

The sunk cost effect is a maladaptive economic behavior that is manifested in a greater tendency to continue an endeavor once an investment in money, effort, or time has been made. The Concorde fallacy is another name for the sunk cost effect, except that the former term has been applied strictly to lower animals, whereas the latter has been applied solely to humans. The authors contend that there are no unambiguous instances of the Concorde fallacy in lower animals and also present evidence that young children, when placed in an economic situation akin to a sunk cost one, exhibit more normatively correct behavior than do adults. These findings pose an enigma: Why do adult humans commit an error contrary to the normative cost-benefit rules of choice, whereas children and phylogenetically humble organisms do not? The authors attempt to show that this paradoxical state of affairs is due to humans’ overgeneralization of the “Don’t waste” rule.

Specifically, in 1972, Trivers proposed that fathers are more likely to abandon children, and mothers less likely, because fathers invest fewer resources into children—mothers are, in effect, committing sunk cost fallacy in taking care of them. Dawkins & Carlisle 1976 pointed out that this is a misapplication of sunk cost, a version of point #3; Arkes & Ayton’s summary:

If parental resources become depleted, to which of the two offspring should nurturance be given? According to Trivers’s analysis, the older of the two offspring has received more parental investment by dint of its greater age, so the parent or parents will favor it. This would be an example of a past investment governing a current choice, which is a manifestation of the Concorde fallacy and the sunk cost effect. Dawkins and Carlisle suggested that the reason the older offspring is preferred is not because of the magnitude of the prior investment, as Trivers had suggested, but because of the older offspring’s need for less investment in the future. Consideration of the incremental benefits and costs, not of the sunk costs, compels the conclusion that the older offspring represents a far better investment for the parent to make.

Direct testing fails:

A number of experimenters who have tested lower animals have confirmed that they simply do not succumb to the fallacy (see, eg. Armstrong & Robertson, 1988; et al 1989; Maestripieri & Alleva, 1991; Wiklund, 199021).

A direct example of the Trivers vs Dawkins & Carlisle argument:

A prototypical study is that of Maestripieri and Alleva [1991], who tested the litter defense behavior of female albino mice. On the 8th day of a mother’s lactation period, a male intruder was introduced to four different groups of mother mice and their litters. Each litter of the first group had been culled at birth to four pups. Each litter of the second group had been culled at birth to eight pups. In the third group, the litters had been culled at birth to eight pups, but four additional pups had been removed 3 to 4 hr before the intruder was introduced. The fourth group was identical to the third except that the removed pups had been returned to the litter after only a 10-min absence.

The logic of the Maestripieri & Alleva 1991 study is straightforward. If each mother attended to past investment, then those litters that had eight pups during the prior 8 days should be defended most vigorously, as opposed to those litters that had only four pups. After all, having cared for eight pups represents a larger past investment than having cared for only four. On the other hand, if each mother attended to future costs and benefits, then those litters that had eight pups at the time of testing should be defended most vigorously, as opposed to those litters that had only four pups. The results were that the mothers with eight pups at the time of testing defended their litters more vigorously than did the mothers with four pups at the time of testing. The two groups of mothers with four pups did not differ in their level of aggression toward the intruder, even though one group of mothers had invested twice the energy in raising the young because they initially had to care for litters of eight pups.
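The competing predictions can be tabulated in a few lines of Python. This is only a sketch: it assumes defense effort simply tracks either the number of pups raised over the prior 8 days or the number present at testing, it treats the third group as having raised eight pups (the removal came only hours before the test), and the group labels are my shorthand rather than the authors’.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Litter:
    label: str
    pups_raised: int   # litter size over the prior 8 days (past investment)
    pups_at_test: int  # litter size when the intruder arrives (future payoff)


# Hypothetical shorthand for the four groups described in the quoted passage.
GROUPS = [
    Litter("4 throughout", 4, 4),
    Litter("8 throughout", 8, 8),
    Litter("8, four removed", 8, 4),
    Litter("8, four removed and returned", 8, 8),
]


def sign(x):
    """Return -1, 0, or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def distinguishing_pairs(groups=GROUPS):
    """Pairs of groups whose predicted ordering differs between the two hypotheses."""
    pairs = []
    for a, b in combinations(groups, 2):
        by_past = sign(a.pups_raised - b.pups_raised)      # sunk cost prediction
        by_future = sign(a.pups_at_test - b.pups_at_test)  # forward-looking prediction
        if by_past != by_future:
            pairs.append((a.label, b.label))
    return pairs


for pair in distinguishing_pairs():
    print(pair)
```

Every pair on which the two hypotheses disagree involves the group whose litter was cut shortly before testing, which is why that manipulation carries the weight of the experiment.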

Arkes & Ayton rebut 3 studies by arguing:

- 1980: digger wasps fight harder in proportion to how much food they contributed, rather than the total—because they are too stupid to count the total and only know how much they personally collected & stand to lose
- 1995: cichlid fish successful in breeding also fight harder against predators; because this may reflect an intrinsic greater healthiness and greater future opportunities, rather than sunk cost fallacy, an argument similar to 1984’s criticism of apparent sunk costs in economics22
- 1979: savannah sparrows defend their nests more fiercely as the nest approaches hatching; because as already pointed out, the closer to hatching, the less future investment is required for X chicks compared to starting all over

To which 3 we may add tundra swan feeding habits, which are predicted to be optimal by 201123, who remark “we show how optimization of Eq. 3 predicts the sunk-cost effect for certain scenarios; a common element of every case is a large initial cost.”

(Navarro & Fantino 2004, “The Sunk Cost Effect In Pigeons And Humans”, claim sunk cost effect in pigeons, but it’s hard to compare its strength to sunk cost in humans, and the setup is complex enough I’m not sure it is sunk cost.)

Humans

Children

Arkes & Ayton cite 2 studies finding that committing sunk cost bias increases with age—as in, children do not commit it. They also cite 2 studies saying that

1997 tested children at three different age groups (5–6, 8–9, and 11–12) with the following modification of the Tversky & Kahneman 1981 experiment …the older children provided data analogous to those found by Tversky & Kahneman 1981: When the money was lost, the majority of the respondents decided to buy a ticket. On the other hand, when the ticket was lost, the majority decided not to buy another ticket. This difference was absent in the youngest children. Note that it is not the case that the youngest children were responding randomly. They showed a definite preference for purchasing a new ticket whether the money or the ticket had been lost. Like the animals that appear to be immune to the Concorde fallacy, young children seemed to be less susceptible than older children to this variant of the sunk cost effect. The results of the study by Krouse (1986) corroborate this finding: Compared with adult humans, young children, like animals, seem to be less susceptible to the Concorde fallacy/sunk cost effect.

… Perhaps the impulsiveness of young children (Mischel, Shoda, & Rodriguez, 1989) fostered their desire to buy a ticket for the merry-go-round right away, regardless of whether a ticket or money had been lost. However, this alternative interpretation does not explain why the younger children said that they would buy the ticket less often than the older children in the lost-money condition. Nor does this explanation explain the greater adherence to normative rules of decision making by younger children compared with adults in cases where impulsiveness is not an issue (see, eg. Jacobs & Potenza, 1991; Reyna & Ellis, 1994).

I think Arkes & Ayton are probably wrong about children. Those 2 early studies can be criticized easily24, and other studies point the opposite way. et al 1993 asked poor and rich kids (age 5–12) questions including an Arkes & Blumer 1985 question, and found, in their first study no difference by age, ~30% of the 101 kids committing sunk cost and another ~30% unsure; in their second, they asked 2 questions, with ~50% committing sunk cost—and responses on the 2 questions minimally correlated (r = 0.17). 2004 found that correct (non-sunk cost) responses went up with age (age 5–12, 16%; 5–16, 27%; and adults 37%). Bruine de Bruin et al 2007 found older adults more susceptible than young adults to some tested fallacies, but that sunk cost resistance increased somewhat with age. et al 2008 studied 75 college age students, finding small or non-statistically-significant results for IQ (as did other studies, see later), education, and age; still older adults (60+) beat their college-age peers at avoiding sunk cost in both Strough et al 2008 & et al 2011.

(Children also violate transitivity of choices & are more hyperbolic than adults, which is hardly normative.25)

Uses

Learning & Memory

18. If the fool would persist in his folly he would become wise.

46. You never know what is enough unless you know what is more than enough.

Felix Hoeffler in his 2008 paper “Why humans care about sunk costs while (lower) animals don’t: An evolutionary explanation” takes the previous points at face value and asks how sunk cost might be useful for humans; his answer is that sunk cost forfeits some total gains/utility—just as our simple model indicated—but in exchange for faster learning, an exchange motivated by humans’ well-known risk aversion and dislike of uncertainty26. It is harder to learn the value of choices if one is constantly breaking off before completion to make other choices, or realize any value at all (the classic exploration vs exploitation problem, amusingly illustrated in Clarke’s story “Superiority”).
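The cost of constantly breaking off can be shown with a toy agent, sketched here in Python. Everything in it is an assumption for illustration: each project needs 5 steps of work and is truly worth 10, a fresh idea arrives every step and is perceived at `optimism` times its true value, and the agent compares total values only, giving no credit for work already done (a deliberately crude rule, since a part-finished project really does need less further investment).

```python
def projects_finished(optimism, steps=100, work_needed=5, true_value=10.0):
    """Count projects completed by an agent that always takes whatever looks best.

    The project under way is seen at its true value; each new idea is seen
    at optimism * true_value. Past work never enters the comparison.
    """
    finished = 0
    progress = 0  # steps of work done on the current project
    for _ in range(steps):
        new_idea_looks_better = optimism * true_value > true_value
        if new_idea_looks_better:
            progress = 0  # abandon the current project and start the new one
        progress += 1
        if progress == work_needed:
            finished += 1
            progress = 0
    return finished


print("unbiased agent:", projects_finished(optimism=1.0))
print("over-optimistic agent:", projects_finished(optimism=1.2))
```

With no optimism the agent works through one project after another; with even a modest bias toward new ideas it restarts every step and completes nothing, so it never learns what a finished project is actually worth.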

One could imagine a not too intelligent program which is, like humans, over-optimistic about the value of new projects; it always chooses the highest value option, of course, to avoid committing sunk cost bias, but oddly enough, it never seems to finish projects because better opportunities seem to keep coming along… In the real world, learning is valuable and one has many reasons to persevere even past the point one regards a decision as a mistake; McAfee et al 2007 (remember the exponential vs hyperbolic discounting example):

Consider a project that may take an unknown expenditure to complete. The failure to complete the project with a given amount of investment is informative about the expected amount needed to complete it. Therefore, the expected additional investment required for fruition will be correlated with the sunk investment. Moreover, in a world of random returns, the realization of a return is informative about the expected value of continuing a project. A large loss, which leads to a rational inference of a high variance, will often lead to a higher option value because option values tend to rise with variance. Consequently, the informativeness of sunk investments is amplified by consideration of the option value…Moreover, given limited time to invest in projects, as the time remaining shrinks, individuals have less time over which to amortize their costs of experimenting with new projects, and therefore may be rationally less likely to abandon current projects…Past investments in a given course of action often provide evidence about whether the course of action is likely to succeed or fail in the future. Other things equal, a greater investment usually implies that success is closer at hand. Consider the following simple model…The only case in which the size of the sunk investment cannot affect the firm’s rational decision about whether to continue investing is the rather special case in which the hazard is exactly constant.
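The role of the hazard rate in that passage can be checked numerically. The sketch below is not McAfee et al’s model; it is a minimal Python illustration under my own assumptions, where T is the total investment a project turns out to need, the “hazard” is the chance that the next unit of investment completes it, and the three distributions are hypothetical examples of constant, rising, and falling hazards.

```python
def expected_remaining(pmf, sunk):
    """E[T - sunk | T > sunk], where pmf[t - 1] = P(T = t) for t = 1, 2, ..."""
    tail = [(t - sunk, p) for t, p in enumerate(pmf, start=1) if t > sunk]
    mass = sum(p for _, p in tail)
    return sum(extra * p for extra, p in tail) / mass


def geometric(hazard, horizon=2000):
    """P(T = t) when every unit of investment succeeds with the same probability.

    The horizon truncates the distribution; 2000 terms leaves negligible mass.
    """
    return [hazard * (1 - hazard) ** (t - 1) for t in range(1, horizon + 1)]


constant = geometric(0.2)   # constant hazard: past failures are uninformative
rising = [1 / 20] * 20      # uniform on 1..20: success gets closer with each unit spent
falling = [0.5 * easy + 0.5 * hard  # mix of easy and hard projects:
           for easy, hard in zip(geometric(0.5), geometric(0.05))]  # failure suggests "hard"

for name, pmf in [("constant", constant), ("rising", rising), ("falling", falling)]:
    print(name, [round(expected_remaining(pmf, sunk), 2) for sunk in (0, 5, 10)])
```

Only in the constant-hazard case is the expected further investment the same whatever has already been spent. With a rising hazard a large sunk investment signals that completion is near, and with a falling hazard it signals that the project is probably one of the hard ones, so in both cases the sunk amount is legitimate evidence about the future.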

If this model is applicable to humans, we would expect to see a cluster of results related to age, learning, teaching, difficulty of avoiding even with training or education, minimal avoidance with greater intelligence, completion of tasks/projects, largeness of sums (the risks most worth avoiding), and competitiveness of environment. (As well as occasional null results like 2006.) And we do! Many otherwise anomalous results snap into focus with this suggestion:

- information is worth most to those who have the least: as we previously saw, the young commit sunk cost more than the old
- in situations where participants can learn and update, we should expect sunk cost to be attenuated or disappear; we do see this (eg. et al 200727, et al 199028, et al 199929, 198130, 198631, et al 199132, 201233)
- the noisier (higher variance) feedback on profitability was, the more data it took before people gave up (et al 1998, et al 2003)
- sunk costs were supported more when subjects were given justifications about learning to make better decisions or whether teachers/students were involved (199534)
- extensive economic training does not stop economics professors from committing sunk cost, and students can be quickly educated to answer sunk cost questions correctly, but with little carry-through to their lives35, and researchers in the area argue about whether particular setups even represent sunk costs at all, on their own merits36 (but don’t feel smug, you probably wouldn’t do much better if you took quizzes on it either)
- when measured, avoiding sunk cost has little correlation with intelligence37—and one wonders how much of the correlation comes from intelligent people being more likely to try to conform to what they have learned is economics orthodoxy
- a ‘nearly completed’ effect dominates ‘sunk cost’ (1993, 1998, 2002, et al 2007)
- for example, the larger the proportion, the more costs were sunk (1991)

- it is surprisingly hard to find clear-cut real-world non-government examples of serious sunk costs; the commonly cited non-historical examples do not stack up:
  - Staw & Hoang 199538 studied the NBA to see whether high-ranked but underperforming players were over-used by coaches, a sunk cost.

    Unfortunately, they do not track the over-use down to actual effects on win-loss or other measures of team performance, effects which are unlikely to be very large since the overuse amounts to ~10–20 minutes a game. Further, “The econometrics and behavioral economics of escalation of commitment: a re-examination of Staw and Hoang’s NBA data” (Camerer & Weber 1999), claims to do a better analysis of the NBA data and find the effect is actually weaker. As usual, there are multiple alternatives39.
  - McCarthy et al 199340 is a much-cited correlational study finding that small entrepreneurs invest further in companies they founded (rather than bought) when the company apparently does poorly; but they acknowledge that there are financial strategies clouding the data, and like Staw & Hoang, do not tie the small effect—which appears only for a year or two, as the entrepreneurs apparently learn—to actual negative outcomes or decrease in expected value.
  - similar to McCarthy et al 1993, et al 200941 tracked ‘exit routes’ for young companies such as being bought, merged, or bankrupt—but again, they did not tie apparent sunk cost to actual poor performance.
  - in 2 studies42, Africans did not engage in sunk cost with insecticide-treated bed nets—whether they paid a subsidized price or received the nets free did not affect use levels, and in one study, this null effect happened despite the same household engaging in sunk cost for hypothetical questions
  - Internet users may commit sunk cost in browsing news websites43 (but is that serious?)
  - an unpublished 2001 paper (Barron et al “The Escalation Phenomenon and Executive Turnover: Theory and Evidence”) reportedly finds that projects are ‘significantly more likely’ to be canceled when their top managers leave, suggesting a sunk cost effect of substantial size; but it is unclear how much money is at stake or whether this is—remember point #1—power politics44
  - sunk cost only weakly correlates with suboptimal behavior (much less demonstrates causation):

    2005 and Bruine de Bruin et al 2007 compiled a number of questions for several cognitive biases—including sunk cost—and then asked questions about impulsiveness, number of sexual partners, etc, while the latter developed a 34-item index of bad decisions/outcomes (the DOI): ever rent a movie you didn’t watch, get expelled, file for bankruptcy, forfeit your driver’s license, miss an airplane, bounce a check, etc. Then they ran correlations. They replicated the minimal correlation of sunk cost avoidance with IQ, but sunk cost (and ‘path independence’) exhibited fascinating behaviors compared to the other biases/fallacies measured: sunk cost & path independence correlated minimally with the other tested biases/fallacies, Cronbach’s alphas were almost uselessly low, education did not help much, age helped some, and sunk cost had low correlations with the risky behavior or the DOI (eg. after controlling for decision-making styles, 0.13).
  - et al 1993 found tests of normative economic reasoning, including sunk cost questions, correlated with increased academic salaries, even for non-economic professors like biologists & humanists (but the effect size & causality are unclear)
- Dissociation in hypotheticals—being told a prior manager made decisions—does not always counteract effects (2006)

Sunk costs may also reflect imperfect memory about what information one had in the past; one may reason that one’s past self had better information about all the forgotten details that went into a decision to make some investments, and respect their decision, thus appearing to honor sunk costs (2011).

Countering Hyperbolic Discounting?

Use barbarians against barbarians.45

Henry Kissinger, On China 2011

The classic kicker of hyperbolic discounting is that it induces temporal inconsistency—your far-sighted self is able to calculate what is best for you, but then your near-sighted self screws it all up by changing tacks. Knowing this, it may be a good idea to not work on your ‘bad’ habit of being overconfident about your projects46 or engaging in planning fallacy, since at least they will counteract a little the hyperbolic discounting; in particular, you should distrust near-term estimates of the fun or value of activities when you have not learned anything very important47. We could run the same argument but instead point to the psychology research on the connection between blood sugar levels and ‘willpower’; if it takes willpower to start a project but little willpower to cease working on or quit a project, then we would expect our decisions to quit to be correlated with low willpower and blood sugar levels, and hence to be ignored!

It’s hard to oppose these issues: humans are biased hardware. If one doesn’t know exactly why a bias is bad, countering a bias may simply let other biases hurt you. Anecdotally, a number of people have problems with quite the opposite of sunk cost fallacy—overestimating the marginal value of the alternatives and discounting how little further investment is necessary48, and people try to commit themselves by deliberately buying things they don’t value.49 (This seems doubly plausible given the high value of Conscientiousness/Grit50—with marginal return high enough that it suggests most people do not commit long-term nearly enough, and if sunk cost is the price of reaping those gains…)

Thoughtlessness: the Real Bias

One of the known ways to eliminate sunk cost bias is to be explicit and emphasize the costs of continuing (Northcraft and Neale, 1986, Tan and Yates, 1995, et al 198251 & conversely 198152, McCain 1986), as well as setting explicit budgets (1992, 199553, et al 1997). Fancy tools don’t add much effectiveness54.

This, combined with the previous learning-based theory of sunk cost, suggests something to me: sunk cost is a case of the ur-cognitive bias, failure to activate System II. One doesn’t intrinsically over-value something due to past investment, one fails to think about the value at all.

Additional Links

- “Escalation of Commitment Behavior: a critical, prescriptive historiography”, Rice 2010 (PhD thesis)
- “Sunk Cost as a Self-Management Device”, et al 2018

External Links

- “World: Asia-Pacific: US demands ‘killing fields’ trial”, BBC 1998-12-29

- And the Concorde definitely did not succeed commercially: operating it could barely cover costs, its minimal profits never came close to the total R&D or opportunity costs, its last flight was in 2003 (a shockingly low lifetime in an industry which typically tries to operate individual planes, much less entire designs, for decades), and as of 2017, the Concorde still has no successors in its niche or apparent upcoming successors despite great technological progress & global economic development and the notorious growth in wealth of the “1%”.

- Arkes & Blumer 1985, “The psychology of sunk cost”:

The sunk cost effect is manifested in a greater tendency to continue an endeavor once an investment in money, effort, or time has been made. Evidence that the psychological justification for this behavior is predicated on the desire not to appear wasteful is presented. In a field study, customers who had initially paid more for a season subscription to a theater series attended more plays during the next 6 months, presumably because of their higher sunk cost in the season tickets. Several questionnaire studies corroborated and extended this finding. It is found that those who had incurred a sunk cost inflated their estimate of how likely a project was to succeed compared to the estimates of the same project by those who had not incurred a sunk cost. The basic sunk cost finding that people will throw good money after bad appears to be well described by prospect theory (D. Kahneman & A. Tversky, 1979, Econometrica, 47, 263–291). Only moderate support for the contention that personal involvement increases the sunk cost effect is presented. The sunk cost effect was not lessened by having taken prior courses in economics. Finally, the sunk cost effect cannot be fully subsumed under any of several social psychological theories.

As an example of the sunk cost effect, consider the following example [from Thaler 1980]. A man wins a contest sponsored by a local radio station. He is given a free ticket to a football game. Since he does not want to go alone, he persuades a friend to buy a ticket and go with him. As they prepare to go to the game, a terrible blizzard begins. The contest winner peers out his window over the arctic scene and announces that he is not going, because the pain of enduring the snowstorm would be greater than the enjoyment he would derive from watching the game. However, his friend protests, ‘I don’t want to waste the twelve dollars I paid for the ticket! I want to go!’ The friend who purchased the ticket is not behaving rationally according to traditional economic theory. Only incremental costs should influence decisions, not sunk costs. If the agony of sitting in a blinding snowstorm for 3 h is greater than the enjoyment one would derive from trying to see the game, then one should not go. The $12 has been paid whether one goes or not. It is a sunk cost. It should in no way influence the decision to go. But who among us is so rational?

Our final sample thus had eighteen no-discount, nineteen $2 discount, and seventeen $7 discount subjects. Since the ticket stubs were color coded, we were able to collect the stubs after each performance and determine how many persons in each group had attended each play…We performed a 3 (discount: none, $2, $7) × 2 (half of season) analysis of variance on the number of tickets used by each subject. The latter variable was a within-subjects factor. It was also the only significant source of variance, F(1,51) = 32.32, MSe = 1.81, (p < 0.001). More tickets were used by each subject on the first five plays (3.57) than on the last five plays (2.09). We performed a priori tests on the number of tickets used by each of the three groups during the first half of the theater season. The no-discount group used significantly more tickets (4.11) than both the $2 discount group (3.32) and the $7 discount group (3.29), t = 1.79, 1.83, respectively, p’s < .05, one tailed. The groups did not use significantly different numbers of tickets during the last half of the theater season (2.28, 1.84, 2.18, for the no-discount, $2 discount, and $7 discount groups, respectively). Conclusion. Those who had purchased theater tickets at the normal price used more theater tickets during the first half of the season than those who purchased tickets at either of the two discounts. According to rational economic theory, after all subjects had their ticket booklet in hand, they should have been equally likely to attend the plays.

…A second feature of prospect theory pertinent to sunk costs is the certainty effect. This effect is manifested in two ways. First, absolutely certain gains (P = 1) are greatly overvalued. By this we mean that the value of certain gains is higher than what would be expected given an analysis of a person’s values of gains having a probability less than 1.0. Second, certain losses (P = 1.0) are greatly undervalued (ie. further from zero). The value is more negative than what would be expected given an analysis of a person’s values of losses having a probability less than 1.0. In other words, certainty magnifies both positive and negative values. Note that in question 3A the decision not to complete the plane results in a certain loss of the amount already invested. Since prospect theory states that certain losses are particularly aversive, we might predict that subjects would find the other option comparatively attractive. This is in fact what occurred. Whenever a sunk cost dilemma involves the choice of a certain loss (stop the waterway project) versus a long shot (maybe it will become profitable by the year 2500), the certainty effect favors the latter option.

…Fifty-nine students had taken at least one course; sixty-one had taken no such course. All of these students were administered the Experiment 1 questionnaire by a graduate student in psychology. A third group comprised 61 students currently enrolled in an economics course, who were administered the Experiment 1 questionnaire by their economics professor during an economics class. Approximately three fourths of the students in this group had also taken one prior economics course. All of the economics students had been exposed to the concept of sunk cost earlier that semester both in their textbook (Gwartney & Stroup, 1982, p. 125 [Microeconomics: Private and public choice]) and in their class lectures. Results. Table 1 contains the results. The χ² analysis does not approach significance. Even when an economics teacher in an economics class hands out a sunk cost questionnaire to economics students, there is no more conformity to rational economic theory than in the other two groups. We conclude that general instruction in economics does not lessen the sunk cost effect. In a recent analysis of entrapment experiments, 1984 concluded that continued investment in many of them does not necessarily represent an economically irrational behavior. For example, continued waiting for the bus will increase the probability that one’s waiting behavior will be rewarded. Therefore there is an eminently rational basis for continued patience. Hence this situation is not a pure demonstration of the sunk cost effect. However, we believe that some sunk cost situations do correspond to entrapment situations. The subjects who ‘owned’ the airline company would have endured continuing expenditures on the plane as they sought the eventual goal of financial rescue. This corresponds to the Brockner et al. entrapment situation. However, entrapment is irrelevant to the analysis of all our other studies.
For example, people who paid more money last September for the season theater tickets are in no way trapped. They do not incur small continuous losses as they seek an eventual goal. Therefore we suggest that entrapment is relevant only to the subset of sunk cost situations in which continuing losses are endured in the hope of later rescue by a further investment.

According to Thomas 1981 [Microeconomic applications: Understanding the American economy], one person who recognized it as an error was none other than Thomas A. Edison. In the 1880s Edison was not making much money on his great invention, the electric lamp. The problem was that his manufacturing plant was not operating at full capacity because he could not sell enough of his lamps. He then got the idea to boost his plant’s production to full capacity and sell each extra lamp below its total cost of production. His associates thought this was an exceedingly poor idea, but Edison did it anyway. By increasing his plant’s output, Edison would add only 2% to the cost of production while increasing production 25%. Edison was able to do this because so much of the manufacturing cost was sunk cost. It would be present whether or not he manufactured more bulbs. [the Europe price > marginal cost] Edison then sold the large number of extra lamps in Europe for much more than the small added manufacturing costs. Since production increases involved negligible new costs but substantial new income, Edison was wise to increase production. While Edison was able to place sunk costs in proper perspective in arriving at his decision, our research suggests that most of the rest of us find that very difficult to do.
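The arithmetic behind the Edison anecdote can be sketched in a few lines of Python. Only the two percentages (2% more cost, 25% more output) come from the quoted passage; the baseline of 100 lamps costing 100 in total, the function names, and the sample prices are hypothetical values chosen for illustration.

```python
def marginal_cost_per_unit(base_cost, base_units, cost_increase, output_increase):
    """Cost of each extra unit when total cost rises by `cost_increase`
    and output rises by `output_increase` (both given as fractions)."""
    extra_cost = base_cost * cost_increase
    extra_units = base_units * output_increase
    return extra_cost / extra_units

# Hypothetical baseline: 100 lamps costing 100 in total (average cost 1.00 each).
base_cost, base_units = 100.0, 100.0
average_cost = base_cost / base_units

# Figures from the quoted passage: +2% cost buys +25% output.
marginal_cost = marginal_cost_per_unit(base_cost, base_units, 0.02, 0.25)

def extra_profit(price):
    """Profit added by selling only the extra lamps at `price` each."""
    extra_units = base_units * 0.25
    return extra_units * (price - marginal_cost)

print("average cost per lamp:", average_cost)
print("marginal cost per extra lamp:", round(marginal_cost, 4))
print("added profit at a price of 0.50:", round(extra_profit(0.50), 2))
```

Under these assumptions, any price above the marginal cost of the extra lamps adds profit, even a price well below the average cost per lamp, which is the comparison Edison's associates were reportedly making.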

Friedman et al 2006 criticism of Arkes:

This is consistent with the sunk cost fallacy, but the evidence is not as strong as one might hope. The reported significance levels apparently assume that (apart from the excluded couples) all attendance choices are independent. The authors do not explain why they divided the season in half, nor do they report the significance levels for the entire season (or first quarter, etc.). The data show no significant difference between the small and large discount groups in the first half season nor among any of the groups in the second half season. We are not aware of any replication of this field experiment.↩︎

-

Davis’s complaint is a little odd, inasmuch as economics textbooks do apparently discuss sunk costs; Steele 1996 gives examples back to 1910, or from “Do Sunk Costs Matter?”, McAfee et al 2007:

Introductory textbooks in economics present this as a basic principle and a deep truth of rational decision-making (Frank and Bernanke, 2006, p. 10, and Mankiw, 2004, p. 297).↩︎

-

Popularized discussions of Farmer & Geanakoplos 2009:

-

“‘Discounting’ the future cost of climate change”, Science News

-

“Einstein on Wall Street, Time-Money Continuum: Mark Buchanan”, WSJ column

-

“Discounting Details”, on Mark Buchanan’s blog “The Physics of Finance”

-

-

-

Some quotes from the paper:

Conventional economics supposes that agents value the present vs. the future using an exponential discounting function. In contrast, experiments with animals and humans suggest that agents are better described as hyperbolic discounters, whose discount function decays much more slowly at large times, as a power law. This is generally regarded as being time inconsistent or irrational. We show that when agents cannot be sure of their own future one-period discount rates, then hyperbolic discounting can become rational and exponential discounting irrational. This has important implications for environmental economics, as it implies a much larger weight for the far future.

…Why should we discount the future? Bohm-Bawerk (1889, 1923) and Fisher (1930) argued that men were naturally impatient, perhaps owing to a failure of the imagination in conjuring the future as vividly as the present. Another justification for declining Ds(τ) in τ, given by Rae (1834, 1905), is that people are mortal, so survival probabilities must enter the calculation of the benefits of future potential consumption. There are many possible reasons for discounting, as reviewed by Dasgupta (2004, 2008). Most economic analysis assumes exponential discounting Ds(τ) = D(τ) = exp(−rτ), as originally posited by Samuelson (1937) and put on an axiomatic foundation by Koopmans (1960). A natural justification for exponential discounting comes from financial economics and the opportunity cost of foregoing an investment. A dollar at time s can be placed in the bank to collect interest at rate r, and if the interest rate is constant, it will generate exp(r(t − s)) dollars at time t. A dollar at time t is therefore equivalent to exp(−r(t − s)) dollars at time s. Letting τ = t − s, this motivates the exponential discount function Ds(τ) = D(τ) = exp(−rτ), independent of s.
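The banking argument in this passage can be checked numerically. The following is a minimal sketch, assuming a constant rate `r = 0.04` (a hypothetical value, not one taken from the paper); the function names `grow` and `discount` are likewise illustrative.

```python
import math

def grow(amount, r, s, t):
    """Value at time t of `amount` banked at time s at constant rate r."""
    return amount * math.exp(r * (t - s))

def discount(tau, r):
    """Exponential discount function D(tau) = exp(-r * tau)."""
    return math.exp(-r * tau)

r = 0.04  # hypothetical constant interest rate, not taken from the paper

# Banking D(tau) dollars at time s grows back to one dollar at time t,
# and the factor depends only on the gap tau = t - s, not on s itself.
for s, t in [(0, 20), (10, 30), (50, 70)]:
    tau = t - s
    banked = discount(tau, r)
    print(s, t, round(banked, 4), round(grow(banked, r, s, t), 4))
```

The same discount factor appears for every starting time `s` with the same gap, which is the "independent of s" property the passage describes; it also implies that discounting over two consecutive intervals multiplies, `D(a + b) = D(a) * D(b)`.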

…For roughly the first eighty years the certainty equivalent discount function for the geometric random walk stays fairly close to the exponential, but afterward the two diverge substantially, with the geometric random walk giving a much larger weight to the future. A comparison using more realistic parameters is given in Table 1. For large times the difference is dramatic.

Farmer & Geanakoplos 2009: Table 1, comparing geometric random walk (GRW) vs exponential discounting over increasing time periods showing that GRW eventually decays much slower.

| Year | GRW | Exponential |
| --- | --- | --- |
| 20 | 0.462 | 0.456 |
| 60 | 0.125 | 0.095 |
| 100 | 0.051 | 0.020 |
| 500 | 0.008 | 2 × 10⁻⁹ |
| 1000 | 0.005 | 4 × 10⁻¹⁸ |
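The qualitative contrast in Table 1 can be illustrated with a much simpler model than the paper's. The sketch below does not implement the geometric random walk and will not reproduce the table's numbers; it assumes instead that the constant rate is unknown and equally likely to be 1% or 7% (hypothetical values), and compares the expected discount factor with exponential discounting at the mean rate of 4%.

```python
import math

def exponential(tau, r):
    """Discount factor under a known constant rate r."""
    return math.exp(-r * tau)

def certainty_equivalent(tau, rates, probs):
    """Expected discount factor when the constant rate is uncertain:
    the probability-weighted average of exp(-r * tau) over scenarios."""
    return sum(p * math.exp(-r * tau) for r, p in zip(rates, probs))

# Hypothetical two-scenario uncertainty; NOT the paper's GRW parameters.
rates, probs = [0.01, 0.07], [0.5, 0.5]
mean_rate = sum(p * r for r, p in zip(rates, probs))

for tau in (20, 100, 500, 1000):
    ce = certainty_equivalent(tau, rates, probs)
    ex = exponential(tau, mean_rate)
    print(f"{tau:>5}  uncertain-rate: {ce:.3e}  exponential: {ex:.3e}")
```

Because `exp(-r * tau)` is convex in `r`, averaging over scenarios always gives at least as much weight to the future as discounting at the average rate, and at long horizons the low-rate scenario dominates the average. That is the same direction of effect as in the table, obtained here under far cruder assumptions.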

…What this analysis makes clear, however, is that the long term behavior of valuations depends extremely sensitively on the interest rate model. The fact that the present value of actions that affect the far future can shift from a few percentage points to infinity when we move from a constant interest rate to a geometric random walk calls seriously into question many well regarded analyses of the economic consequences of global warming. … no fixed discount rate is really adequate—as our analysis makes abundantly clear, the proper discounting function is not an exponential.

-

For example, Staw 1981, “The Escalation of Commitment to a Course of Action”:

A second way to explain decisional errors is to attribute a breakdown in rationality to interpersonal elements such as social power or group dynamics. Pfeffer [1977] has, for example, outlined how and when power considerations are likely to outweigh more rational aspects of organizational decision making, and Janis [1972] has noted many problems in the decision making of policy groups. Cohesive groups may, according to Janis, suppress dissent, censor information, create illusions of invulnerability, and stereotype enemies. Any of these by-products of social interaction may, of course, hinder rational decision making and lead individuals or groups to decisional errors.↩︎

-

2005; from the abstract:

Using meta-analysis, we analyzed the results of 20 sunk cost experiments and found: (1) a large effect size associated with sunk costs, (2) variability of effect sizes across experiments that was larger than pure subject-level sampling error, and (3) stronger effects in experiments involving IT projects as opposed to non-IT projects.

Background on why one might expect effects with IT in particular:

Although project escalation is a general phenomenon, IT project escalation has received considerable attention since Keil and his colleagues began studying the phenomenon (Keil, Mixon et al 1995). Survey data suggest that 30–40% of all IT projects involve some degree of project escalation (Keil, Mann, and Rai 2000). To study the role of sunk cost in software project escalation, Keil et al 1995 conducted a series of lab experiments, in which sunk costs were manipulated at various levels, and subjects decided whether or not to continue an IT project facing negative prospects. This IT version of the sunk cost experiment was later replicated across cultures (Keil, Tan et al 2000), with group decision makers (Boonthanom 2003), and under different de-escalation situations (Heng, Tan et al 2003). These experiments demonstrated the sunk cost effect to be significant in IT project escalation.

The “real option” defense of sunk cost behavior has been suggested for software projects (2006)↩︎

-

“Diffusion of Responsibility: Effects on the Escalation Tendency”, Whyte 1991 (see also 1993):

In a laboratory study, the possibility was investigated that group decision making in the initial stages of an investment project might reduce the escalation tendency by diffusing responsibility for initiating a failing project. Support for this notion was found. Escalation effects occurred less frequently and were less severe among individuals described as participants in a group decision to initiate a failing course of action than among individuals described as personally responsible for the initial decision. Self-justification theory was found to be less relevant after group than after individual decisions. Because most decisions about important new policies in organizations are made by groups, these results indicate a gap in theorizing about the determinants of escalating commitment for an important category of escalation situations.

…The impact of personal responsibility on persistence in error has been replicated several times (eg. Bazerman, Beekun, & Schoorman, 1982; Caldwell & O’Reilly, 1982; Staw, 1976; Staw & Fox, 1977).↩︎

-

Both of them, but the first one on the Iraqi side, specifically Saddam Hussein; Bazerman & Neale 1992:

Similarly, it could be argued that in the Iraqi/Kuwait conflict, Iraq (Hussein) had the information necessary to rationally pursue a negotiated settlement. In fact, early on in the crisis, he was offered a package for settlement that was far better than anything that he could have expected through a continued conflict. The escalation literature accurately predicts that the initial “investment” incurred in invading Kuwait would lead Iraq to a further escalation of its commitment not to compromise on the return of Kuwait.↩︎

-

Kelly 2004:

The physicist Eugene Demler informs me that exactly parallel arguments were quite commonly made in the Soviet Union in the late 1980s in an attempt to justify continued Soviet involvement in Afghanistan.↩︎

-

Dawkins & Carlisle 1976 sarcastically remark:

↩︎…The idea has been influential4, and it appeals to economic intuition. A government which has invested heavily in, for example, a supersonic airliner, is understandably reluctant to abandon it, even when sober judgement of future prospects suggests that it should do so. Similarly, a popular argument against American withdrawal from the Vietnam war was a retrospective one: ‘We cannot allow those boys to have died in vain’. Intuition says that previous investment commits one to future investment.

-

The former can be found in Bazerman, Giuliano, & Appelman, 1984, Davis & Bobko, 1986, & Staw, 1976 among other studies cited here. The latter is often called ‘self-justification’ or the ‘justification effect’ (eg. 1992).

Self-justification is, of course, in many contexts a valuable trait to have; is the following an error, or business students demonstrating their precocious understanding of an invaluable bureaucratic in-fighting skill? Bazerman et al 1982, “Performance evaluation in a dynamic context: A laboratory study of the impact of prior commitment to the ratee” (see also Caldwell & O’Reilly, 1982; Staw, 1976; Staw & Fox, 1977):

A dynamic view of performance evaluation is proposed that argues that raters who are provided with negative performance data on a previously promoted employee will subsequently evaluate the employee more positively if they, rather than their predecessors, made the earlier promotion decision. A total of 298 business majors participated in the study. The experimental group made a promotion decision by choosing among three candidates, whereas the control group was told that the decision had been made by someone else. Both groups evaluated the promoted employee’s performance after reviewing 2 years of data. The hypothesized escalation of commitment effect was observed in that the experimental group consistently evaluated the employee more favorably, provided larger rewards, and made more optimistic projections of future performance than did the control group.↩︎

-

And it is difficult to judge from a distance when sunk cost has occurred: what exactly else are the Indians going to invest in? Remember our exponential discounting example. As long as various settlements are not running at an outright loss or are being subsidized, how steep an opportunity cost do they really face? From the paper:

By the end of the occupation in the late-A.D. 800s there is evidence of depletion of wood resources, piñon seeds, and animals (reviewed by Kohler 1992). Following the collapse of these villages, the Dolores area was never reoccupied in force by Puebloan farmers. A second similar case comes from nearby Sand Canyon Locality west of Cortez, Colorado, intensively studied by the Crow Canyon Archaeological Center over the last 15 years (Lipe 1992). Here the main occupation is several hundred years later than in Dolores, but the patterns of construction in hamlets versus villages are similar (fig. 4, bottom). The demise of the two villages contributing dated construction events to fig. 4 (bottom) coincides with the famous depopulation of the Four Corners region of the U.S. Southwest. There is strong evidence for declining availability of protein in general and large game animals in particular, and increased competition for the best agricultural land, during the terminal occupation (reviewed by Kohler 2000). We draw a final example from an intermediate period. The most famous Anasazi structures, the “great houses” of Chaco Canyon, may follow a similar pattern. Windes & Ford 1996 show that early construction episodes (in the early A.D. 900s) in the canyon great houses typically coincide with periods of high potential agricultural productivity, but later construction continues in both good periods and bad, particularly in the poor period from ca. A.D. 1030–1050.

Certainly there is strong evidence of diminishing marginal returns—evidence for the Tainter thesis—but diminishing marginal returns is not sunk cost fallacy. Given the general environment, and given that there was a ‘collapse’, arguably there was no opportunity cost to remaining there. How would the Indians have become better off if they abandoned their villages, given that there is little evidence that other places were better off in that period of great droughts and the observation that they would need to make substantial capital investments wherever they went?↩︎

-

Janssen & Scheffer 2004:

In fact, escalation of commitment is found in group decision making ( et al 1984). Members of a group strive for unanimity. A typical goal for political decisions within small-scale societies is to reach consensus (1996). Once unanimity is reached, the easiest way to protect it is to stay committed to the group’s decision (Bazerman et al 1984, Janis 1972 [Victims of groupthink]). Thus, when the group is faced with a negative feedback, members will not suggest abandoning the earlier course of action, because this might disrupt the existing unanimity.↩︎

-

McAfee et al 2007:

But there are also examples of people who succeeded by not ignoring sunk costs. The same “we-owe-it-to-our-fallen-countrymen” logic that led Americans to stay the course in Vietnam also helped the war effort in World War II. More generally, many success stories involve people who at some time suffered great setbacks, but persevered when short-term odds were not in their favor because they “had already come too far to give up now.” Columbus did not give up when the shores of India did not appear after weeks at sea, and many on his crew were urging him to turn home (see Olson, 1967 [The Northmen, Columbus and Cabot, 985–1503], for Columbus’ journal). Jeff Bezos, founder of Amazon.com, did not give up when Amazon’s loss totaled $1.4 billion in 2001, and many on Wall Street were speculating that the company would go broke (see Mendelson and Meza, 2001).↩︎

-

“Banking on Commitment: Intended and Unintended Consequences of an Organization’s Attempt to Attenuate Escalation of Commitment”, McNamara et al 2002:

The notion that decision makers tend to incorrectly consider previous expenditures when deliberating current utility-based decisions (Arkes & Blumer, 1985) has been used to explain fiascoes ranging from the prolonged involvement of the United States in the Vietnam War to the disastrous cost overrun during the construction of the Shoreham Nuclear Power Plant (Ross & Staw, 1993). In the Shoreham Nuclear Power Plant example, escalation of commitment meant billions of wasted dollars (Ross & Staw, 1993). In the Vietnam War, it may have cost thousands of lives…Kirby and Davis’s (1998) experimental study showed that increased monitoring could dampen the escalation of commitment. Staw, Barsade, and Koput’s (1997) field data on the banking industry led them to conclude that top manager turnover led to de-escalation of commitment at an aggregate level.

…So far, the results support the efficacy of changes in monitoring and decision responsibility as cures for the escalation of commitment bias. We now turn to the side effects of these treatments. Hypotheses 4 and 5 propose that the threat of increased monitoring and change in management responsibility increase the likelihood of a different form of undesirable decision commitment—the persistent underassessment of borrower risk. The results in column 3 of Table 2 support these hypotheses. Both the threat of increased monitoring and the threat of change in decision responsibility increase the likelihood of persistent underassessment of borrower risk (0.47, p < 0.01, and 0.50, p < 0.05, respectively). These findings support the view that decision makers are likely to fail to appropriately downgrade a borrower when, by doing so, they avoid an organizational intervention. We examined the change in investment commitment for borrowers whose risk was persistently underassessed and who faced either increased monitoring or change in decision responsibility if the decision makers had admitted that the risk needed downgrading. We found that decision makers did appear to exhibit escalation of commitment to these borrowers. The change in commitment (on average, over 30%) is significantly greater than 0 (t = 2.94, p < 0.01) and greater than the change in commitment to those borrowers who were correctly assessed as remaining at the same risk level (t = 2.58, p = 0.01). Combined, these findings suggest that although the organizational efforts to minimize undesirable decision commitment appeared successful at first glance, the threat of these interventions increased the likelihood that decision makers would persistently give overfavorable assessments of the risk of borrowers. In turn, the lending officers would then escalate their monetary commitment to these riskier borrowers.

On nuclear power plants as sunk cost fallacy, McAfee et al 2007:

According to evidence reported by De Bondt and Makhija (1988), managers of many utility companies in the U.S. have been overly reluctant to terminate economically unviable nuclear plant projects. In the 1960s, the nuclear power industry promised “energy too cheap to meter.” But nuclear power later proved unsafe and uneconomical. As the U.S. nuclear power program was failing in the 1970s and 1980s, Public Service Commissions around the nation ordered prudency reviews. From these reviews, De Bondt and Makhija find evidence that the Commissions denied many utility companies even partial recovery of nuclear construction costs on the grounds that they had been mismanaging the nuclear construction projects in ways consistent with “throwing good money after bad.”…In most projects there is uncertainty, and restarting after stopping entails costs, making the option to continue valuable. This is certainly the case for nuclear power plants, for example. Shutting down a nuclear reactor requires dismantling or entombment, and the costs of restarting are extremely high. Moreover, the variance of energy prices has been quite large. The option of maintaining nuclear plants is therefore potentially valuable. Low returns from nuclear power in the 1970s and 1980s might have been a consequence of the large variance, suggesting a high option value of maintaining nuclear plants. This may in part explain the evidence (reported by De Bondt and Makhija, 1988) that managers of utilities at the time were so reluctant to shut down seemingly unprofitable plants.↩︎

-

Steven Pinker, The Better Angels of Our Nature 2011, pg 336:

In the case of a war of attrition, one can imagine a leader who has a changing willingness to suffer a cost over time, increasing as the conflict proceeds and his resolve toughens. His motto would be: ‘We fight on so that our boys shall not have died in vain.’ This mindset, known as loss aversion, the sunk-cost fallacy, and throwing good money after bad, is patently irrational, but it is surprisingly pervasive in human decision-making.65 People stay in an abusive marriage because of the years they have already put into it, or sit through a bad movie because they have already paid for the ticket, or try to reverse a gambling loss by doubling their next bet, or pour money into a boondoggle because they’ve already poured so much money into it. Though psychologists don’t fully understand why people are suckers for sunk costs, a common explanation is that it signals a public commitment. The person is announcing: ‘When I make a decision, I’m not so weak, stupid, or indecisive that I can be easily talked out of it.’ In a contest of resolve like an attrition game, loss aversion could serve as a costly and hence credible signal that the contestant is not about to concede, preempting his opponent’s strategy of outlasting him just one more round.

It’s worth noting that there is at least one example of sunk cost (“entry licenses” [fees]) encouraging cooperation (“collusive price path”) in market agents: Offerman & Potter 2001, “Does Auctioning of Entry Licenses Induce Collusion? An Experimental Study”, who point out another case of how our sunk cost map may not correspond to the territory:

There is one caveat to the sunk cost argument, however. If the game for which the positions are allocated has multiple equilibria, an entry fee may affect the equilibrium that is being selected. Several experimental studies have demonstrated the force of this principle. For example, Cooper, DeJong, 1993, Van Huyck, 1993, and Cachon and Camerer, (1996) study coordination games with multiple equilibria and find that an entry fee may induce players to coordinate on a different (Pareto superior) equilibrium.↩︎

-

et al 2007

↩︎Reputational Concerns. In team relationships, each participant’s willingness to invest depends on the investments of others. In such circumstances, a commitment to finishing projects even when they appear ex post unprofitable is valuable, because such a commitment induces more efficient ex ante investment. Thus, a reputation for “throwing good money after bad”—the classic sunk cost fallacy—can solve a coordination problem. In contrast to the desire for commitment, people might rationally want to conceal bad choices to appear more talented, which may lead them to make further investments, hoping to conceal their investments gone bad.

Kanodia, Bushman, and Dickhaut (1989), 1996, and Camerer & Weber 1999 develop principal-agent models in which rational agents invest more if they have invested more in the past to protect their reputation for ability. We elucidate the general features of these models below and argue that concerns about reputation for ability are especially powerful in explaining apparent reactions to sunk costs by politicians. Carmichael and MacLeod (2003) [see also 2003] develop a model in which agents initially make investments independently and are later matched in pairs, their match produces a surplus, and they bargain over it based on cultural norms of fair division. A fair division rule in which each agent’s surplus share is increasing in their sunk investment, and decreasing in the other’s sunk investment, is shown to be evolutionarily stable.

…If a member of an illegal price-fixing cartel seems likely to confess to the government in exchange for immunity from prosecution, the other cartel members may race to be first to confess, since only the first gets immunity (in Europe, such immunity is called “leniency”). Similarly, a spouse who loses faith in the long-term prospects of a marriage invests less in the relationship, thereby reducing the gains from partnership, potentially dooming the relationship. In both cases, beliefs about the future viability matter to the success of the relationship, and there is the potential for self-fulfilling optimistic and pessimistic beliefs.

In such a situation, individuals may rationally select others who stay in the relationship beyond the point of individual rationality, if such a commitment is possible. Indeed, ex ante it is rational to construct exit barriers like costly and difficult divorce laws, so as to reduce early exit. Such exit barriers might be behavioral as well as legal. If an individual can develop a reputation for sticking in a relationship beyond the break-even point, it would make that individual a more desirable partner and thus enhance the set of available partners, as well as encourage greater and longer lasting investment by the chosen partner. One way of creating such a reputation is to act as if one cares about sunk costs…We now formalize this concept using a simple two-period model that sets aside consideration of selection…That is, a slight possibility of breach is collectively harmful; both agents would be ex ante better off if they could prevent breach when V − ρ < 1, which holds as long as the reputation cost ρ of breaching is not too small. In this model, a tendency to stay in the relationship due to a large sunk investment would be beneficial to each party.

-

The qualifier is because hyperbolic discounting has been demonstrated in many primates, and a number of other biases, eg. Chen et al 2006, “How Basic Are Behavioral Biases? Evidence from Capuchin Monkey Trading Behavior”↩︎

-

See also Radford & Blakey 2000, “Intensity of nest defence is related to offspring sex ratio in the great tit Parus major”:

Nest-defence behavior of passerines is a form of parental investment. Parents are selected, therefore, to vary the intensity of their nest defence with respect to the value of their offspring. Great tit, Parus major, males were tested for their defence response to both a nest predator and playback of a great tit chick distress call. The results from the two trials were similar; males gave more alarm calls and made more perch changes if they had larger broods and if they had a greater proportion of sons in their brood. This is the first evidence for a relationship between nest-defence intensity and offspring sex ratio. Paternal quality, size, age and condition, lay date and chick condition did not significantly influence any of the measured nest-defence parameters.

…The most consistent pattern found in studies of avian nest defence has been an increase in the level of the parental response to predators from clutch initiation to fledging (eg. 1981; 1983; 1988; Wiklund 1990a). This supports the prediction from parental investment theory (Trivers 1972) that parents should risk more in defence of young that are more valuable to them. The intensity of nest defence is also expected to be positively correlated with brood size because the benefits of deterring a predator will increase with offspring number (Williams 1966; Wiklund 1990b).

-

Northcraft & Wolf 1984, “Dollars, sense, and sunk costs: A life cycle model of resource allocation decisions”. Academy of Management Review, 9, 225–234:

The decision maker also may treat the negative feedback as simply a learning experience: a cue to redirect efforts within a project rather than abandon it (Connolly, 1976).

…In some cases (Brockner, Shaw, & Rubin, 1979), the expected rate of return for further financial commitment even can be shown with a few assumptions to be increasing and (after a certain amount of investment) financially advisable, despite the claim that further resource commitment under the circumstances is psychologically rather than economically motivated…More to the point, the life cycle model clearly reveals the psychologist’s fallacy: continuing a project in the face of a financial setback is not always irrational (it depends on the stage in the project and the magnitude of the financial setback). Second, the life cycle model provides an insight into the manager’s preoccupation with a project’s financial past. It demonstrates how a project’s financial past can be used heuristically to understand the project’s future.

Friedman et al 2006:

…There are also several possible rational explanations for an apparent concern with sunk costs. Maintaining a reputation for finishing what you start may have sufficient value to compensate for the expected loss on an additional investment. The ‘real option’ value (eg. Dixit and Pindyck, 1994 [Investment Under Uncertainty]) [cf. 2009, Tiwana & Fichman 2006] of continuing a project also may offset an expected loss. Agency problems in organizations may make it personally better for a manager to continue an unprofitable project than to cancel it and take the heat from its supporters (eg. Milgrom and Roberts, 1992 [Economics, Organization, and Management]).

(Certainty effects seem to be supported by fMRI imaging.) One may ask why capital constraints aren’t solved, if the projects really are good profitable ideas, by resort to equity or debt. But those are always last resorts due to fundamental coordination & trust issues; McAfee et al 2007:

Abundant theoretical literature in corporate finance shows that imposing financial constraints on firm managers improves agency problems (see Stiglitz and Weiss, 1981, Myers and Majluf, 1984, Lewis and Sappington, 1989, and Hart and Moore, 1995). The theoretical conclusion finds overwhelming empirical support, and only a small fraction of business investment is funded by borrowing (see Fazzari and Athey, 1987, Fazzari and Petersen, 1993, and Love, 2003). When managers face financial constraints, sunk costs must influence firm investments simply because of budgets…Firms with financial constraints might rationally react to sunk costs by investing more in a project, rather than less, because the ability to undertake alternative investments declines in the level of sunk costs…Given limited resources, if the firm has already sunk more resources into the current project, then the value of the option to start a new project if it arises is lower relative to the value of the option to continue the current project, because fewer resources are left over to bring any new project to fruition, and more resources have already been spent to bring the current project to fruition. Therefore, the firm’s incentive to continue investing in the current project is higher the more resources it has already sunk into the project.
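The budget argument in this passage can be made concrete with a toy calculation. What follows is only a minimal sketch of my own, not the model in McAfee et al: the function `best_choice`, its parameters, and all the numbers are hypothetical. A firm with a fixed budget compares finishing the current project against starting a new one, counting only forward-looking costs and payoffs, so the sunk amount enters solely through the money left over.

```python
def best_choice(budget, sunk, cost_current, cost_new, value_current, value_new):
    """Pick the best forward-looking option for a budget-constrained firm.

    Hypothetical toy model: `sunk` has already been spent out of `budget`
    on the current project, whose total cost is `cost_current`.
    Only options the remaining budget can pay for are considered.
    """
    remaining = budget - sunk
    cost_to_finish = cost_current - sunk

    options = {"stop": 0.0}  # walking away is always affordable
    if cost_to_finish <= remaining:
        # Net gain from finishing: payoff minus what is still to be spent.
        options["continue"] = value_current - cost_to_finish
    if cost_new <= remaining:
        # Net gain from abandoning and funding the new project in full.
        options["switch"] = value_new - cost_new
    return max(options, key=options.get)


if __name__ == "__main__":
    params = dict(cost_current=80, cost_new=60, value_current=70, value_new=120)
    for sunk in (10, 40, 41, 50):
        print(sunk, best_choice(budget=100, sunk=sunk, **params))
    # Same decision with no budget limit, for comparison.
    print("unconstrained", best_choice(budget=float("inf"), sunk=50, **params))
```

With these made-up numbers the new project is always the better forward-looking bet, and an unconstrained firm switches at every level of sunk cost. The constrained firm switches only while it can still afford the new project; once enough has been sunk it continues, without any psychological attachment to past spending.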

-

Stiglitz, Joseph E. and Weiss, Andrew, 1981. “Credit Rationing in Markets with Imperfect Information”, American Economic Review 71, 393–410.

-

Myers, Stewart and Majluf, Nicholas S., 1984. “Corporate Financing and Investment Decisions when Firms Have Information that Investors Do Not Have”, Journal of Financial Economics 13, 187–221.

-

Lewis, Tracy and Sappington, David E. M., 1989. “Countervailing Incentives in Agency Problems”, Journal of Economic Theory 49, 294–313.

-

Hart, Oliver and Moore, John, 1995. “Debt and Seniority: An Analysis of the Role of Hard Claims in Constraining Management”, American Economic Review 85, 567–585.

-

Fazzari, Steven and Athey, Michael J., 1987. “Asymmetric Information, Financing Constraints, and Investment”, Review of Economics and Statistics 69, 481–487.

-

Fazzari, Steven and Petersen, Bruce, 1993. “Working Capital and Fixed Investment: New Evidence on Financing Constraints”, RAND Journal of Economics 24, 328–342.

-

Love, Inessa, 2003. “Financial Development and Financing Constraints: International Evidence from the Structural Investment Model”, Review of Financial Studies 16, 765–791.

-

-

The swans:

Although none of these terms are used, the same phenomenon is also observed by et al 2001. In particular, tundra swans must expend more energy to “up-end” to feed on deep-water tuber patches than they do to “head-dip” to feed on shallow-water patches; however, contrary to the expectations of Nolet et al, the swans feed for a longer time on each high-cost deep-water patch. In every context, the observation of the sunk-cost effect is an enigma because intuition suggests that this behavior is suboptimal. Here, we show how optimization of Eq. (3) predicts the sunk-cost effect for certain scenarios; a common element of every case is a large initial cost.

-

Klaczynski & Cottrell 2004:

Although considerable evidence indicates that adults commit the SC fallacy frequently, age differences in the propensity to honour sunk costs have been little studied. In their investigations of 7–15-year-olds (Study 1) and 5–12-year-olds (Study 2), Baron et al 1993 found no relationship between age and SC decisions. By contrast, Klaczynski (2001b) reported that the SC fallacy decreased from early adolescence to adulthood, although normative decisions were infrequent across ages. A third pattern of findings is reviewed by Arkes & Ayton 1999. Specifically, Arkes and Ayton argue that two studies (Krouse, 1986; Webley & Plaiser, 1998) indicate that younger children commit the SC fallacy less frequently than older children. Making sense of these conflicting findings is difficult because criticisms can be levied against each investigation. For instance, Arkes & Ayton 1999 questioned the null findings of Baron et al 1993 because sample sizes were small (eg. in Baron et al Study 2, n per age group ranged 7–17). The problems used by Krouse (1986) and Webley & Plaiser 1998 were not, strictly speaking, SC problems (rather, they were problems of ‘mental accounting’; see Webley & Plaiser, 1998). Because Klaczynski (2001b) did not include children in his sample, the age trends he reported are limited to adolescence. Thus, an interpretable montage of age trends in SC decisions cannot be created from prior research.

…An alternative proposition is based on the previously outlined theory of the role of metacognition in mediating interactions between analytic and heuristic processing. In this view, even young children have had ample opportunities to convert the ‘waste not’ heuristic from a conscious strategy to an automatically activated heuristic stored as a procedural memory. Evidence from children’s experiences with food (eg. Birch, Fisher, & Grimm-Thomas, 1999) provides some support for the argument that even preschoolers are frequently reinforced for not ‘wasting’ food. Mothers commonly exhort their children to ‘clean up their plates’ even though they are sated and even though the nutritional effects of eating more than their bodies require are generally negative. If the ‘waste not’ heuristic is automatically activated in sunk cost situations for both children and adults, then one possibility is that no age differences in committing the fallacy should be expected. However, if activated heuristics are momentarily available for evaluation in working memory, then the superior metacognitive abilities of adolescents and adults should allow them to intercede in experiential processing before the heuristic is actually used. Although the evidence is clear that most adults do not take advantage of this opportunity for evaluation, the proportion of adolescents and adults who actively inhibit the ‘waste not’ heuristic should be greater than the same proportion of children.

-

eg. “Discounting of Delayed Rewards: A Life-Span Comparison”, Green et al 1994; abstract:

In this study, children, young adults, and older adults chose between immediate and delayed hypothetical monetary rewards. The amount of the delayed reward was held constant while its delay was varied. All three age groups showed delay discounting; that is, the amount of an immediate reward judged to be of equal value to the delayed reward decreased as a function of delay. The rate of discounting was highest for children and lowest for older adults, predicting a life-span developmental trend toward increased self-control. Discounting of delayed rewards by all three age groups was well described by a single function with age-sensitive parameters (all R²s > .94). Thus, even though there are quantitative age differences in delay discounting, the existence of an age-invariant form of discount function suggests that the process of choosing between rewards of different amounts and delays is qualitatively similar across the life span.
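To make “a single function with age-sensitive parameters” concrete, here is a minimal sketch. The abstract does not state the functional form, so I assume the simple hyperbola V = A / (1 + kD) commonly used in the discounting literature. The `present_value` helper and the per-group `k` values are illustrative guesses of mine, not the study’s fitted estimates.

```python
def present_value(amount, delay, k):
    """Hyperbolic discounting, V = A / (1 + k * D).

    Assumed form: the simple hyperbola, not necessarily the exact
    function fitted by Green et al. `k` is the discount rate and
    `delay` is in arbitrary time units (say, months).
    """
    return amount / (1.0 + k * delay)


# Hypothetical discount rates: the same function for every group,
# differing only in the parameter k. The values are made up.
ILLUSTRATIVE_K = {
    "children": 0.05,
    "young adults": 0.02,
    "older adults": 0.005,
}

if __name__ == "__main__":
    amount, delay = 1000.0, 12
    for group, k in ILLUSTRATIVE_K.items():
        value = present_value(amount, delay, k)
        print(f"{group}: {amount:.0f} delayed by {delay} is worth {value:.0f} now")
```

A larger `k` means steeper discounting, so under these assumed rates the children value the delayed reward least and the older adults most, while the shape of the curve is the same for everyone. That is the abstract’s qualitative claim: a quantitative age difference within an age-invariant form.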

-

“The Bias Against Creativity: Why People Desire But Reject Creative Ideas”, Mueller et al 2011:

Uncertainty is an aversive state (Fiske & Taylor, 1991 [Social cognition]; Heider, 1958 [The psychology of interpersonal relations]) which people feel a strong motivation to diminish and avoid (Whitson & Galinsky, 2008).

-

“Searching for the Sunk Cost Fallacy”, Friedman et al 2007:

Subjects play a computer game in which they decide whether to keep digging for treasure on an island or to sink a cost (which will turn out to be either high or low) to move to another island. The research hypothesis is that subjects will stay longer on islands that were more costly to find. Nine treatment variables are considered, eg. alternative visual displays, whether the treasure value of an island is shown on arrival or discovered by trial and error, and alternative parameters for sunk costs. The data reveal a surprisingly small and erratic sunk cost effect that is generally insensitive to the proposed psychological drivers.

I cite Friedman 2006 here so much because it’s unusual; as McAfee et al 2007 puts it:

…Most of the existing empirical work has not controlled for changing hazards, option values, reputations for ability and commitment, and budget constraints. We are aware of only one study in which several of these factors are eliminated: Friedman et al 2006. In an experimental environment without option value or reputation considerations, the authors find only very small and non-statistically-significant sunk cost effects in the majority of their treatments, consistent with the rational theory presented here.

-

On the lessons of et al 1990’s observation of non sunk cost fallacy, McAfee et al 2007: