Internet Search Tips

A description of advanced tips and tricks for effective Internet research of papers/books, with real-world examples.

Over time, I developed a certain google-fu and expertise in finding references, papers, and books online. I start with the standard tricks like Boolean queries and keyboard shortcuts, and go through the flowchart for how to search, modify searches for hard targets, penetrate paywalls, request jailbreaks, scan books, monitor topics, and host documents. Some of these tricks are not well-known, like checking the Internet Archive (IA) for books.

I try to write down my search workflow, and give general advice about finding and hosting documents, with demonstration case studies.

Google-fu search skill is something I’ve prided myself ever since elementary school, when the librarian challenged the class to find things in the almanac; not infrequently, I’d win. And I can still remember the exact moment it dawned on me in high school that much of the rest of my life would be spent dealing with searches, paywalls, and broken links. The Internet is the greatest almanac of all, and to the curious, a never-ending cornucopia, so I am sad to see many fail to find things after a cursory search—or not look at all. For most people, if it’s not the first hit in Google/Google Scholar, it doesn’t exist. Below, I reveal my best Internet search tricks and try to provide a rough flowchart of how to go about an online search, explaining the subtle tricks and tacit knowledge of search-fu.

Roughly, we need to have proper tools to create an occasion for a search: we cannot search well if we avoid searching at all. Then each search will differ by which search engine & type of medium we are searching—they all have their own quirks, blind spots, and ways to modify a failed search. Often, we will run into walls, each of which has its own circumvention methods. But once we have found something, we are not done: we would often be foolish & short-sighted if we did not then make sure it stayed found. Finally, we might be interested in advanced topics like ensuring in advance resources can be found in the future if need be, or learning about new things we might want to then go find. To illustrate the overall workflow & provide examples of tacit knowledge, I include many Internet case studies of finding hard-to-find things.

Papers

Search

Preparation

Cerca, trova.

Do or do not; there is no try. The first thing you must do is develop a habit of searching when you have a question: “Google is your friend.” Your only search guaranteed to fail is the one you never run. (Beware trivial inconveniences!)

-

Query Syntax Knowledge

Know your basic Boolean operators & the key G search operators: double quotes for exact matches, hyphens for negation/exclusion, and

site:for search a specific website or specific directory of that website (eg.foo site:gwern.net/doc/genetics/, or to exclude folders,foo site:gwern.net -site:gwern.net/doc/). You may also want to play with Advanced Search to understand what is possible. (There are many more G search operators (Russell description) but they aren’t necessarily worth learning, because they implement esoteric functionality and most seem to be buggy1.) -

Hotkey Shortcuts (strongly recommended)

Enable some kind of hotkey search with both prompt and copy-paste selection buffer, to turn searching Google (G)/Google Scholar (GS)/Wikipedia (WP) into a reflex.2 You should be able to search instinctively within a split second of becoming curious, with a few keystrokes. (If you can’t use it while IRCing without the other person noting your pauses, it’s not fast enough.)

Example tools: AutoHotkey (Windows), Quicksilver (Mac), xclip+Surfraw/StumpWM’s

search-engines/XMonad’sActions.Search/Prompt.Shell(Linux). DuckDuckGo offers ‘bangs’, within-engine special searches (most are equivalent to a kind of Googlesite:search), which can be used similarly or combined with prompts/macros/hotkeys.I make heavy use of the XMonad hotkeys, which I wrote, and which gives me window manager shortcuts: while using any program, I can highlight a title string, and press

Super-shift-yto open the current selection as a GS search in a new Firefox tab within an instant; if I want to edit the title (perhaps to add an author surname, year, or keyword), I can instead open a prompt,Super-y, paste withC-y, and edit it before a\nlaunches the search. As can be imagined, this is extremely helpful for searching for many papers or for searching. (There are in-browser equivalents to these shortcuts but I disfavor them because they only work if you are in the browser, typically require more keystrokes or mouse use, and don’t usually support hotkeys or searching the copy-paste selection buffer: Firefox, Chrome) -

Web Browser Hotkeys

For navigating between sets of results and entries, you should have good command of your tabbed web browser. You should be able to go to the address bar, move left/right in tabs, close tabs, open new blank tabs, unclose tabs, go to the nth tab, etc. (In Firefox/Chrome Win/Linux, those are, respectively:

C-l,C-PgUp/C-PgDwn,C-w,C-t/C-T,M-[1–9].)

Searching

Having launched your search in, presumably, Google Scholar, you must navigate the GS results. For GS, it is often as simple as clicking on the [PDF] or [HTML] link in the top right which denotes (what GS believes to be) a fulltext link, eg:

![An example of a hit in Google Scholar: note the [HTML] link indicating there is a fulltext Pubmed version of this paper (often overlooked by newbies).](/doc/technology/google/gwern-googlescholar-search-highlightfulltextlink.png)

An example of a hit in Google Scholar: note the [HTML] link indicating there is a fulltext Pubmed version of this paper (often overlooked by newbies).

GS: if no fulltext in upper right, look for soft walls. In GS, remember that a fulltext link is not always denoted by a “[PDF]” link! Check the top hits by hand: there are often ‘soft walls’ which block web spiders but still let you download fulltext (perhaps after substantial hassle, like SSRN).

Note that GS supports other useful features like alerts for search queries, alerts for anything citing a specific paper, and reverse citation searches (to followup on a paper to look for failures-to-replicate or criticisms of it).

Drilling Down

A useful hit may not turn up immediately. Life is like that.3 You may have to get creative:

-

Title searches: if a paper fulltext doesn’t turn up on the first page, start tweaking (hard rules cannot be given for this, it requires development of “mechanical sympathy” and asking a mixture of “how would a machine think to classify this” and “how would other people think to write this”):

-

The Golden Mean: Keep mind when searching, you want some but not too many or too few results. A few hundred hits in GS is around the sweet spot. If you have less than a page of hits, you have made your search too specific.

If nothing is turning up, try trimming the title. Titles tend to have more errors towards the end than the beginning, and people often drop So start cutting words off the end of the title to broaden the search. Think about what kinds of errors you make when you recall titles: you drop punctuation or subtitles, substitute in more familiar synonyms, or otherwise simplify it. (How might OCR software screw up a title?)

Pay attention to technical terms that pop up in association with your own query terms, particularly in the snippets or full abstracts. Which ones look like they might be more popular than yours, or indicate yours are usually used slightly different from you think they mean? You may need to switch terms.

If deleting a few terms then yields way too many hits, try to filter out large classes of hits with a negation

foo -bar, adding as many as necessary; also useful is using OR clauses to open up the search in a more restricted way by adding in possible synonyms, with parentheses for group. This can get quite elaborate, and border on hacking—I have on occasion resorted to search queries as baroque as(foo OR baz) AND (qux OR quux) -bar -garply -waldo -fredto the point where I hit search query length limits and CAPTCHA barriers.4 (By that point, it is time to consider alternate attacks.) -

Tweak The Title: quote the title; delete any subtitle; try the subtitle instead; be suspicious of any character which is not alphanumeric and if there are colons, split it into two title quotes (instead of searching

Foo bar: baz quux, or"Foo bar: baz quux", search"Foo bar" "baz quux"); swap their order. -

Tweak The Metadata:

-

Add/remove the year.

-

Add/remove the first author’s surname. Try searching GS for just the author (

author:foo).

-

-

Delete Odd Characters/Punctuation:

Libgen had trouble with colons for a long time, and many websites still do (eg. GoodReads); I don’t know why colons in particular are such trouble, although hyphens/em-dashes and any kind of quote or apostrophe or period are problematic too. Watch out for words which may be space-separated—if you want to find Arpad Elo’s epochal The Rating of Chessplayers in Libgen, you need to search “The Rating of Chess Players” instead! (This is also an example of why falling back to search by author is a good idea.)

-

Tweak Spelling: Try alternate spellings of British/American terms. This shouldn’t be necessary, but then, deleting colons or punctuation shouldn’t be necessary either.

-

Check For Book Publication: many papers are published in the form of book anthologies, not journal articles. So look for the book if the paper is mysteriously absent.

A book will not necessarily turn up in GS and thus its constituent papers may not either; similarly, while SH does a good job of linking article paywalls to their respective book compilation in LG, it is far from infallible. If a paper was published in any kind of ‘proceeding’ or ‘conference’ or ‘series’ or anything with an ISBN, the paper may be absent from the usual places but the book readily available. It can be quite frustrating to be searching hard for a paper and realize the book was there in plain sight all along. (My suggestion in such cases for post-finding is to cut out the relevant page range & upload the paper for others to more easily find.)

-

Use URLs: if you have a URL, try searching chunks of it, typically towards the end, stripping out dates and domain names.

-

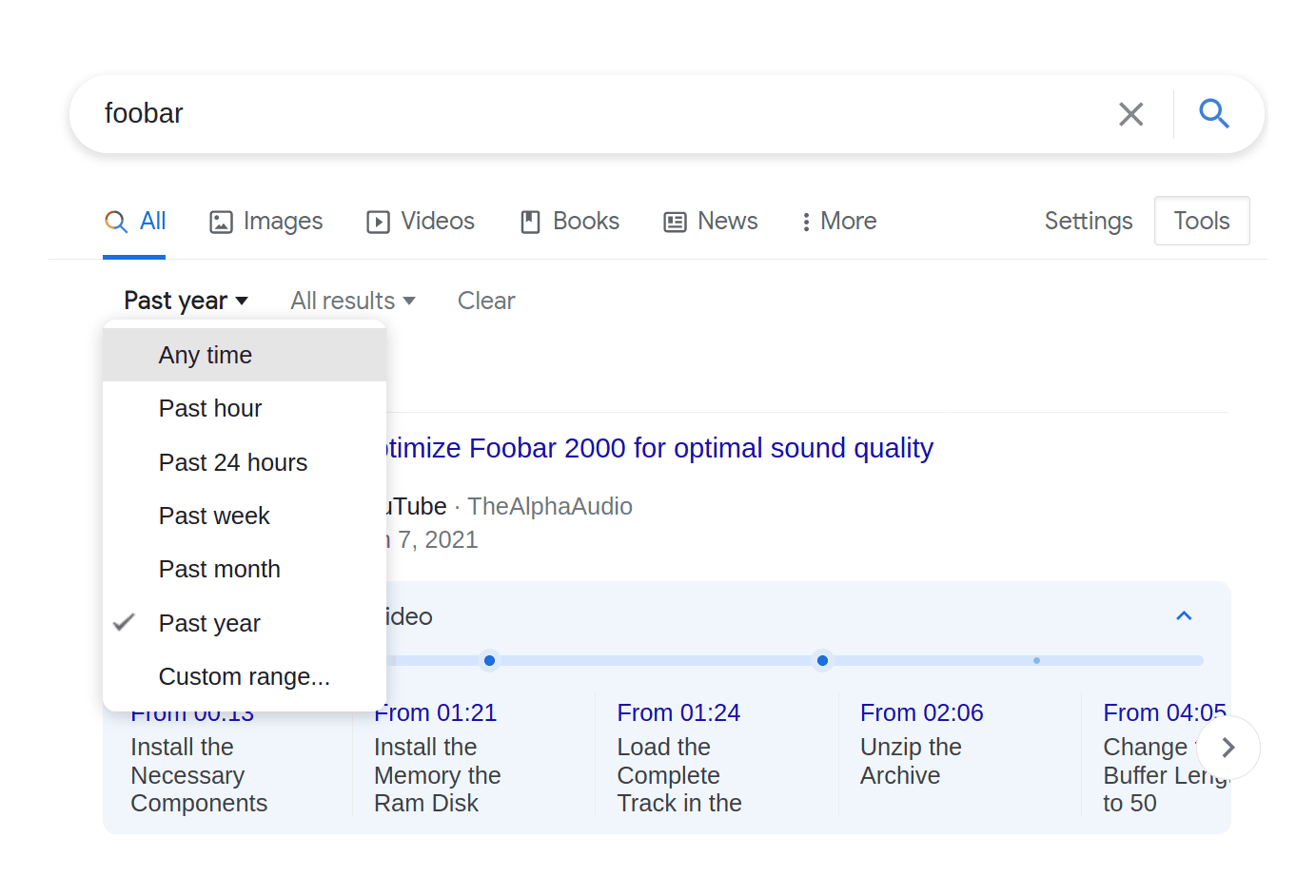

Date Search:

Use a search engine (eg. G/GS) date range feature (in “Tools”) to search ±4 years: metadata can be wrong, publishing conventions can be odd (eg. a magazine published in ‘June’ may actually be published several months before or after), publishers can be extremely slow. This is particularly useful if you add a date constraint & simultaneously loosen the search query to turn up the most temporally-relevant of what would otherwise be far too many hits. If this doesn’t turn up the relevant target, it might turn up related discussions or fixed citations, since most things are cited most shortly after publication and then vanish into obscurity.

Click “Tools” on the far right to access date-range & “verbatim” search modes in Google Search.

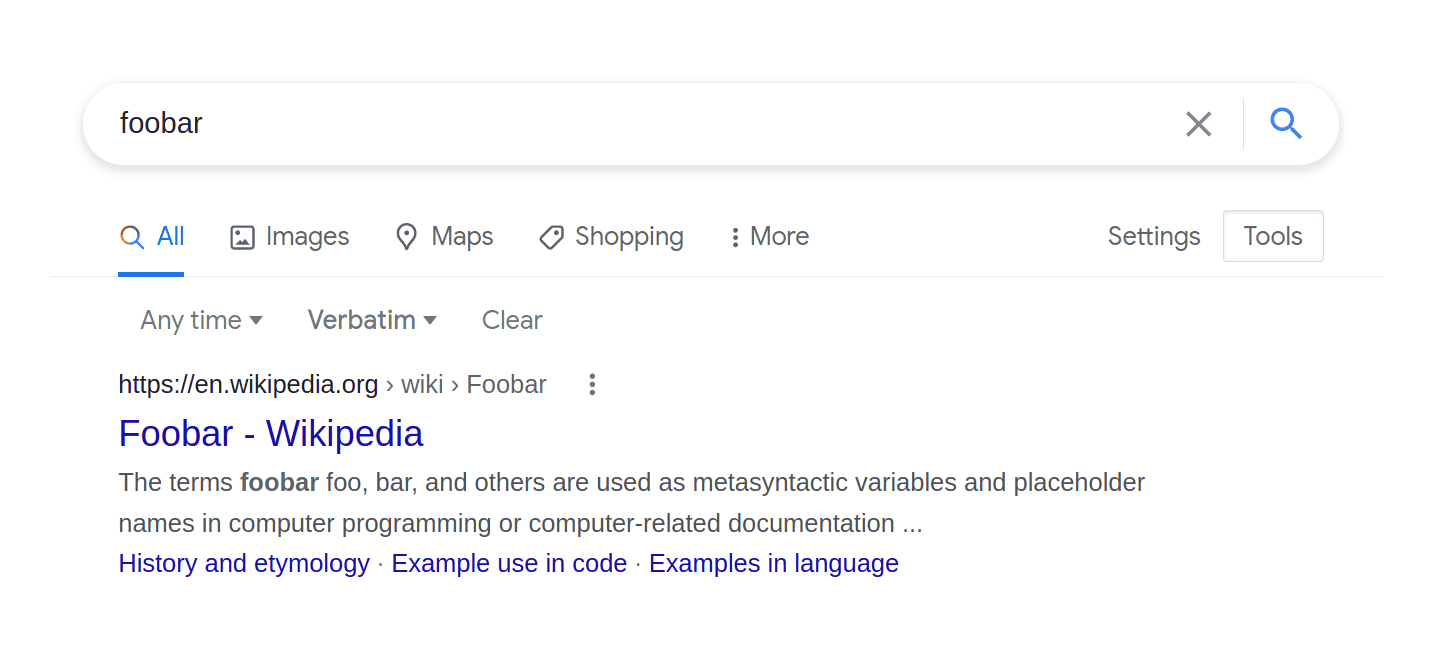

The “verbatim” mode is useful for forcing more literal matching: without it, a search for “foobar” will insist on hits about music players, hiring contests, etc rather than the programming term itself.

If a year is not specified, try to guess from the medium: popular media has heavy recentist bias & prefers only contemporary research which is ‘news’, while academic publications go back a few more years; the style of the reference can give a hint as to how relatively old some mentioned research or writings is. Frequently, given the author surname and a reasonable guess at some research being a year or two old, the name + date-range + keyword in GS will be enough to find the paper.

-

Consider errors: typos are common. If nothing is showing up in the date-range despite a specific date, perhaps there was a typographic error. Even a diligent typist will occasionally copy metadata from a previous entry or type the same character twice or 2 characters in the wrong order, and for numbers, there is no spellcheck to help catch such errors. Authors frequently propagate bibliographic errors without correcting them (demonstrating, incidentally, that they probably did not read the original and so any summaries should be taken with a grain of salt). Think about plausible transpositions & neighboring keys on a QWERTY keyboard: eg. a year like “1976” may actually be 196659ya, 196758ya, 197550ya, 197748ya, or 198639ya (but it will not be 1876149ya or 2976 or 198738ya). What typos would you make if you were reading or typing in a hurry? What OCR errors are likely, such as confusing ‘3’/‘8’?

-

-

Add Jargon: Add technical terminology which might be used by relevant papers; for example, if you are looking for an article on college admissions statistics, any such analysis would probably be using logistic regression and, even if they do not say “logistic regression” (in favor of some more precise yet unguessable term) would express their effects in terms of “odds”.

If you don’t know what jargon might be used, you may need to back off and look for a review article or textbook or WP page and spend some quality time reading. If you’re using the wrong term, period, nothing will help you; you can spend hours going through countless pages, but that won’t make the wrong term work. You may need to read through overviews until you finally recognize the skeleton of what you want under a completely different (and often rather obtuse) name. Nothing is more frustrating that knowing there must be a large literature on a topic (“Cowen’s Law”) but being unable to find it because it’s named something completely different from expected—and many fields have different names for the same concept or tool. (Occasionally people compile “Rosetta stones” to translate between fields: eg. 2009, 2018, et al 2018’s Table 1. These are invaluable.)

-

Even The Humble Have A Tale: Beware hastily dismissing ‘bibliographic’ websites as useless—they may have more than you think.

While a bibliographic-focused library site like

elibrary.ruis (almost) always useless & clutters up search results by hosting only the citation metadata but not fulltext, every so often I run into a peculiar foreign website (often Indian or Chinese) which happens to have a scan of a book or paper. (eg. 1954, which eluded me for well over half an hour until, taking the alternate approach of hunting its volume, I out of desperation clicked on an Indian index/library website which… had it. Go figure.) Sometimes you have to check every hit, just in case. -

Search Internet Archive:

The Internet Archive (IA) deserves special mention as a target because it is the Internet’s attic, bursting at the seams with a remarkable assortment of scans & uploads from all sorts of sources—not just archiving web pages, but scanning university collections, accepting uploads from rogue archivists and hackers and obsessive fans and the aforementioned Indian/Chinese libraries with more laissez-faire approaches.5 This extends to its media collections as well—who would expect to find so many old science-fiction magazines (as well as many other magazines), a near-infinite number of Grateful Dead recordings, the original 114 episodes of Tom and Jerry, or thousands of arcade & console & PC & Flash games (all playable in-browser)? The Internet Archive is a veritable Internet in and of itself; the problem, of course, is finding anything…

So not infrequently, a book may be available, or a paper exists in the middle of a scan of an entire journal volume, but the IA will be ranked very low in search queries and the snippet will be misleading due to bad OCR. A good search strategy is to drop the quotes around titles or excerpts and focus down to

site:archive.organd check the first few hits by hand. (You can also try the relatively new “Internet Archive Scholar”, which appears to be more comprehensive than Google-site-search.)

Hard Cases

If the basic tricks aren’t giving any hints of working, you will have to get serious. The title may be completely wrong, or it may be indexed under a different author, or not directly indexed at all, or hidden inside a database. Here are some indirect approaches to finding articles:

-

Reverse Citations: Take a look in GS’s “related articles” or “cited by” to find similar articles such as later versions of a paper which may be useful. (These are also good features to know about if you want to check things like “has this ever been replicated?” or are still figuring out the right jargon to search.)

-

Anomalous Hits: Look for hints of hidden bibliographic connections and anomalous hits.

Does a paper pop up high in the search results which doesn’t seem to make sense, such as not containing your keywords in the displayed snippet? GS generally penalizes items which exist as simply bibliographic entries, so if one is ranked high in a sea of fulltexts, that should make you wonder why it is being prioritized. Similarly, for Google Books (GB): a book might be forbidden from displaying even snippets but rank high; that might be for a good reason—it may actually contain the fulltext hidden inside it, or something else relevant.

Likewise, you cannot trust metadata too much. The inferred or claimed title may be wrong, and a hit may be your desired target lurking in disguise.

-

Compilation Files: Some papers can be found by searching for the volume or book title to find it indirectly, especially conference proceedings or anthologies; many papers appear to not be available online but are merely buried deep inside a 500-page PDF, and the G snippet listing is misleading.

Conferences are particularly complex bibliographically, so you may need to apply the same tricks as for page titles: drop parts, don’t fixate on the numbers, know that the authors or ISBN or ordering of “title: subtitle” can differ between sources, etc.

PDF Pages Are Linkable

Tech trick: you can link to a specific page number N of any PDF by adding

#page=Nto the URL (eg. this link links to the text samples in the Megatron paper on page 13, rather than the first page) -

Search By Issue: Another approach is to look up the listing for a journal issue, and find the paper by hand; sometimes papers are listed in the journal issue’s online Table of Contents, but just don’t appear in search engines (‽). In particularly insidious cases, a paper may be digitized & available—but lumped in with another paper due to error, or only as part of a catch-all file which contains the last 20 miscellaneous pages of an issue. Page range citations are particularly helpful here because they show where the overlap is, so you can download the suspicious overlapping ‘papers’ to see what they really contain.

Esoteric as this may sound, this has been a problem on multiple occasions. I searched in vain for any hint of 1929’s existence, half-convinced it was a typo for his 1959 publication, until I turned to the raw journal scans. A particularly epic example was 1966 where after an hour of hunting, all I had was bibliographic echoes despite apparently being published in a high-profile, easily obtained, & definitely digitized journal, Science—leaving me thoroughly baffled. I eventually looked up the ToC and inferred it had been hidden in a set of abstracts!6 Or a number of SMPY papers turned out to be split or merged with neighboring items in journal issues, and I had to fix them by hand.

-

Masters/PhD Theses: sorry. It may be hopeless if it’s pre-2000. You may well find the citation and even an abstract, but actual fulltext…?

If you have a university proxy, you may be able to get a copy off ProQuest (specializing in US theses). If ProQuest does not allow a download but indexes it, that usually means it has a copy archived on microfilm/microfiche, but no one has yet paid for a scan to be made; you can sign up without any special permission, and then purchase ProQuest scans for ~$43 (as of 2023), and that gives you a downloadable PDF. (They apparently scan non-digital works from their vast backlog only on request, so it’s almost like ransoming papers; which means that buying a scan makes it available to academic subscribers as part of the ProQuest database.)

Otherwise, you need full university ILL services7, and even that might not be enough (a surprising number of universities appear to restrict access only to the university students/faculty, with the complicating factor of most theses being stored on microfilm).

-

Reverse Image Search: If images are involved, a reverse image search in Google Images or TinEye or Yandex Search can turn up important leads.

Bellingcat has a good guide by Aric Toller: “Guide To Using Reverse Image Search For Investigations”. (Yandex image search appears to exploit face recognition, text OCR, and other capabilities Google Images will not, and bows less to copyright concerns.)

Use Browser Page Info to Bypass Image Restrictions

If you are having trouble downloading an image from a web page which is badly/maliciously designed to stop you, use “View Page Info”’s (

C-I) “Media” tab (eg), which will list the images in a page and let one download them directly. -

Enemy Action: Is a page or topic not turning up in Google/IA that you know ought to be there? Check the website’s

robots.txt& sitemap. While not as relevant as they used to be (due to increasing use of dynamic pages & entities ignoring it),robots.txtcan sometimes be relevant: key URLs may be excluded from search results, and overly-restrictiverobots.txtcan cause enormous holes in IA coverage, which may be impossible to fix (but at least you’ll know why). -

Patience: not every paywall can be bypassed immediately, and papers may be embargoed or proxies not immediately available.

If something is not available at the moment, it may become available in a few months. Use calendar reminders to check back in to see if an embargoed paper is available or if LG/SH have obtained it, and whether to proceed to additional search steps like manual requests.

-

Domain Knowledge-Specific Tips:

-

Twitter: Twitter is indexed in Google so web searches may turn up hits, but if you know any metadata, Twitter’s native search functions are still relatively powerful (although Twitter limits searches in many ways in order to drive business to its staggeringly-expensive ‘firehose’ & historical analytics). Use of Twitter’s “advanced search” interface, particularly the

from:&to:search query operators, can vastly cut down the search space. (Also of note:list:,-filter:retweets,near:,url:, &since:/until:.) -

US federal courts: US federal court documents can be downloaded off PACER after registration; it is pay-per-page ($0.13$0.12018/page) but users under a certain level each quarter (currently $19.41$152018) have their fees waived, so if you are careful, you may not need to pay anything at all. There is a public mirror, called RECAP, which can be searched & downloaded from for free. If you fail to find a case in RECAP and must use PACER (as often happens for obscure cases), please install the Firefox/Chrome RECAP browser extension, which will copy anything you download into RECAP. (This can be handy if you realize later that you should’ve kept a long PDF you downloaded or want to double-check a docket.)

Navigating PACER can be difficult because it is an old & highly specialized computer system which assumes you are a lawyer, or at least very familiar with PACER & the American federal court system. As a rule of thumb, if you are looking up a particular case, what you want to do is to search for the first name & surname (even if you have the case ID) for either criminal or civil cases as relevant, and pull up all cases which might pertain to an individual; there can be multiple cases, cases can hibernate for years, be closed, reopened as a different case number, etc. Once you have found the most active or relevant case, you want to look at the “docket”, and check the options to see all documents in the case. This will pull up a list of many documents as the case unfolds over time; most of these documents are legal bureaucracy, like rescheduling hearings or notifications of changed lawyers. You want the longest documents, as those are most likely to be useful. In particular, you want the “indictment”, the “criminal complaint”8, and any transcripts of trial testimony.9 Shorter documents, like 1–2pg entries in the docket, can be useful, but are much less likely to be useful unless you are interested in the exact details of how things like pre-trial negotiations unfold. So carelessly choosing the ‘download all’ option on PACER may blow through your quarterly budget without getting you anything interesting (and also may interfere with RECAP uploading documents).

There is no equivalent for state or county court systems, which are balkanized and use a thousand different systems (often privatized & charging far more than PACER); those must be handled on a case by case basis. (Interesting trivia point: according to Nick Bilton’s account of the Silk Road 1 case, the FBI and other federal agencies in the SR1 investigation would deliberately steer cases into state rather than federal courts in order to hide them from the relative transparency of the PACER system. The use of multiple court systems can backfire on them, however, as in the case of SR2’s DoctorClu (see the DNM arrest census for details), where the local police filings revealed the use of hacking techniques to deanonymize SR2 Tor users, implicating CMU’s CERT center—details which were belatedly scrubbed from the PACER filings.)

-

charity financials: for USA charity financial filings, do

Form 990 site:charity.comand then check GuideStar (eg. looking at Girl Scouts filings or “Case Study: Reading Edge’s financial filings”). For UK charities, the Charity Commission for England and Wales may be helpful. -

education research: for anything related to education, do a site search of ERIC, which is similar to IA in that it will often have fulltext which is buried in the usual search results

-

Wellcome Library: the Wellcome Library has many old journals or books digitized which are impossible to find elsewhere; unfortunately, their SEO is awful & their PDFs are unnecessarily hidden behind click-through EULAs, so they will not show up normally in Google Scholar or elsewhere. If you see the Wellcome Library in your Google hits, check it out carefully.

-

magazines (as opposed to scholarly or trade journals) are hard to get.

They are not covered in Libgen/Sci-Hub, which outsource that to MagzDB; coverage is poor, however. An alternative is pdf-giant. Particularly for pre-2000 magazines, one may have to resort to looking for old used copies on eBay. Some magazines are easier than others—I generally give up if I run into a New Scientist citation because it’s never worth the trouble.

-

-

Newspapers: like theses, tricky. I don’t know of any general solutions short of a LexisNexis subscription.10 An interesting resource for American papers is Chronicling America’s “Historic American Newspaper” scans.

By Quote or Description

For quote/description searches: if you don’t have a title and are falling back on searching quotes, try varying your search similarly to titles:

-

Novel sentences: Try the easy search first—whatever looks most memorable or unique.

-

Short quotes are unique: Don’t search too long a quote, a sentence or two is usually enough to be near-unique, and can be helpful in turning up other sources quoting different chunks which may have better citations.

-

Break up quotes: Because even phrases can be unique, try multiple sub-quotes from a big quote, especially from the beginning and end, which are likely to overlap with quotes which have prior or subsequent passages. This can be critical with apocryphal quotes, which often delete less witty sub-passages while accreting new material. (An extreme example would be the Oliver Heaviside quote.)

-

Odd idiosyncratic wording: Search for oddly-specific phrases or words, especially numbers. 3 or 4 keywords is usually enough.

-

Paraphrasing: Look for passages in the original text which seem like they might be based on the same source, particularly if they are simply dropped in without any hint at sourcing and don’t sound like the author; authors typically don’t cite every time they draw on a source, usually only the first time, and during editing the ‘first’ appearance of a source could easily have been moved to later in the text. All of these additional uses are something to add to your searches.

-

-

Robust Quotes: You are fighting a game of Chinese whispers, so look for unique-sounding sentences and terms which can survive garbling in the repeated transmissions.

Memories are urban legends told by one neuron to another over the years. Pay attention to how you mis-remember things: you distort them by simplifying them, rounding them to the nearest easiest version, and by adding in details which should have been there. Avoid phrases which could be easily reworded in multiple equivalent ways, as people usually will reword them when quoting from memory, screwing up literal searches. Remember the fallibility of memory and the basic principles of textual criticism: people substitute easy-to-remember versions for the hard, long11, or unusual original.

-

Tweak Spelling: Watch out for punctuation and spelling differences hiding hits.

-

Gradient Ascent: Longer, less witty versions are usually closer to the original and a sign you are on the right trail. The worse, the better. Sniff in the direction of worse versions. (Authors all too often fail to write what they were supposed to write—as Yogi Berra remarked, “I really didn’t say everything I said.”)

-

Search Books: Switch to GB and hope someone paraphrases or quotes it, and includes a real citation; if you can’t see the full passage or the reference section, look up the book in Libgen.

Dealing With Paywalls

Gold once out of the earth is no more due unto it; What was unreasonably committed to the ground is reasonably resumed from it: Let Monuments and rich Fabricks, not Riches adorn mens ashes. The commerce of the living is not to be transferred unto the dead: It is not injustice to take that which none complains to lose, and no man is wronged where no man is possessor.

Use Sci-Hub/Libgen for books/papers. A paywall can usually be bypassed by using Libgen (LG)/Sci-Hub (SH): papers can be searched directly (ideally with the DOI12, but title+author with no quotes will usually work), or an easier way may be to prepend13 sci-hub.st (or whatever SH mirror you prefer) to the URL of a paywall. Occasionally Sci-Hub will not have a paper or will persistently error out with some HTTP or proxy error, but searching the DOI in Libgen directly will work. Finally, there is a LibGen/Sci-Hub fulltext search engine on the Z-Library mirror, which is a useful alternative to Google Books (despite the poor OCR).

Use university Internet. If those don’t work and you do not have a university proxy or alumni access, many university libraries have IP-based access rules and also open WiFi or Internet-capable computers with public logins inside the library, which can be used, if you are willing to take the time to visit a university in person, for using their databases (probably a good idea to keep a list of needed items before paying a visit).

Public libraries too. Public libraries often subscribe to commercial newspapers or magazine databases; they are inconvenient to get to, but you can usually at least check what’s available on their website. Public & school libraries also have a useful trick for getting common schooling-related resources, such as the OED, or the archives of the New York Times or New Yorker: because of their usually unsophisticated & transient userbase, some public & school libraries will post lists of usernames/passwords on their website (sometimes as a PDF). They shouldn’t, but they do. Googling phrases like “public library New Yorker username password” can turn up examples of these. Used discreetly to fetch an article or two, it will do them no harm. (This trick works less well with passwords to anything else.)

If that doesn’t work, there is a more opaque ecosystem of filesharing services: booksc/bookfi/bookzz, private torrent trackers like Bibliotik, IRC channels with XDCC bots like #bookz/#ebooks, old P2P networks like eMule, private DC++ hubs…

Site-specific notes:

-

PubMed: most papers with a PMC ID can be purchased through the Chinese scanning service Eureka Mag; scans are $37.09$302020 & electronic papers are $24.73$202020.

-

Elsevier/

sciencedirect.com: easy, always available via SH/LGNote that many Elsevier journal websites do not work with the SH proxy, although their

sciencedirect.comversion does and/or the paper is already in LG. If you see a link tosciencedirect.comon a paywall, try it if SH fails on the journal website itself. -

PsycNET: one of the worst sites; SH/LG never work with the URL method, rarely work with paper titles/DOIs, and with my university library proxy, loaded pages ‘expire’ and redirect while breaking the browser back button (‽‽‽), combined searches don’t usually work (frequently failing to pull up even bibliographic entries), and only DOI or manual title searches in the EBSCOhost database have a chance of fulltext. (EBSCOhost itself is a fragile search engine which is difficult to query reliably in the absence of a DOI.)

Try to find the paper anywhere else besides PsycNET!

-

ProQuest/JSTOR: ProQuest/JSTOR are not standard academic publishers, but have access to or mirrors of a surprisingly large number of publications.

I have been surprised how often I have hit dead-ends, and then discovered a copy sitting in ProQuest/JSTOR, poorly-indexed by search engines.

-

Custom journal websites: sometimes a journal will have its own website (eg. Cell or Florida Tax Review), but will still be ultimately run by one of the giants like Elsevier or HeinOnline. (You can often see hints of this in the site design, such as the footer, the URL structure, direct links to the publisher version, etc.)

When this is the case, it is usually a waste of time to try to use the journal website: it won’t whitelist university IPs, SH/LG won’t know how to handle it, etc. Instead, look for the alternative version.

Request

Human flesh search engine. Last resort: if none of this works, there are a few places online you can request a copy (however, they will usually fail if you have exhausted all previous avenues):

Finally, you can always try to contact the author. This only occasionally works for the papers I have the hardest time with, since they tend to be old ones where the author is dead or unreachable—any author publishing a paper since 199035ya will usually have been digitized somewhere—but it’s easy to try.

Post-Finding

After finding a fulltext copy, you should find a reliable long-term link/place to store it and make it more findable (remember—if it’s not in Google/Google Scholar, it doesn’t exist!):

-

Never Link Unreliable Hosts:

-

LG/SH: Always operate under the assumption they could be gone tomorrow. (As my uncle found out with Library.nu shortly after paying for a lifetime membership!) There are no guarantees either one will be around for long under their legal assaults or the behind-the-scenes dramas, and no guarantee that they are being properly mirrored or will be restored elsewhere.

When in doubt, make a copy. Disk space is cheaper every day. Download anything you need and keep a copy of it yourself and, ideally, host it publicly.

-

NBER: never rely on a

papers.nber.org/tmp/orpsycnet.apa.orgURL, as they are temporary. (SSRN is also undesirable due to making it increasingly difficult to download, but it is at least reliable.) -

Scribd: never link Scribd—they are a scummy website which impede downloads, and anything on Scribd usually first appeared elsewhere anyway. (In fact, if you run into anything vaguely useful-looking which exists only on Scribd, you’ll do humanity a service if you copy it elsewhere just in case.)

-

RG: avoid linking to ResearchGate (compromised by new ownership & PDFs get deleted routinely, apparently often by authors) or

Academia.edu(the URLs are one-time and break) -

high-impact journals: be careful linking to Nature.com or Cell (if a paper is not explicitly marked as Open Access, even if it’s available, it may disappear in a few months!14); similarly, watch out for

wiley.com,tandfonline.com,jstor.org,springer.com,springerlink.com, &mendeley.com, who pull similar shenanigans. -

~/: be careful linking to academic personal directories on university websites (often noticeable by the Unix convention.edu/~user/or by directories suggestive of ephemeral hosting, like.edu/cs/course112/readings/foo.pdf); they have short half-lives. -

?token=: beware any PDF URL with a lot of trailing garbage in the URL such as query strings like?casa_tokenor?cookieor?X(or hosted on S3/AWS); such links may or may not work for other people but will surely stop working soon. (Academia.edu, Nature, and Elsevier are particularly egregious offenders here.)

-

-

PDF Editing: if a scan, it may be worth editing the PDF to crop the edges, threshold to binarize it (which, for a bad grayscale or color scan, can drastically reduce filesize while increasing readability), and OCR it.

I use gscan2pdf but there are alternatives worth checking out.

-

Check & Improve Metadata.

Adding metadata to papers/books is a good idea because it makes the file findable in G/GS (if it’s not online, does it really exist?) and helps you if you decide to use bibliographic software like Zotero in the future. Many academic publishers & LG are terrible about metadata, and will not include even title/author/DOI/year.

PDFs can be easily annotated with metadata using ExifTool::

exiftool -Allprints all metadata, and the metadata can be set individually using similar fields.For papers hidden inside volumes or other files, you should extract the relevant page range to create a single relevant file. (For extraction of PDF page-ranges, I use

pdftk, eg:pdftk 2010-davidson-wellplayed10-videogamesvaluemeaning.pdf cat 180-196 output 2009-fortugno.pdf. Many publishers insert a spam page as the first page. You can drop that easily withpdftk INPUT.pdf cat 2-end output OUTPUT.pdf, but note that PDFtk may drop all metadata, so do that before adding any metadata. To delete pseudo-encryption or ‘passworded’ PDFs, dopdftk INPUT.pdf input_pw output OUTPUT.pdf; PDFs using actual encryption are trickier but can often be beaten by off-the-shelf password-cracking utilities.) For converting JPG/PNGs to PDF, one can use ImageMagick for <64 pages (convert *.png foo.pdf) but beyond that one may need to convert them individually & then join the resulting PDFs (eg.for f in *.png; do convert "$f" "${f%.png}.pdf"; done && pdftk *.pdf cat output foo.pdfor join withpdfunite *.pdf foo.pdf.)I try to set at least title/author/DOI/year/subject, and stuff any additional topics & bibliographic information into the “Keywords” field. Example of setting metadata:

exiftool -Author="Frank P. Ramsey" -Date=1930 -Title="On a Problem of Formal Logic" -DOI="10.1112/plms/s2-30.1.264" \ -Subject="mathematics" -Keywords="Ramsey theory, Ramsey's theorem, combinatorics, mathematical logic, decidability, \ first-order logic, Bernays-Schönfinkel-Ramsey class of first-order logic, _Proceedings of the London Mathematical \ Society_, Volume s2-30, Issue 1, 1930-01-01, pg264-286" 1930-ramsey.pdf“PDF Plus” is better than “PDF”.

If two versions are provided, the “PDF” one may be intended (if there is any real difference) for printing and exclude features like hyperlinks .

-

Public Hosting: if possible, host a public copy; especially if it was very difficult to find, even if it was useless, it should be hosted. The life you save may be your own.

-

Link On WP/Social Media: for bonus points, link it in appropriate places on Wikipedia or Reddit or Twitter; this makes people aware of the copy being available, and also supercharges visibility in search engines.

-

Link Specific Pages: as noted before, you can link a specific page by adding

#page=Nto the URL. Linking the relevant page is helpful to readers. (I recommend against doing this if this is done to link an entire article inside a book, because that article will still have bad SEO and it will be hard to find; in such cases, it’s better to crop out the relevant page range as a standalone article, eg. usingpdftkagain forpdftk 1900-BOOK.pdf cat 123-456 output 1900-PAPER.pdf.)

Advanced

Aside from the (highly-recommended) use of hotkeys and Booleans for searches, there are a few useful tools for the researcher, which while expensive initially, can pay off in the long-term:

-

archiver-bot: automatically archive your web browsing and/or links from arbitrary websites to forestall linkrot; particularly useful for detecting & recovering from dead PDF links -

Subscriptions like PubMed & GS search alerts: set up alerts for a specific search query, or for new citations of a specific paper. (Google Alerts is not as useful as it seems.)

-

PubMed has straightforward conversion of search queries into alerts: “Create alert” below the search bar. (Given the volume of PubMed indexing, I recommend carefully tailoring your search to be as narrow as possible, or else your alerts may overwhelm you.)

-

To create generic GS search query alert, simply use the “Create alert” on the sidebar for any search. To follow citations of a key paper, you must: 1. bring up the paper in GS; 2. click on “Cited by X”; 3. then use “Create alert” on the sidebar.

-

-

GCSE: a Google Custom Search Engines is a specialized search queries limited to whitelisted pages/domains etc (eg. my Wikipedia-focused anime/manga CSE).

A GCSE can be thought of as a saved search query on steroids. If you find yourself regularly including scores of the same domains in multiple searches search, or constantly blacklisting domains with

-site:or using many negations to filter out common false positives, it may be time to set up a GCSE which does all that by default. -

Clippings: note-taking services like Evernote/Microsoft OneNote: regularly making and keeping excerpts creates a personalized search engine, in effect.

This can be vital for refinding old things you read where the search terms are hopelessly generic or you can’t remember an exact quote or reference; it is one thing to search a keyword like “autism” in a few score thousand clippings, and another thing to search that in the entire Internet! (One can also reorganize or edit the notes to add in the keywords one is thinking of, to help with refinding.) I make heavy use of Evernote clipping and it is key to refinding my references.

-

Crawling Websites: sometimes having copies of whole websites might be useful, either for more flexible searching or for ensuring you have anything you might need in the future. (example: “Darknet Market Archives (2013–22015)”).

Useful tools to know about: wget, cURL, HTTrack; Firefox plugins: NoScript, uBlock origin, Live HTTP Headers, Bypass Paywalls, cookie exporting.

Short of downloading a website, it might also be useful to pre-emptively archive it by using

linkcheckerto crawl it, compile a list of all external & internal links, and store them for processing by another archival program (see Archiving URLs for examples). In certain rare circumstances, security tools likenmapcan be useful to examine a mysterious server in more detail: what web server and services does it run, what else might be on it (sometimes interesting things like old anonymous FTP servers turn up), has a website moved between IPs or servers, etc.

Web Pages

With proper use of pre-emptive archiving tools like archiver-bot, fixing linkrot in one’s own pages is much easier, but that leaves other references. Searching for lost web pages is similar to searching for papers:

-

Just Search The Title: if the page title is given, search for the title.

It is a good idea to include page titles in one’s own pages, as well as the URL, to help with future searches, since the URL may be meaningless gibberish on its own, and pre-emptive archiving can fail. HTML supports both

altandtitleparameters in link tags, and, in cases where displaying a title is not desirable (because the link is being used inline as part of normal hypertextual writing), titles can be included cleanly in Markdown documents like this:[inline text description](URL "Title"). -

Clean URLs: check the URL for weirdness or trailing garbage like

?rss=1or?utm_source=feedburner&utm_medium=feed&utm_campaign=Feed%3A+blogspot%2FgJZg+%28Google+AI+Blog%29? Or a variant domain, like amobile.foo.com/m.foo.com/foo.com/amp/URL? Those are all less likely to be findable or archived than the canonical version. -

Domain Site Search: restrict G search to the original domain with

site:, or to related domains -

Time-Limited Search: restrict G search to the original date-range/years.

You can use this to tame overly-general searches. An alternative to the date-range widget is the advanced search syntax, which works (for now): specify numeric range queries using double-dots like

foo 2020..2023(which is useful beyond just years). If this is still too broad, it can always be narrowed down to individual years. -

Switch Engines: try a different search engine: corpuses can vary, and in some cases G tries to be too smart for its own good when you need a literal search; DuckDuckGo (especially for ‘bang’ special searches), Bing, and Yandex are usable alternatives

-

Check Archives: if nowhere on the clearnet, try the Internet Archive (IA) or the Memento meta-archive search engine:

IA is the default backup for a dead URL. If IA doesn’t Just Work, there may be other versions in it:

-

misleading redirects: did the IA ‘helpfully’ redirect you to a much-later-in-time error page? Kill the redirect and check the earliest stored version for the exact URL rather than the redirect. Did the page initially load but then error out/redirect? Disable JS with NoScript and reload.

-

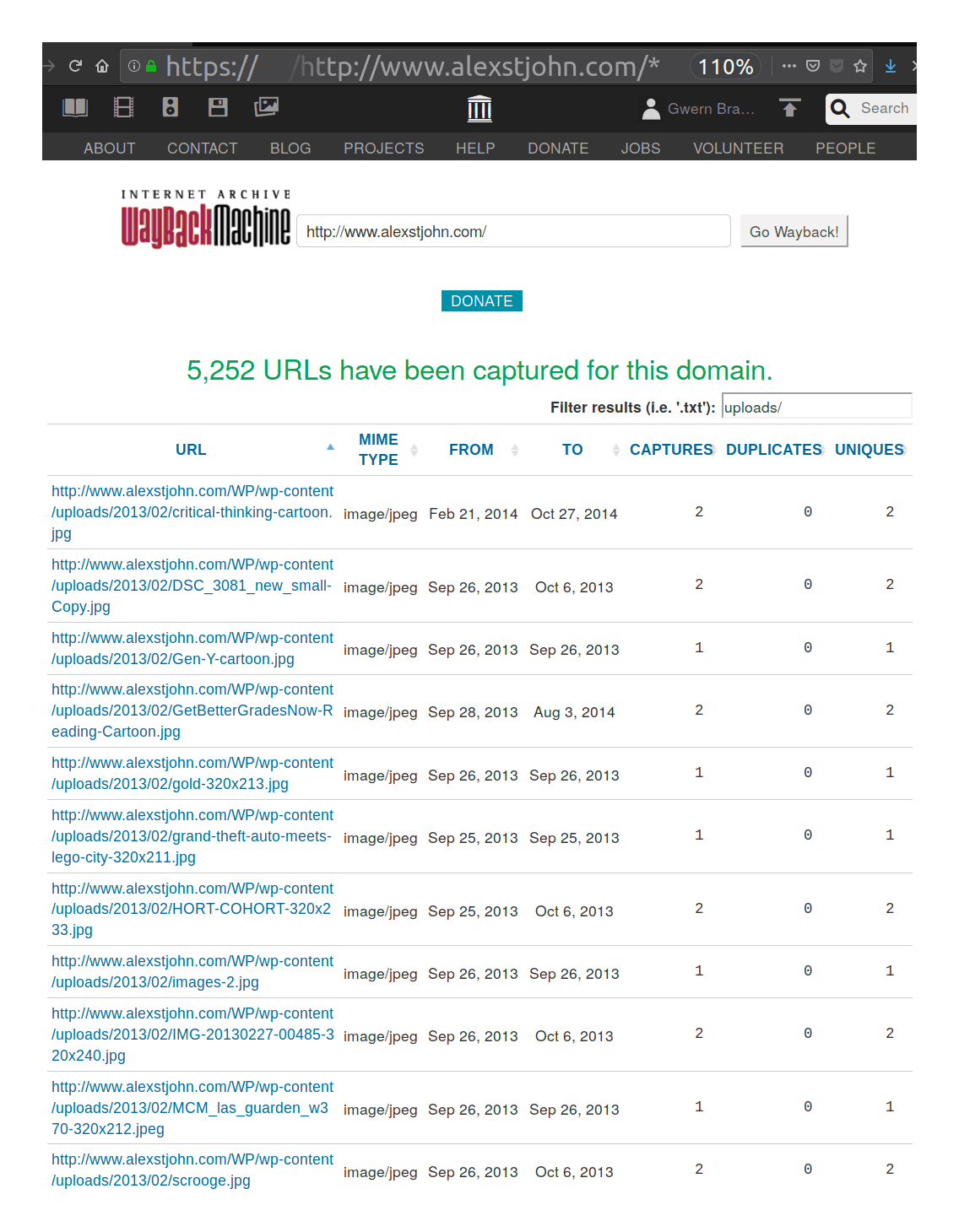

Within-Domain Archives: IA lets you list all URLs with any archived versions, by searching for

URL/*; the list of available URLs may reveal an alternate newer/older URL. It can also be useful to filter by filetype or substring.For example, one might list all URLs in a domain, and if the list is too long and filled with garbage URLs, then using the “Filter results” incremental-search widget to search for “uploads/” on a WordPress blog.15

Screenshot of an oft-overlooked feature of the Internet Archive: displaying all available/archived URLs for a specific domain, filtered down to a subset matching a string like

*uploads/*.-

wayback_machine_downloader(not to be confused with theinternetarchivePython package which provides a CLI interface to uploading files) is a Ruby tool which lets you download whole domains from IA, which can be useful for running a local fulltext search using regexps (a goodgrepquery is often enough), in cases where just looking at the URLs viaURL/*is not helpful. (An alternative which might work iswebsitedownloader.io.)

Example:

gem install --user-install wayback_machine_downloader ~/.gem/ruby/2.7.0/bin/wayback_machine_downloader wayback_machine_downloader --all-timestamps 'https://blog.okcupid.com' -

-

did the domain change, eg. from

www.foo.comtofoo.comorwww.foo.org? Entirely different as far as IA is concerned. -

does the internal evidence of the URL provide any hints? You can learn a lot from URLs just by paying attention and thinking about what each directory and argument means.

-

is this a Blogspot blog? Blogspot is uniquely horrible in that it has versions of each blog for every country domain: a

foo.blogspot.comblog could be under any offoo.blogspot.de,foo.blogspot.au,foo.blogspot.hk,foo.blogspot.jp…16 -

did the website provide RSS feeds?

A little known fact is that Google Reader (GR; October 2005–July 201312ya) stored all RSS items it crawled, so if a website’s RSS feed was configured to include full items, the RSS feed history was an alternate mirror of the whole website, and since GR never removed RSS items, it was possible to retrieve pages or whole websites from it. GR has since closed down, sadly, but before it closed, Archive Team downloaded a large fraction of GR’s historical RSS feeds, and those archives are now hosted on IA. The catch is that they are stored in mega-WARCs, which, for all their archival virtues, are not the most user-friendly format. The raw GR mega-WARCs are difficult enough to work with that I defer an example to the appendix.

-

archive.today: an IA-like mirror. (Sometimes bypasses paywalls or has snapshots other services do not; I strongly recommend against treating archive.today/archive.is/etc as anything but a temporary mirror to grab snapshots from, as it has no long-term plans.) -

any local archives, such as those made with my

archiver-bot -

Google Cache (GC): no longer extant as of 2024-09-24.

-

Books

Digital

E-books are rarer and harder to get than papers, although the situation has improved vastly since the early 2000s. To search for books online:

-

More Straightforward: book searches tend to be faster and simpler than paper searches, and to require less cleverness in search query formulation, perhaps because they are rarer online, much larger, and have simpler titles, making it easier for search engines.

Search G, not GS, for books:

No Books in Google Scholar

Book fulltexts usually don’t show up in GS (for unknown reasons). You need to check G when searching for books.

To double-check, you can try a

filetype:pdfsearch; then check LG. Typically, if the main title + author doesn’t turn it up, it’s not online. (In some cases, the author order is reversed, or the title:subtitle are reversed, and you can find a copy by tweaking your search, but these are rare.). -

IA: the Internet Archive has many books scanned which do not appear easily in search results (poor SEO?).

-

If an IA hit pops up in a search, always check it; the OCR may offer hints as to where to find it. If you don’t find anything or the provided, try doing an IA site search in G (not the IA built-in search engine), eg.

book title site:archive.org. -

DRM workarounds: if it is on IA but the IA version is DRMed and is only available for “checkout”, you can jailbreak it.

Check the book out for the full period, 14 days. Download the PDF (not EPUB) version to Adobe Digital Elements version ≤4.0 (which can be run in Wine on Linux), and then import it to Calibre with the De-DRM plugin, which will produce a DRM-free PDF inside Calibre’s library. (Getting De-DRM running can be tricky, especially under Linux. I wound up having to edit some of the paths in the Python files to make them work with Wine. It also appears to fail on the most recent Google Play ebooks, ~2021.) You can then add metadata to the PDF & upload it to LG17. (LG’s versions of books are usually better than the IA scans, but if they don’t exist, IA’s is better than nothing.)

-

-

Google Play: use the same PDF DRM as IA, can be broken same way

-

HathiTrust also hosts many book scans, which can be searched for clues or hints or jailbroken.

HathiTrust blocks whole-book downloads but it’s easy to download each page in a loop and stitch them together, for example:

for i in {1..151} do if [[ ! -s "$i.pdf" ]]; then wget "https://babel.hathitrust.org/cgi/imgsrv/download/pdf?id=mdp.39015050609067;orient=0;size=100;seq=$i;attachment=0" \ -O "$i.pdf" sleep 20s fi done pdftk *.pdf cat output 1957-super-scientificcareersandvocationaldevelopmenttheory.pdf exiftool -Title="Scientific Careers and Vocational Development Theory: A review, a critique and some recommendations" \ -Date=1957 -Author="Donald E. Super, Paul B. Bachrach" -Subject="psychology" \ -Keywords="Bureau Of Publications (Teachers College Columbia University), LCCCN: 57-12336, National Science Foundation, public domain, \ https://babel.hathitrust.org/cgi/pt?id=mdp.39015050609067;view=1up;seq=1 https://psycnet.apa.org/record/1959-04098-000" \ 1957-super-scientificcareersandvocationaldevelopmenttheory.pdfAnother example of this would be the Wellcome Library; while looking for An Investigation Into The Relation Between Intelligence And Inheritance, 1931, I came up dry until I checked one of the last search results, a “Wellcome Digital Library” hit, on the slim off-chance that, like the occasional Chinese/Indian library website, it just might have fulltext. As it happens, it did—good news? Yes, but with a caveat: it provides no way to download the book! It provides OCR, metadata, and individual page-image downloads all under CC-BY-NC-SA (so no legal problems), but… not the book. (The OCR is also unnecessarily zipped, so that is why Google ranked the page so low and did not show any revealing excerpts from the OCR transcript: because it’s hidden in an opaque archive to save a few kilobytes while destroying SEO.) Examining the download URLs for the highest-resolution images, they follow an unfortunate schema:

-

https://dlcs.io/iiif-img/wellcome/1/5c27d7de-6d55-473c-b3b2-6c74ac7a04c6/full/2212,/0/default.jpg -

https://dlcs.io/iiif-img/wellcome/1/d514271c-b290-4ae8-bed7-fd30fb14d59e/full/2212,/0/default.jpg -

etc

Instead of being sequentially numbered 1–90 or whatever, they all live under a unique hash or ID. Fortunately, one of the metadata files, the ‘manifest’ file, provides all of the hashes/IDs (but not the high-quality download URLs). Extracting the IDs from the manifest can be done with some quick

sed&trstring processing, and fed into another shortwgetloop for downloadgrep -F '@id' manifest\?manifest\=https\:%2F%2Fwellcomelibrary.org%2Fiiif%2Fb18032217%2Fmanifest | \ sed -e 's/.*imageanno\/\(.*\)/\1/' | grep -E -v '^ .*' | tr -d ',' | tr -d '"' # " # bf23642e-e89b-43a0-8736-f5c6c77c03c3 # 334faf27-3ee1-4a63-92d9-b40d55ab72ad # 5c27d7de-6d55-473c-b3b2-6c74ac7a04c6 # d514271c-b290-4ae8-bed7-fd30fb14d59e # f85ef645-ec96-4d5a-be4e-0a781f87b5e2 # a2e1af25-5576-4101-abee-96bd7c237a4d # 6580e767-0d03-40a1-ab8b-e6a37abe849c # ca178578-81c9-4829-b912-97c957b668a3 # 2bd8959d-5540-4f36-82d9-49658f67cff6 # ...etc I=1 for HASH in $HASHES; do wget "https://dlcs.io/iiif-img/wellcome/1/$HASH/full/2212,/0/default.jpg" -O $I.jpg I=$((I+1)) doneAnd then the 59MB of JPGs can be cleaned up as usual with

gscan2pdf(empty pages deleted, tables rotated, cover page cropped, all other pages binarized), compressed/OCRed withocrmypdf, and metadata set withexiftool, producing a readable, downloadable, highly-search-engine-friendly 1.8MB PDF. -

-

remember the Analog Hole works for papers/books too:

if you can find a copy to read, but cannot figure out how to download it directly because the site uses JS or complicated cookie authentication or other tricks, you can always exploit the ‘analogue hole’—fullscreen the book in high resolution & take screenshots of every page; then crop, OCR etc. This is tedious but it works. And if you take screenshots at sufficiently high resolution, there will be relatively little quality loss. (This works better for books that are scans than ones born-digital.)

Physical

Expensive but feasible. Books are something of a double-edged sword compared to papers/theses. On the one hand, books are much more often unavailable online, and must be bought offline, but at least you almost always can buy used books offline without much trouble (and often for <$12.56$102019 total); on the other hand, while paper/theses are often available online, when one is not unavailable, it’s usually very unavailable, and you’re stuck (unless you have a university ILL department backing you up or are willing to travel to the few or only universities with paper or microfilm copies).

Purchasing from used book sellers:

-

Sellers:

-

used book search engines: Google Books/find-more-books.com: a good starting point for seller links; if buying from a marketplace like AbeBooks/Amazon/Barnes & Noble, it’s worth searching the seller to see if they have their own website, which is potentially much cheaper. They may also have multiple editions in stock.

-

bad: eBay & Amazon are often bad, due to high-minimum-order+S&H and sellers on Amazon seem to assume Amazon buyers are easily rooked; but can be useful in providing metadata like page count or ISBN or variations on the title

-

good: AbeBooks, Thrift Books, Better World Books, B&N, Discover Books.

Note: on AbeBooks, international orders can be useful (especially for behavioral genetics or psychology books) but be careful of international orders with your credit card—many debit/credit cards will fail on international orders and trigger a fraud alert, and PayPal is not accepted.

-

-

Price Alerts: if a book is not available or too expensive, set price watches: AbeBooks supports email alerts on stored searches, and Amazon can be monitored via CamelCamelCamel (remember the CCC price alert you want is on the used third-party category, as new books are more expensive, less available, and unnecessary).

Scanning:

-

Destructive Vs Non-Destructive: the fundamental dilemma of book scanning—destructively debinding books with a razor or guillotine cutter works much better & is much less time-consuming than spreading them on a flatbed scanner to scan one-by-one18, because it allows use of a sheet-fed scanner instead, which is easily 5x faster and will give higher-quality scans (because the sheets will be flat, scanned edge-to-edge, and much more closely aligned), but does, of course, require effectively destroying the book.

-

Tools:

-

cutting: For simple debinding of a few books a year, an X-acto knife/razor is good (avoid the ‘triangle’ blades, get curved blades intended for large cuts instead of detail work).

Once you start doing more than one a month, it’s time to upgrade to a guillotine blade paper cutter (a fancier swinging-arm paper cutter, which uses a two-joint system to clamp down and cut uniformly).

A guillotine blade can cut chunks of 200 pages easily without much slippage, so for books with more pages, I use both: an X-acto to cut along the spine and turn it into several 200-page chunks for the guillotine cutter.

-

scanning: at some point, it may make sense to switch to a scanning service like 1DollarScan (1DS has acceptable quality for the black-white scans I have used them for thus far, but watch out for their nickel-and-diming fees for OCR or “setting the PDF title”; these can be done in no time yourself using

gscan2pdf/exiftool/ocrmypdfand will save a lot of money as they, amazingly, bill by 100-page units). Books can be sent directly to 1DS, reducing logistical hassles.

-

-

Clean Up: after scanning, crop/threshold/OCR/add metadata

-

Adding metadata: same principles as papers. While more elaborate metadata can be added, like bookmarks, I have not experimented with those yet.

-

-

File format: PDF, not DjVu

Despite being a worse format in many respects, I now recommend PDF and have stopped using DjVu for new scans19 and have converted my old DjVu files to PDF.

-

Uploading: to LibGen, usually, and Gwern.net sometimes. For backups, filelockers like Dropbox, Mega, MediaFire, or Google Drive are good. I usually upload 3 copies including LG. I rotate accounts once a year, to avoid putting too many files into a single account. [I discourage reliance on IA links.)

Do Not Use Google Docs/Scribd/Dropbox/IA/etc for Long-Term Documents

‘Document’ websites like Google Docs (GD) should be strictly avoided as primary hosting. GD does not appear in G/GS, dooming a document to obscurity, and Scribd is ludicrously user-hostile with changing dark patterns. Such sites cannot be searched, scraped, downloaded reliably, clipped, used on many devices, archived20, or counted on for the long haul. (For example, Google Docs has made many documents ‘private’, breaking public links, to the surprise of even the authors when I contact them about it, for unclear reasons.)

Such sites may be useful for collaboration or surveys, but should be regarded as strictly temporary working files, and moved to clean static HTML/PDF/XLSX hosted elsewhere as soon as possible.

-

Hosting: hosting papers is easy but books come with risk:

Books can be dangerous; in deciding whether to host a book, my rule of thumb is host only books pre-2000 and which do not have Kindle editions or other signs of active exploitation and is effectively an ‘orphan work’.

As of 2019-10-23, hosting 4090 files over 9 years (very roughly, assuming linear growth, <6.7 million document-days of hosting: 3763 × 0.5 × 8 × 365.25 = 6722426), I’ve received 4 takedown orders: a behavioral genetics textbook (201312ya), The Handbook of Psychopathy (200520ya), a recent meta-analysis paper ( et al 2016), and a CUP DMCA takedown order for 27 files. I broke my rule of thumb to host the 2 books (my mistake), which leaves only the 1 paper, which I think was a fluke. So, as long as one avoids relatively recent books, the risk should be minimal.

Case Studies

Case study examples of using Internet search tips in action.

See Also

External Links

-

“A look at search engines with their own indexes” (reviews alternatives like Kagi or Mojeek or Stract)

-

“Million Short” (search engine overlay which removes top 100/1k/10k/100k/1m domains from hits, exposing obscurer sites which may be highly novel)

-

Practice G search problems: “A Google A Day”; Google Power Searching course (OK for beginners but you may want to skip the videos in favor of the slides)

-

Archive Team’s Archive Bot

-

“The Mystery of the Bloomfield Bridge”, Tyler Vigen

Appendix

Searching the Google Reader Archives

A 2015 tutorial on how to do manual searches of the 201312ya Google Reader archives on the Internet Archive. Google Reader provides fulltext mirrors of many websites which are long gone and not otherwise available even in the IA; however, the Archive Team archives are extremely user-unfriendly and challenging to use even for programmers.

I explain how to find & extract specific websites.

Note: now largely obsoleted by querying IA’s Wayback Machine for the GR RSS URL.

A little-known way to ‘undelete’ a pre-2013 blog or website is to use Google Reader (GR). Unusual archive: Google Reader. GR crawled regularly almost all blogs’ RSS feeds; RSS feeds often contain the fulltext of articles. If a blog author writes an article, the fulltext is included in the RSS feed, GR downloads it, and then the author changes their mind and edits or deletes it, GR would redownload the new version but it would continue to show the version the old version as well (you would see two versions, chronologically). If the author blogged regularly and so GR had learned to check regularly, it could hypothetically grab different edited versions, even, not just ones with weeks or months in between. Assuming that GR did not, as it sometimes did for inscrutable reasons, stop displaying the historical archives and only showed the last 90 days or so to readers; I was never able to figure out why this happened or if indeed it really did happen and was not some sort of UI problem. Regardless, if all went well, this let you undelete an article, albeit perhaps with messed up formatting or something. Sadly, GR was closed back on 2013-07-01, and you cannot simply log in and look for blogs.

Archive Team mirrored Google Reader. However, before it was closed, Archive Team launched a major effort to download as much of GR as possible. So in that dump, there may be archives of all of a random blog’s posts. Specifically: if a GR user subscribed to it; if Archive Team knew about it; if they requested it in time before closure; and if GR did keep full archives stretching back to the first posting.

AT mirror is raw binary data. Downside: the Archive Team dump is not in an easily browsed format, and merely figuring out what it might have is difficult. In fact, it’s so difficult that before researching Craig Wright in November–December 2015, I never had an urgent enough reason to figure out how to get anything out of it before, and I’m not sure I’ve ever seen anyone actually use it before; Archive Team takes the attitude that it’s better to preserve the data somehow and let posterity worry about using it. (There is a site which claimed to be a frontend to the dump but when I tried to use it, it was broken & still is in April 2024.)

Extracting

Find the right archive. The 9TB of data is stored in ~69 opaque compressed WARC archives. 9TB is a bit much to download and uncompress to look for one or two files, so to find out which WARC you need, you have to download the ~69 CDX indexes which record the contents of their respective WARC, and search them for the URLs you are interested in. (They are plain text so you can just grep them.)

Locations

In this example, we will look at the main blog of Craig Wright, gse-compliance.blogspot.com. (Another blog, security-doctor.blogspot.com, appears to have been too obscure to be crawled by GR.) To locate the WARC with the Wright RSS feeds, download the the master index. To search:

for file in *.gz; do echo $file; zcat $file | grep -F -e 'gse-compliance' -e 'security-doctor'; done

# com,google/reader/api/0/stream/contents/feed/http:/gse-compliance.blogspot.com/atom.xml?client=\

# archiveteam&comments=true&likes=true&n=1000&r=n 20130602001238 https://www.google.com/reader/\

# api/0/stream/contents/feed/http%3A%2F%2Fgse-compliance.blogspot.com%2Fatom.xml?r=n&n=1000&\

# likes=true&comments=true&client=ArchiveTeam unk - 4GZ4KXJISATWOFEZXMNB4Q5L3JVVPJPM - - 1316181\

# 19808229791 archiveteam_greader_20130604001315/greader_20130604001315.megawarc.warc.gz

# com,google/reader/api/0/stream/contents/feed/http:/gse-compliance.blogspot.com/feeds/posts/default?\

# alt=rss?client=archiveteam&comments=true&likes=true&n=1000&r=n 20130602001249 https://www.google.\

# com/reader/api/0/stream/contents/feed/http%3A%2F%2Fgse-compliance.blogspot.com%2Ffeeds%2Fposts%2Fdefault\

# %3Falt%3Drss?r=n&n=1000&likes=true&comments=true&client=ArchiveTeam unk - HOYKQ63N2D6UJ4TOIXMOTUD4IY7MP5HM\

# - - 1326824 19810951910 archiveteam_greader_20130604001315/greader_20130604001315.megawarc.warc.gz

# com,google/reader/api/0/stream/contents/feed/http:/gse-compliance.blogspot.com/feeds/posts/default?\

# client=archiveteam&comments=true&likes=true&n=1000&r=n 20130602001244 https://www.google.com/\

# reader/api/0/stream/contents/feed/http%3A%2F%2Fgse-compliance.blogspot.com%2Ffeeds%2Fposts%2Fdefault?\

# r=n&n=1000&likes=true&comments=true&client=ArchiveTeam unk - XXISZYMRUZWD3L6WEEEQQ7KY7KA5BD2X - - \

# 1404934 19809546472 archiveteam_greader_20130604001315/greader_20130604001315.megawarc.warc.gz

# com,google/reader/api/0/stream/contents/feed/http:/gse-compliance.blogspot.com/rss.xml?client=archiveteam\

# &comments=true&likes=true&n=1000&r=n 20130602001253 https://www.google.com/reader/api/0/stream/contents\

# /feed/http%3A%2F%2Fgse-compliance.blogspot.com%2Frss.xml?r=n&n=1000&likes=true&comments=true\

# &client=ArchiveTeam text/html 404 AJSJWHNSRBYIASRYY544HJMKLDBBKRMO - - 9467 19812279226 \

# archiveteam_greader_20130604001315/greader_20130604001315.megawarc.warc.gzUnderstanding the output: the format is defined by the first line, which then can be looked up:

-

the format string is:

CDX N b a m s k r M S V g; which means here:-

N: massaged url -

b: date -

a: original url -

m: MIME type of original document -

s: response code -

k: new style checksum -

r: redirect -

M: meta tags (AIF) -

S: ? -

V: compressed arc file offset -

g: file name

-

Example:

(com,google)/reader/api/0/stream/contents/feed/http:/gse-compliance.blogspot.com/atom.xml\

?client=archiveteam&comments=true&likes=true&n=1000&r=n 20130602001238 https://www.google.com\

/reader/api/0/stream/contents/feed/http%3A%2F%2Fgse-compliance.blogspot.com%2Fatom.xml?r=n\

&n=1000&likes=true&comments=true&client=ArchiveTeam unk - 4GZ4KXJISATWOFEZXMNB4Q5L3JVVPJPM\

- - 1316181 19808229791 archiveteam_greader_20130604001315/greader_20130604001315.megawarc.warc.gzConverts to:

-

massaged URL:

(com,google)/reader/api/0/stream/contents/feed/http:/gse-compliance.blogspot.com/atom.xml?client=archiveteam&comments=true&likes=true&n=1000&r=n -

date: 20130602001238

-

original URL:

https://www.google.com/reader/api/0/stream/contents/feed/http%3A%2F%2Fgse-compliance.blogspot.com%2Fatom.xml?r=n&n=1000&likes=true&comments=true&client=ArchiveTeam -

MIME type:

unk[unknown?] -

response code:—[none?]

-

new-style checksum:

4GZ4KXJISATWOFEZXMNB4Q5L3JVVPJPM -

redirect:—[none?]

-

meta tags:—[none?]

-

S [? maybe length?]: 1316181

-

compressed arc file offset: 19808229791 (19,808,229,791; so somewhere around 19.8GB into the mega-WARC)

-

filename:

archiveteam_greader_20130604001315/greader_20130604001315.megawarc.warc.gz

As of 2024, the WARCs have been processed into Wayback Machine and the original google.com/reader/api/0/ RSS URLs are now searchable, so one could look the old GR RSS up like a normal URL and do the normal broader searches like searching for all versions

However, in 2015, we had to do it the hard way: extracting directly from the WARC. Knowing the offset theoretically makes it possible to extract directly from the IA copy without having to download and decompress the entire thing… The S & offsets for gse-compliance are:

-

1316181/19808229791

-

1326824/19810951910

-

1404934/19809546472

-

9467/19812279226

So we found hits pointing towards archiveteam_greader_20130604001315 & archiveteam_greader_20130614211457 which we then need to download (25GB each):

wget 'https://archive.org/download/archiveteam_greader_20130604001315/greader_20130604001315.megawarc.warc.gz'

wget 'https://archive.org/download/archiveteam_greader_20130614211457/greader_20130614211457.megawarc.warc.gz'Once downloaded, how do we get the feeds? There are a number of hard-to-use and incomplete tools for working with giant WARCs; I contacted the original GR archiver, ivan, but that wasn’t too helpful.

warcat

I tried using warcat to unpack the entire WARC archive into individual files, and then delete everything which was not relevant:

python3 -m warcat extract /home/gwern/googlereader/...

find ./www.google.com/ -type f -not \( -name "*gse-compliance*" -or -name "*security-doctor*" \) -delete

find ./www.google.com/But this was too slow, and crashed partway through before finishing.

Bug reports:

-

request: extract based on index/length (to avoid using

dd) -

request: extract using regexp (to let one pull out whole domains from GR archives)

A more recent alternative library, which I haven’t tried, is warcio, which may be able to find the byte ranges & extract them.

dd

If we are feeling brave, we can use the offset and presumed length to have dd directly extract byte ranges:

dd skip=19810951910 count=1326824 if=greader_20130604001315.megawarc.warc.gz of=2.gz bs=1

# 1326824+0 records in

# 1326824+0 records out

# 1326824 bytes (1.3 MB) copied, 14.6218 s, 90.7 kB/s

dd skip=19810951910 count=1326824 if=greader_20130604001315.megawarc.warc.gz of=3.gz bs=1

# 1326824+0 records in

# 1326824+0 records out

# 1326824 bytes (1.3 MB) copied, 14.2119 s, 93.4 kB/s

dd skip=19809546472 count=1404934 if=greader_20130604001315.megawarc.warc.gz of=4.gz bs=1

# 1404934+0 records in

# 1404934+0 records out

# 1404934 bytes (1.4 MB) copied, 15.4225 s, 91.1 kB/s

dd skip=19812279226 count=9467 if=greader_20130604001315.megawarc.warc.gz of=5.gz bs=1

# 9467+0 records in

# 9467+0 records out

# 9467 bytes (9.5 kB) copied, 0.125689 s, 75.3 kB/s

dd skip=19808229791 count=1316181 if=greader_20130604001315.megawarc.warc.gz of=1.gz bs=1

# 1316181+0 records in

# 316181+0 records out

# 1316181 bytes (1.3 MB) copied, 14.6209 s, 90.0 kB/s

gunzip *.gzResults

Success: raw HTML. My dd extraction was successful, and the resulting HTML/RSS could then be browsed with a command like cat *.warc | fold --spaces -width=200 | less. They can probably also be converted to a local form and browsed, although they won’t include any of the site assets like images or CSS/JS, since the original RSS feed assumes you can load any references from the original website and didn’t do any kind of data-URI or mirroring (not, after all, having been intended for archive purposes in the first place…)

-

For example, the

info:operator is entirely useless. Thelink:operator, in almost a decade of me trying it once in a great while, has never returned remotely as many links to my website as Google Webmaster Tools returns for inbound links, and seems to have been disabled entirely at some point.↩︎ -

WP is increasingly out of date & unrepresentative due to increasingly narrow policies about sourcing & preprints, part of its overall deletionist decay, so it’s not a good place to look for references. It is a good place to look for key terminology, though.↩︎

-

When I was a kid, I knew I could just ask my reference librarian to request any book I wanted by providing the unique ID, the ISBN, and there was a physical copy of the book inside the Library of Congress; made sense. I never understood how I was supposed to get these “paper” things my popular science books or newspaper articles would sometimes cite—where was a paper, exactly? If it was published in The Journal of Papers, where did I get this journal? My library only had a few score magazine subscriptions, certainly not all of these Science and Nature and beyond. The bitter answer turns out to be: ‘nowhere’. There is no unique identifier (the majority of papers lack any DOI still), and there is no central repository nor anyone in charge—only a chaotic patchwork of individual libraries and defunct websites. Thus, books tend to be easy to get, but a paper can be a multi-decade odyssey taking one to the depths of the Internet Archive or purchasing from sketchy Chinese websites who hire pirates to infiltrate private databases.↩︎

-

Most search engines will treat any space or separation as an implicit

AND, but I find it helpful to be explicit about it to make sure I’m searching what I think I’m searching.↩︎ -

It also exposes OCR of them all, which can help Google find them—albeit at the cost of you needing to learn ‘OCRese’ in the snippets, so you can recognize when relevant text has been found, but mangled by OCR/layout.↩︎

-

This probably explains part of why no one cites that paper, and those who cite it clearly have not actually read it, even though it invented racial admixture analysis, which, since reinvented by others, has become a major method in medical genetics.↩︎

-

University ILL privileges are one of the most underrated fringe benefits of being a student, if you do any kind of research or hobbyist reading—you can request almost anything you can find in WorldCat, whether it’s an ultra-obscure book or a master’s thesis from 195075ya! Why wouldn’t you make regular use of it‽ Of things I miss from being a student, ILL is near the top.↩︎

-

The complaint and indictment are not necessarily the same thing. An indictment frequently will leave out many details and confine itself to listing what the defendant is accused of. Complaints tend to be much richer in detail. However, sometimes there will be only one and not the other, perhaps because the more detailed complaint has been sealed (possibly precisely because it is more detailed).↩︎

-

Trial testimony can run to hundreds of pages and blow through your remaining PACER budget, so one must be careful. In particular, testimony operates under an interesting & controversial price discrimination system related to how court stenographers report—who are not necessarily paid employees but may be contractors or freelancers—intended to ensure covering transcription costs: the transcript initially may cost hundreds of dollars, intended to extract full value from those who need the trial transcript immediately, such as lawyers or journalists, but then a while later, PACER drops the price to something more reasonable. That is, the first “original” fee costs a fortune, but then “copy” fees are cheaper. So for the US federal court system, the “original”, when ordered within hours of the testimony, will cost <$9.11$7.252019/page but then the second person ordering the same transcript pays only <$1.51$1.22019/page & everyone subsequently <$1.13$0.92019/page, and as further time passes, that drops to <$0.75$0.62019 (and I believe after a few months, PACER will then charge only the standard $0.13$0.12019). So, when it comes to trial transcript on PACER, patience pays off.↩︎

-

I’ve heard that LexisNexis terminals are sometimes available for public use in places like federal libraries or courthouses, but I have never tried this myself.↩︎

-

Curiously, in historical textual criticism of copied manuscripts, it’s the opposite: shorter = truer. But with memories or paraphrases, longer = truer, because those tend to elide details and mutate into catchier versions when the transmitter is not ostensibly exactly copying a text.↩︎

-