Embryo editing for intelligence

A cost-benefit analysis of CRISPR-based editing for intelligence with 2015–2016 state-of-the-art

Embryo Editing

One approach not discussed in Shulman & Bostrom is embryo editing, which has compelling advantages over selection:

- Embryo selection must be done collectively for any meaningful gains, so one must score all viable embryos; editing, by contrast, can potentially be done singly: one edits only the embryo being implanted, and no more if that embryo yields a birth.
  - Further, a failed implantation is a disaster for embryo selection, since it means one must settle for a possibly much lower-scoring embryo with little or no gain while still having paid upfront for selection; edit gains, on the other hand, will be largely uniform across all embryos, so the loss of the first embryo is unfortunate (another set of edits and another implantation will be necessary) but not a major setback.
- Embryo selection suffers from the curse of thin tails and cannot start from a higher mean (except as part of a multi-generational scheme): one takes 2 steps back for every 3 steps forward, so larger gains must be brute-forced with a steeply escalating number of embryos.
- Embryo selection is constrained by the broad-sense heritability and by the number of available embryos.
  - No matter how many GWASes are done on whole genomes or how fancy the algorithms applied, embryo selection will never surpass the upper bound set by a broad-sense heritability of 0.8; the effects of editing, by contrast, have no known upper bound, either within existing population variation (there appear to be thousands of variants, inclusive of all genetic changes) or beyond it, since evolutionarily novel changes could theoretically be made as well[1] (a bacterium or a chimpanzee is, after all, not simply a small human who refuses to talk).
  - Editing is also largely independent of the number of embryos, since each embryo can be edited about as much as any other; as long as one has enough embryos to expect a birth, more embryos make no difference.
- Editing scales linearly and smoothly by going to the root of the problem, rather than difficulty increasing exponentially with the gain. An example:

  As calculated above, the et al 2013 polygenic score can yield a gain no greater than 2.58 IQ points with 1-in-10 selection, and in practice we can hardly expect more than 0.14-0.56 IQ points, due to fewer than 10 embryos usually being viable, among other factors. But with editing, we can do better: the same study reported 3 SNP hits (rs9320913, rs11584700, rs4851266) each predicting +1 month of schooling, the largest effects translating to ~0.5 IQ points (the education proxy makes the exact value a little tricky, but we can cross-check with other GWASes which regressed directly on fluid intelligence, like et al 2015, whose largest hit, rs10457441, does indeed have a reported beta of -0.0324SD or -0.486 IQ points), with fairly balanced frequencies around 50% as well.[2] So one can see immediately that if an embryo is sequenced & found to have the bad variant on any of those SNPs, a single edit has the same gain as the entire embryo selection process! Unlike embryo selection, there is no inherent reason an editing process must stop at one edit; and with two edits, one doesn't even need to sequence in the first place, as the ~50% frequency means that the expected value of blindly editing such a SNP is ~0.25 points, and so two blind edits would have the same gain! Continuing, the gains can stack: taking the 15 top hits from et al 2015's Supplementary Table S3, 15 edits would yield 6.35 points, and combined with the next 15 after that, ~14 points, blowing past the SNP embryo selection upper bounds. Since any given embryo will, on average, already carry the good variant at about half of those SNPs, those edits are unnecessary; that just means that an editing procedure will move on to the next entry down the list and edit that instead (given a budget of 15 edits, one might wind up editing, say, #30). Since the estimated effects do not decline too fast and frequencies are high, this is similar to skipping every other edit, and so the gains are still substantial:

  ```r
  davies2015 <- data.frame(
      Beta=c(-0.0324, 0.0321, -0.0446, -0.032, 0.0329, 0.0315, 0.0312, 0.0312, -0.0311, -0.0315, -0.0314, 0.0305, 0.0309, 0.0306, 0.0305, 0.0293, -0.0292, -0.0292, -0.0292, 0.0292, -0.0292, 0.0292, -0.0291, -0.0293, -0.0293, 0.0292, -0.0296, -0.0293, -0.0291, 0.0296, -0.0313, -0.047, -0.0295, 0.0295, -0.0292, -0.028, -0.0287, -0.029, 0.0289, 0.0302, -0.0289, 0.0289, -0.0281, -0.028, 0.028, -0.028, 0.0281, -0.028, 0.0281, 0.028, 0.028, 0.028, -0.029, 0.029, 0.028, -0.0279, -0.029, 0.0279, -0.0289, -0.027, 0.0289, -0.0282, -0.0286, -0.0278, -0.0279, 0.0289, -0.0288, 0.0278, 0.0314, -0.0324, -0.0288, 0.0278, 0.0287, 0.0278, 0.0277, -0.0287, -0.0268, -0.0287, -0.0287, -0.0272, -0.0277, 0.0277, -0.0286, -0.0276, -0.0267, 0.0276, -0.0277, 0.0284, 0.0277, -0.0276, 0.0337, 0.0276, 0.0286, -0.0279, 0.0282, 0.0275, -0.0269, -0.0277),
      Frequency=c(0.4797, 0.5199, 0.2931, 0.4803, 0.5256, 0.4858, 0.484, 0.4858, 0.4791, 0.4802, 0.4805, 0.487, 0.528, 0.5018, 0.5196, 0.5191, 0.481, 0.481, 0.4807, 0.5191, 0.4808, 0.5221, 0.4924, 0.3898, 0.3897, 0.5196, 0.3901, 0.3897, 0.4755, 0.4861, 0.6679, 0.1534, 0.3653, 0.6351, 0.6266, 0.4772, 0.3747, 0.3714, 0.6292, 0.6885, 0.668, 0.3319, 0.3703, 0.3696, 0.6307, 0.3695, 0.6255, 0.3695, 0.3559, 0.6306, 0.6305, 0.6309, 0.316, 0.684, 0.631, 0.3692, 0.3143, 0.631, 0.316, 0.4493, 0.6856, 0.6491, 0.6681, 0.3694, 0.3686, 0.6845, 0.3155, 0.6314, 0.2421, 0.7459, 0.3142, 0.3606, 0.6859, 0.6315, 0.6305, 0.3157, 0.5364, 0.3144, 0.3141, 0.5876, 0.3686, 0.6314, 0.3227, 0.3695, 0.5359, 0.6305, 0.3728, 0.3318, 0.3551, 0.3695, 0.2244, 0.6304, 0.6856, 0.6482, 0.6304, 0.6304, 0.4498, 0.6469))
  # Editing always moves towards the beneficial allele, so only effect magnitudes matter
  davies2015$Beta <- abs(davies2015$Beta)

  # Upper bound: edit the top 30 hits, converting SD units to IQ points (x15)
  sum(head(davies2015$Beta, n=30)) * 15
  # [1] 13.851

  # Each SNP needs an edit only if the embryo carries the bad variant, drawn here with
  # probability equal to that SNP's own Frequency (one independent draw per SNP);
  # the edit budget is spent on the first SNPs in the list which need editing.
  editSample <- function(editBudget) {
      needsEdit <- rbinom(nrow(davies2015), 1, prob=davies2015$Frequency) == 1
      head(davies2015$Beta[needsEdit], n=editBudget)
  }
  mean(replicate(1000, sum(editSample(30) * 15)))
  # close to, but somewhat below, the 13.851 upper bound; varies slightly between runs
  ```
- Editing can be done on low-frequency or rare variants, whose effects are known but which will not be present among the embryos in most selection instances.

For example, George Church lists 10 rare mutations of large effect that may be worth editing into people:

To which I would add: sleep duration, quality, morningness-eveningness, and resistance to sleep deprivation ( et al 2012) are, like most traits, heritable. The extreme case is that of "short-sleepers", the ~1% of the population who normally sleep 3-6h; they often mention a parent who was also a short-sleeper and short sleep starting in childhood, report that 'over'sleeping is unpleasant, do not fall asleep faster, & don't sleep excessively more on weekends (indicating they are not merely chronically sleep-deprived), and are anecdotally described as highly energetic multi-taskers, thin, with positive attitudes & high pain thresholds ( et al 2001), without any known health effects or downsides in humans[4] or mice (aside from, presumably, greater caloric expenditure).

Some instances of short sleep are due to DEC2: a variant was found in short-sleepers vs controls (6.25h vs 8.37h, -127 minutes), with the effect confirmed in knockout mice ( et al 2010); another short-sleep variant was identified in a discordant twin pair, with an effect of -64 minutes ( et al 2014). DEC2/BHLHE41 SNPs are also rare (for example, 3 such SNPs have frequencies of 0.08%, 3%, & 5%). Hence, selection would be almost entirely ineffective, but editing would be easy.

As far as costs and benefits go, being able to stay awake an additional 127 minutes a day is equivalent to living an additional 7 years, and 191 minutes (both variants combined) to 11 years; to negate that, any side-effect would have to be tantamount to lifelong tobacco smoking.
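The 'extra years of life' figures can be reproduced with a one-line calculation. This is a minimal sketch, assuming an ~80-year lifespan and counting the extra waking minutes against the full 24-hour day; the text does not state its exact assumptions, and `extraYears` is a hypothetical helper name.

```r
# Hypothetical helper: convert extra waking minutes per day into equivalent
# extra years of life, ASSUMING an 80-year lifespan and measuring the gain
# against the full 24-hour (1,440-minute) day.
extraYears <- function(extraMinutesPerDay, lifespanYears = 80) {
    extraMinutesPerDay / (24 * 60) * lifespanYears
}

round(extraYears(127))      # DEC2 variant alone: ~7 years
round(extraYears(127 + 64)) # both short-sleep variants (191 minutes): ~11 years
```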

The largest disadvantage of editing, and the largest advantage of embryo selection, is that selection relies on proven, well-understood, known-priced PGD technology already in use for other purposes, while editing has until recently been science fiction, not fact.
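The 'thin tails' point in the list above can be made concrete: the expected best of n embryos, measured on a standardized polygenic score, grows only about logarithmically with n (asymptotically like sqrt(2 ln n)). A minimal sketch, assuming the embryos' scores are independent standard normals (a simplification), computed by numerical integration; `expectedMax` is a hypothetical helper.

```r
# Expected maximum of n independent standard-normal scores:
# E[max] = integral of x * n * dnorm(x) * pnorm(x)^(n-1) dx
# (limits of +/-10 SD lose nothing measurable)
expectedMax <- function(n) {
    integrate(function(x) x * n * dnorm(x) * pnorm(x)^(n - 1),
              lower = -10, upper = 10)$value
}

# Each 10x increase in embryos buys a smaller gain, in SDs
round(sapply(c(2, 10, 100, 1000), expectedMax), 2)
# [1] 0.56 1.54 2.51 3.24
```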

Genome Synthesis

The cost of CRISPR editing will scale roughly with the number of edits: 100 edits will cost 10x as much as 10 edits. It may even scale superlinearly if each edit makes the next edit more difficult. This poses challenges to profitable editing, since the marginal gain of each edit keeps decreasing as the SNPs with the largest effect sizes are edited first; it's hard to see how 500 or 1000 edits would be profitable. As with the daunting cost of iterated embryo selection (IES), where IES is done only once or a few times and the resulting gametes are then distributed en masse to prospective parents to amortize per-child costs to small amounts, one could imagine doing the same thing for a heavily CRISPR-edited embryo.

But at some point, doing many edits raises the question: why bother with the wild-type genome at all? Couldn't you just create a whole human genome from scratch, incorporating every possible edit? Synthesize a whole genome's DNA and incorporate all the edits one wishes: in the design phase, take the GWASes for a wide variety of traits and set each SNP, no matter how weakly estimated, to the positive direction; when copying in regions containing rare variants, treat those variants as probably harmful and overwrite them with the modal human base-pairs at those positions, for systematic health benefits across most diseases; or, to imitate iterated embryo selection (which exploits the tagging power of SNPs to pull in beneficial rare variants), copy over the haplotypes for beneficial SNPs which might be tagging a rare variant. Between erasing mutation load and exploiting all common variants simultaneously, the result could be a staggering phenotype.

DNA synthesis, building a strand of DNA base-pair by base-pair, has long been done, but is generally limited to a few hundred BP, far less than the 23 human chromosomes' collective ~3.3 billion BP. Past work in synthesizing genomes has included:

- Craig Venter's minimal bacterium in 2008, with 582,970 BP: https://www.nature.com/articles/news.2008.522
- 1.1 million BP in 2010: https://www.nature.com/articles/news.2010.253
- 483,000 BP and 531,000 BP in 2016: https://www.nature.com/articles/531557a (spending somewhere around $40m on these projects)
- 272,871 BP in 2014 (1 yeast chromosome; 90k BP cost $50k at the time), with plans for synthesizing the whole yeast genome within 5 years: https://www.nature.com/articles/nature.2014.14941 https://science.sciencemag.org/content/290/5498/1972 https://science.sciencemag.org/content/329/5987/52 https://science.sciencemag.org/content/342/6156/357 https://science.sciencemag.org/content/333/6040/348
- 2,750,000 BP in 2016 for E. coli: https://www.nature.com/articles/nature.2016.20451; "Design, synthesis, and testing toward a 57-codon genome", et al 2016: https://science.sciencemag.org/content/353/6301/819

https://www.quora.com/How-many-base-pairs-of-DNA-can-I-synthesize-for-1-dollar

The biggest chromosome is #1, with 8.1% of the base-pairs (249,250,621); the smallest is #21, with 1.6% (48,129,895). DNA synthesis prices drop each year in an exponential decline (if not remotely as fast as the DNA sequencing cost curve), and so 2016 synthesis costs have reached <$0.30/BP; let's say $0.25/BP.

| Chromosome | Length (base-pairs) | Fraction of genome | Synthesis cost at $0.25/BP |
|---|---|---|---|
| 1 | 249,250,621 | 0.081 | $62,312,655 |
| 2 | 243,199,373 | 0.079 | $60,799,843 |
| 3 | 198,022,430 | 0.064 | $49,505,608 |
| 4 | 191,154,276 | 0.062 | $47,788,569 |
| 5 | 180,915,260 | 0.058 | $45,228,815 |
| 6 | 171,115,067 | 0.055 | $42,778,767 |
| 7 | 159,138,663 | 0.051 | $39,784,666 |
| 8 | 146,364,022 | 0.047 | $36,591,006 |
| 9 | 141,213,431 | 0.046 | $35,303,358 |
| 10 | 135,534,747 | 0.044 | $33,883,687 |
| 11 | 135,006,516 | 0.044 | $33,751,629 |
| 12 | 133,851,895 | 0.043 | $33,462,974 |
| 13 | 115,169,878 | 0.037 | $28,792,470 |
| 14 | 107,349,540 | 0.035 | $26,837,385 |
| 15 | 102,531,392 | 0.033 | $25,632,848 |
| 16 | 90,354,753 | 0.029 | $22,588,688 |
| 17 | 81,195,210 | 0.026 | $20,298,802 |
| 18 | 78,077,248 | 0.025 | $19,519,312 |
| 19 | 59,128,983 | 0.019 | $14,782,246 |
| 20 | 63,025,520 | 0.020 | $15,756,380 |
| 21 | 48,129,895 | 0.016 | $12,032,474 |
| 22 | 51,304,566 | 0.017 | $12,826,142 |
| X | 155,270,560 | 0.050 | $38,817,640 |
| Y | 59,373,566 | 0.019 | $14,843,392 |
| total | 3,095,693,981 | 1.000 | $773,923,495 |

So the synthesis of one genome in 2016, assuming no economies of scale or further improvement, would come in at ~$773m. This is a staggering but finite and even feasible amount: the original Human Genome Project cost ~$3b, and other large science projects like the LHC, Manhattan Project, ITER, Apollo Program, ISS, the National Children’s Study etc have cost many times what 1 human genome would.
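The table's cost column is simply length × $0.25. A minimal sketch recomputing it from the listed lengths (copied from the table; the $0.25/BP price is the text's assumption). Note that the 24 listed lengths sum to 3,095,677,412 BP, 16,569 BP less than the total row; that gap matches the length of the mitochondrial genome, which the total row presumably includes, and is worth only ~$4k at this price.

```r
# Chromosome lengths (BP) as listed in the table above
chromosomeLength <- c(249250621, 243199373, 198022430, 191154276, 180915260,
                      171115067, 159138663, 146364022, 141213431, 135534747,
                      135006516, 133851895, 115169878, 107349540, 102531392,
                       90354753,  81195210,  78077248,  59128983,  63025520,
                       48129895,  51304566, 155270560,  59373566)
names(chromosomeLength) <- c(1:22, "X", "Y")

costPerBP     <- 0.25  # assumed 2016 synthesis price, $/BP
synthesisCost <- chromosomeLength * costPerBP
fraction      <- chromosomeLength / sum(chromosomeLength)

round(synthesisCost[c("1", "21")])  # largest and smallest chromosomes
sum(chromosomeLength)               # BP across the 24 listed chromosomes
round(sum(synthesisCost))           # ~$774m for one copy of each
```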

https://en.wikipedia.org/wiki/Genome_Project-Write https://www.nature.com/articles/nature.2016.20028 /doc/genetics/genome-synthesis/2016-boeke.pdf https://www.science.org/doi/10.1126/science.aaf6850 https://www.nytimes.com/2016/06/03/science/human-genome-project-write-synthetic-dna.html https://diyhpl.us/wiki/transcripts/2017-01-26-george-church/ https://www.wired.com/story/live-forever-synthetic-human-genome/ https://medium.proto.life/andrew-hessel-human-genome-project-write-d15580dd0885 “Is the World Ready for Synthetic People?: Stanford bioengineer Drew Endy doesn’t mind bringing dragons to life. What really scares him are humans.” https://medium.com/neodotlife/q-a-with-drew-endy-bde0950fd038 https://www.chemistryworld.com/features/step-by-step-synthesis-of-dna/3008753.article

Church is optimistic: maybe even $100k/genome by 2037. From "Humans 2.0: these geneticists want to create an artificial genome by synthesising our DNA; Scientists intend to have fully synthesised the genome in a living cell - which would make the material functional - within ten years, at a projected cost of $1 billion" (https://www.wired.co.uk/article/human-genome-synthesise-dna):

> But these are the "byproducts" of HGP-Write, in Hessel's view: the project's true purpose is to create the impetus for technological advances that will lead to these long-term benefits. "Since all these [synthesis] technologies are exponentially improving, we should keep pushing that improvement rather than just turning the crank blindly and expensively," Church says. In 20 years, this could cut the cost of synthesising a human genome to $100,000, compared to the $12 billion estimated a decade ago.

The benefit of this investment would be to bypass the death by a thousand cuts of CRISPR editing and create a genome with an arbitrary number of edits on an arbitrary number of traits for a fixed upfront cost. Unlike multiple selection, one would not need to trade off multiple traits against each other (except where pleiotropy forces it); unlike editing, one would not be limited to making only edits whose marginal expected value exceeds the cost of 1 edit. Synthesizing a fresh genome for each child will be out of the question for a long time to come, so genome synthesis is like IES in amortizing its cost over many prospective parents.

The "2013 Assisted Reproductive Technology National Summary Report" says ~10% of IVF cycles use donor eggs, and reports a total of 67,996 infants, implying that >6.7k infants were conceived with donor eggs, embryos, or sperm (sperm is not covered by that report) and so were only half or less related to their parents. What immediate 1-year return over 6.7k infants would justify spending $773m? Considering just the low estimate of IQ at $3,270/point and no other traits, that would translate to (773m/6.7k) / 3270 = 35 IQ points per infant or, at an average IQ gain of 0.1 points per edit, the equivalent of 350 causal edits. This is doable. If we allow amortization at a high discount rate of 5% and reuse the genome indefinitely for each year's crop of 6.7k infants, then we need at least x IQ points where ((x*3270*6700) / log(1.05)) - 773000000 >= 0, i.e. x >= 1.72, or at ~0.1 points per edit, 18 edits. We could also synthesize only 1 chromosome and pay much less upfront (but at the cost of a lower upper bound, as GCTA heritability/length regressions and GWAS polygenic score results indicate that intelligence and many other complex traits are spread very evenly over the genome, so each chromosome will harbor variants in proportion to its length).
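The break-even arithmetic can be checked directly. A minimal sketch using the text's inputs ($773m per genome, 6,700 infants/year, $3,270 per IQ point, 0.1 points per causal edit) and its perpetuity formula of dividing the annual value by log(1.05); the variable names are illustrative.

```r
genomeCost     <- 773e6  # one synthesized genome at 2016 prices
infantsPerYear <- 6700   # donor-gamete infants per year
dollarsPerIQ   <- 3270   # low estimate of the value of 1 IQ point
pointsPerEdit  <- 0.1    # assumed average gain per causal edit

# Recoup the whole cost from a single year's cohort:
oneYearIQ <- genomeCost / infantsPerYear / dollarsPerIQ
oneYearIQ                   # ~35.3 IQ points per infant
oneYearIQ / pointsPerEdit   # ~353 causal edits

# Reuse the genome indefinitely, valuing the perpetuity as annual value / log(1.05):
breakEvenIQ <- genomeCost * log(1.05) / (dollarsPerIQ * infantsPerYear)
breakEvenIQ                           # ~1.72 IQ points per infant
ceiling(breakEvenIQ / pointsPerEdit)  # 18 causal edits
```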

The causal edit problem remains, but at a 12% causal rate, 18 causal edits can easily be obtained from 150 edits of SNP candidates, which is fewer than the number of candidate SNPs already available. So at first glance, whole-genome synthesis can be profitable when optimizing only one trait using existing GWAS hits, and will be extremely profitable if dozens of traits are optimized and mutation load minimized.

How profitable? …see embryo selection calculations… At 70 SDs and 12% causal, the profit would be 70*15*3270*0.12*6700 - 773000000 = $1,987,534,000 the first year, or an NPV of $55,806,723,536. TODO: only half-relatedness
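The profit figures follow the same pattern. A minimal sketch plugging in the text's assumptions (a 70 SD gain converted at 15 IQ points/SD, 12% causal, 6,700 infants/year, $3,270/point, the log(1.05) perpetuity convention) and, like the text, ignoring the half-relatedness caveat.

```r
genomeCost     <- 773e6
infantsPerYear <- 6700
dollarsPerIQ   <- 3270
gainSD         <- 70    # polygenic gain in SDs, from the embryo selection calculations
pCausal        <- 0.12  # fraction of edited SNPs assumed causal

annualValue     <- gainSD * 15 * dollarsPerIQ * pCausal * infantsPerYear
firstYearProfit <- annualValue - genomeCost
npv             <- annualValue / log(1.05) - genomeCost  # indefinite reuse, 5% discounting

format(round(firstYearProfit), big.mark = ",")  # ~$2.0 billion
format(round(npv), big.mark = ",")              # ~$55.8 billion
```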

TODO:

- model the DNA synthesis cost curve: when can we expect a whole human genome to be synthesizable with a single lab's resources, like $1m/$5m/$10m? When does synthesis begin to look better than IES?

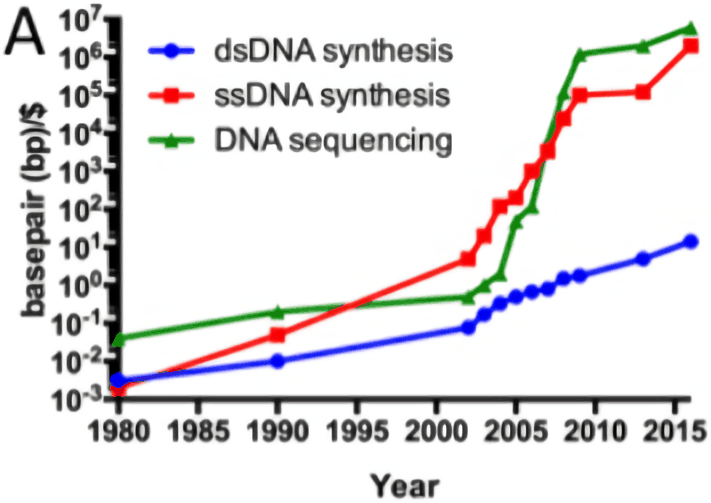

eyeballing https://www.science.org/doi/10.1126/science.aaf6850: ~0.01 BP/$ (ie. $100/BP) in 1990, ~3 BP/$ (~$0.33/BP) in 2015?

```r
# Two eyeballed points on the synthesis cost curve ($/BP)
synthesis <- data.frame(Year=c(1990, 2015), Cost=c(100, 0.33))
# An exponential decline is a straight line on the log scale
summary(l <- lm(log(Cost) ~ Year, data=synthesis))
# Projected cost of a whole 3,095,693,981 BP genome for each year 2016-2040:
prettyNum(big.mark=",", round(exp(predict(l, data.frame(Year=c(2016:2040), Cost=NA))) * 3095693981))
#             1             2             3             4             5             6             7             8             9            10            11            12            13
# "812,853,950" "646,774,781" "514,628,265" "409,481,413" "325,817,758" "259,247,936" "206,279,403" "164,133,195" "130,598,137" "103,914,832"  "82,683,356"  "65,789,813"  "52,347,894"
#            14            15            16            17            18            19            20            21            22            23            24            25
#  "41,652,375"  "33,142,123"  "26,370,653"  "20,982,703"  "16,695,599"  "13,284,419"  "10,570,198"   "8,410,536"   "6,692,128"   "5,324,818"   "4,236,872"   "3,371,211"
```

As the cost appears to be roughly linear in chromosome length, it would be possible to scale down synthesis projects if an entire genome cannot be afforded.
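Inverting the same two-point log-linear fit gives a rough answer to the TODO question of when a whole genome would fit a $10m/$5m/$1m budget. This is only a sketch: it extrapolates from two eyeballed points and assumes the exponential decline simply continues; `yearAffordable` is a hypothetical helper.

```r
synthesis <- data.frame(Year=c(1990, 2015), Cost=c(100, 0.33))
l <- lm(log(Cost) ~ Year, data=synthesis)
genomeBP <- 3095693981

# Solve log(budget / genomeBP) = intercept + slope * Year for Year
yearAffordable <- function(budget) {
    (log(budget / genomeBP) - coef(l)[["(Intercept)"]]) / coef(l)[["Year"]]
}

round(sapply(c(10e6, 5e6, 1e6), yearAffordable), 1)
```

By this crude extrapolation, a whole genome would drop under $10m around 2035, under $5m around 2038, and under $1m around 2045, consistent with the projected costs printed above.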

For example, IQ is highly polygenic, and the relevant SNPs & causal variants are spread fairly evenly over the entire genome (as indicated by the original GCTA analyses showing that SNP heritability per chromosome correlates with chromosome length, and by the locations of GWAS hits), so one could instead synthesize a chromosome accounting for ~1% of the base-pairs, which will carry ~1% of the variants, at ~1% of the total cost.

So if a whole genome costs $1b and there are ~10,000 variants with an average effect of ~0.1 IQ points and a frequency of 50%, then for $10m one could create a chromosome which would improve over a wild-type genome's chromosome by 10000 * 0.01 * 0.5 * 0.1 = 5 points; then, as resources allow and the synthesis price keeps dropping, one could create a second small chromosome for another 5 points, and so on with the bigger chromosomes for larger gains.
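The per-chromosome arithmetic generalizes to any chromosome. A minimal sketch with the text's illustrative numbers as defaults (10,000 variants spread evenly by length, 50% frequency, 0.1 IQ points each, $1b per whole genome, cost linear in length); `chromosomeGain` and `chromosomeCost` are hypothetical helpers.

```r
# Expected gain over a wild-type chromosome holding `fractionOfGenome` of the BP:
# variants on it x P(wild copy has the bad allele) x effect per variant
chromosomeGain <- function(fractionOfGenome, nVariants = 10000,
                           freqBad = 0.5, effectIQ = 0.1) {
    nVariants * fractionOfGenome * freqBad * effectIQ
}
# Synthesis cost, assuming cost is linear in length
chromosomeCost <- function(fractionOfGenome, genomeCost = 1e9) {
    genomeCost * fractionOfGenome
}

chromosomeGain(0.01)   # 5 IQ points
chromosomeCost(0.01)   # $10m
chromosomeGain(0.081)  # chromosome 1 (8.1% of BP): 40.5 points
```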

"Large-scale de novo DNA synthesis: technologies and applications", Kosuri & Church 2014 https://arep.med.harvard.edu/pdf/Kosuri_Church_2014.pdf

Cost curve:

Historical cost curves of genome sequencing & synthesis, 1980–2015 (log scale)

“Bricks and blueprints: methods and standards for DNA assembly” http://scienseed.com/clients/tomellis/wp-content/uploads/2015/08/BricksReview.pdf et al 2015

'Leproust says that won't always be the case - not if her plans to improve the technology work out. "In a few years it won't be $100,000 to store that data," she says. "It will be 10 cents."' https://www.technologyreview.com/s/610717/this-company-can-encode-your-favorite-song-in-dnafor-100000/ April 2018

http://www.synthesis.cc/synthesis/2016/03/on_dna_and_transistors

Questions: what is the best way to do genome synthesis? There are lots of possibilities:

- do one or more chromosomes at a time (which would fit in small budgets)
- optimize 1 trait to maximize the PGS SNP-wise (but the causal tagging/LD problem…)
- optimize 1 trait to maximize a haplotype PGS
- optimize multiple traits, accounting for genetic correlations, unit-weighted
- optimize multiple traits, utility-weighted
- limited optimization:
  - partial factorial trial of haplotypes (eg. take the maximal utility-weighted genome, then flip a random half of the haplotypes for the first genome; flip a different random half for the second genome; etc)
    - this could be used for response-surface estimation: to try to estimate where additivity breaks down and genetic correlations change substantially
  - constrained optimization of haplotypes: maximize the utility-weighted score subject to a soft constraint like total phenotype increases of >2SD on average (eg. if there are 10 traits, allow +20SD of total phenotype change), or a hard constraint, like no trait past >5SD (so that at least a few people who have ever lived would have had a similar PGS value on each trait)
    - because many real-world outcomes are log-normally distributed and the component normals have thin tails, it will be more efficient to increase 20 traits by 1SD than 1 trait by 20SD, to optimize the leaky pipeline
  - modalization: simply take the modal human genome, implicitly reaping gains from removing much mutation load
  - partial optimization of a prototype genome: select some exemplar genome as a baseline, like a modal genome or that of a very accomplished or very intelligent person, and optimize only a few SD up from that
  - "dose-ranging study": multiple genomes optimized to various SDs or various hard/soft constraints, to estimate the safe extreme as quickly as possible (eg. +5 vs 10 vs 15 SD)
- exotic changes: adding very rare variants like the short-sleeper or myostatin variants; increasing CNVs of genes differing between humans and chimpanzees; a genome with all codons recoded to make viral infection impossible
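The 'partial factorial trial' idea in the list above can be sketched as a design matrix. A minimal sketch, assuming a hypothetical 20 haplotypes and 8 genomes: each genome starts from the fully optimized design (all 1s) and has its own random half of haplotypes flipped back to 0. Because each haplotype ends up kept in some genomes and flipped in others, outcomes across the genomes could later be regressed on this matrix for response-surface estimation; nothing here comes from real data.

```r
set.seed(2016)
nHaplotypes <- 20  # hypothetical number of haplotype blocks being optimized
nGenomes    <- 8   # hypothetical number of synthesized genomes

# Row g = genome g; 1 = keep the beneficial haplotype, 0 = flipped back
design <- t(replicate(nGenomes, {
    keep <- rep(1L, nHaplotypes)
    keep[sample(nHaplotypes, nHaplotypes / 2)] <- 0L
    keep
}))

rowSums(design)   # every genome keeps exactly half (10) of the optimized haplotypes
colMeans(design)  # fraction of genomes keeping each haplotype; varies by haplotype
```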

CRISPR

The past 5 years have seen major breakthroughs in cheap, reliable, general-purpose genetic engineering & editing using CRISPR, since the 2012 demonstration that it could edit cells, including human ones ( et al 2013).[5] Commentary in 2012 and 2013 regarded prospects for embryo editing as highly remote.[6] Even as late as July 2014, coverage was highly circumspect, with vague musing that "Some experiments also hint that doctors may someday be able to use it to treat genetic disorders"; yet successful editing of zebrafish ( et al 2013), monkey, and human embryos was already being demonstrated in the lab, and human trials would begin 2-3 years later. (Surprisingly, Shulman & Bostrom do not mention CRISPR, but that gives an idea of how shockingly fast CRISPR went from strange to news to standard, and how low expectations for genetic engineering were pre-2015 after decades of agonizingly slow progress & high-profile failures & deaths, such that the default assumption was that direct, cheap, safe genetic engineering of embryos would not be feasible for decades, and certainly not in the foreseeable future.)

CRISPR/Cas is a bacterial anti-viral immune system: snippets of viral DNA are stored in the bacterial genome, and an enzyme system uses them to detect fresh viral DNA and then cut it, breaking it and presumably stopping the infection in its latent phase. This requires the CRISPR enzymes to be highly precise (lest they attack the bacterium's own DNA, damaging or killing it), repeatable (it's a poor immune system that can only fight off one infection ever), and programmable by short RNA sequences (because viruses constantly mutate).

This turns out to be usable in animal and human cells to delete/knock out genes: create an RNA sequence matching a particular gene, inject the RNA sequence along with the key enzymes into a cell, and it will find the gene in question inside the nucleus and snip it; this can be augmented to edit rather than delete by providing a DNA template which the snip-repair mechanisms will unwittingly copy from. Compared to the major earlier approaches using zinc-finger nucleases (ZFNs) & TALENs, CRISPR is far faster, easier, and cheaper to use, with large (at least halving) decreases in time & money cited, and its use has exploded in research labs, drawing comparisons to the invention of PCR and Nobel Prize predictions. It has been used to edit at least 36 creatures as of June 2015,[7] including:

- rhesus monkey embryos ( et al 2017)
- sheep
- muscly beagles ( et al 2015)
- muscly ( et al 2014) & hairy goats ( et al 2015)
- human embryos, curing β-thalassaemia ( et al 2015)[8]
- curing retinitis pigmentosa in mouse cells ( et al 2016) & live mice ( et al 2017) and in human cells ( et al 2016); a prelude to planned 2017 trials on Leber congenital amaurosis in live humans, and Duchenne muscular dystrophy ( et al 2014)
- cure rats or mice of tyrosinemia ( et al 2015, et al 2016), cataracts ( et al 2013), hemophilia, & HIV ( et al 2017)
- cure human cells of cystic fibrosis ( et al 2013) and HIV ( et al 2013, et al 2016)
- cut out up to 62 copies of the dangerous viral PERV genes from a pig ( et al 2016, et al 2017), with another demonstration in 2019 of ~2.6k edits in a single cell ("Enabling large-scale genome editing by reducing DNA nicking", et al 2019); other multiple-edit capabilities have been demonstrated, eg. in bacteria ( et al 2014), mice ( et al 2013), rats ( et al 2013), goats ( et al 2015), up to 14 in elephant cells,[9] and human cells ( et al 2014, et al 2013)
- create gene drives in yeast ( et al 2015), fruit flies (2015), & mosquitoes ( et al 2015, et al 2015)
- virus-resistant pigs ( et al 2016)
- sterile farmed catfish[10]
- cattle with no female offspring, which would otherwise need to be culled,[11] and vice-versa, chickens without male offspring[12]
- cattle without horns that would otherwise need to be painfully cut off[13]
- tuberculosis-resistant cows ( et al 2017)
- edited ferrets ( et al 2015); ferrets may be used for flu experiments[14]
- autistic-like macaque monkeys ( et al 2016)
- planned: allergen-free eggs,[15] disease/parasite-resistant bees,[16] cattle resistant to sleeping sickness,[17] koi carp of different sizes & color patterns[18]

(I am convinced some of these were done primarily for the lulz.)
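The RNA-guided targeting described above can be illustrated with a toy string search. A minimal sketch, not how real guide-design tools work: it scans only the forward strand of a DNA string for sites matching a guide sequence with at most `maxMismatch` mismatches, and, as an assumption added here, requires the site to be followed by an NGG motif (the PAM required by the commonly used SpCas9 enzyme). Near-matches that pass are a crude stand-in for potential off-target sites. `findTargets` and the sequences are hypothetical, and the guide is shortened from the ~20 nucleotides used in practice.

```r
findTargets <- function(genome, guide, maxMismatch = 0) {
    g <- strsplit(guide, "")[[1]]
    n <- length(g)
    hits <- data.frame(start = integer(0), mismatches = integer(0))
    lastStart <- nchar(genome) - n - 2  # leave room for the 3-base PAM
    for (i in seq_len(max(lastStart, 0))) {
        site <- strsplit(substr(genome, i, i + n - 1), "")[[1]]
        pam  <- substr(genome, i + n + 1, i + n + 2)  # the "GG" of an NGG PAM
        mm   <- sum(site != g)
        if (mm <= maxMismatch && pam == "GG") {
            hits <- rbind(hits, data.frame(start = i, mismatches = mm))
        }
    }
    hits
}

toyGenome <- "TTTACGTACTGGAAACGTTCAGGTT"
findTargets(toyGenome, "ACGTAC")                   # the intended site only
findTargets(toyGenome, "ACGTAC", maxMismatch = 1)  # plus a 1-mismatch off-target site
```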

It has appeared to be a very effective and promising genome editing tool in mammalian cells Cho et al 2013 "Targeted genome engineering in human cells with the Cas9 RNA-guided end" http://www.bmskorea.co.kr/bms_email/email2013/13-0802/paper.pdf human cells Cong et al 2013 "Multiplex genome engineering using CRISPR/Cas systems" https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3795411/ mouse and human cells Jinek et al 2012 "A programmable dual-RNA-guided DNA endonuclease in adaptive bacterial immunity" zebrafish somatic cells at the organismal level Hwang et al 2013 "Efficient genome editing in zebrafish using a CRISPR-Cas system" https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3686313/ genomic editing in cultured mammalian cells et al 2013 pluripotent stem cells, but also in zebrafish embryos with efficiencies that are comparable to those obtained using ZFNs and TALENs (Hwang et al 2013).

et al 2016, “Potential of promotion of alleles by genome editing to improve quantitative traits in livestock breeding programs” https://gsejournal.biomedcentral.com/articles/10.1186/s12711-015-0135-3

“Extensive Mammalian Germline Genome Engineering”, et al 2019 (media—“engineering 18 different loci using multiple genome engineering methods” in pigs; followup to et al 2017)

Estimating the profit from CRISPR edits is, in some ways, less straightforward than from embryo selection:

- taking the highest n reported betas/coefficients from GWAS OLS regressions means they will be systematically biased upwards due to the winner's curse and statistical-significance filtering, exaggerating the potential benefit from each SNP
- the causal tagging problem: GWAS estimates correlating SNPs with phenotypic traits, while valid for prediction (and hence selection), are not necessarily causal (such that an edit at that SNP would have the estimated effect), and the probability of any given hit being non-causal is hard to estimate
- each CRISPR edit has a chance of failing to make the correct edit, and of making wrong edits: an edit may not take in an embryo (a false negative, reflecting the editing efficiency), and there is a chance of an 'off-target' mutation elsewhere in the genome (a false positive, reflecting the specificity). A non-edit is a waste of money, while an off-target mutation could be fatal.
- CRISPR has advanced at such a rapid rate that numbers on cost, specificity, & off-target mutation rate are generally either unavailable, or arguably out of date before they were even published[19]
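These uncertainties multiply. A minimal sketch of the expected gain from one attempted edit, treating each factor as an independent probability; the parameter values in the example call are hypothetical placeholders, apart from the 12% causal rate and the $3,270/IQ-point valuation used elsewhere in the text, and the cost of off-target mutations is left out entirely.

```r
# Hypothetical helper: expected IQ gain from attempting one edit
expectedEditGain <- function(effectIQ,   # estimated effect of the SNP, IQ points
                             pCarrier,   # P(embryo carries the bad variant); 1 if sequenced & found
                             pCausal,    # P(the SNP is causal rather than a tag)
                             pSuccess) { # P(the edit actually takes)
    effectIQ * pCarrier * pCausal * pSuccess
}

# A blind edit of a ~0.5-point hit at 50% frequency, 12% causal, 90% edit success:
gain <- expectedEditGain(effectIQ = 0.5, pCarrier = 0.5, pCausal = 0.12, pSuccess = 0.9)
gain         # 0.027 IQ points
gain * 3270  # ~$88 of value, before any off-target risk or edit cost
```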

The winner's curse can be dealt with in several ways; the supplementary material of et al 2014 discusses 4 methods ("inverting the conditional expectation of the OLS estimator, maximum likelihood estimation (MLE), Bayesian estimation, and empirical-Bayes estimation"). The best one for our purposes would be a Bayesian approach, yielding posterior distributions of effect sizes. Unfortunately, the raw data for GWAS studies like et al 2013 is not available; but for our purposes we can, as in the Rietveld et al 2014 supplement, simply simulate a dataset and work with that to get posteriors, then pick the SNPs with the largest posterior means, which are not inflated by the winner's curse.
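The winner's curse, and how shrinkage corrects it, can be seen in a simulation like the one proposed. A minimal sketch using a normal-normal empirical-Bayes model (one of the four methods listed, not the full Bayesian analysis preferred above), with hypothetical values: 10,000 SNPs whose true effects have SD `tau` = 0.01, each estimated with standard error `se` = 0.01.

```r
set.seed(2014)
nSNP <- 10000
tau  <- 0.01  # hypothetical SD of true effects (SD units)
se   <- 0.01  # hypothetical standard error of each GWAS estimate

trueBeta <- rnorm(nSNP, mean = 0, sd = tau)
estBeta  <- trueBeta + rnorm(nSNP, mean = 0, sd = se)

# Pick the 30 SNPs with the largest estimated effects, as a naive editor would
top <- order(abs(estBeta), decreasing = TRUE)[1:30]

# Empirical Bayes: estimate tau^2 from the spread of the estimates, then shrink
tau2Hat  <- max(var(estBeta) - se^2, 0)
postBeta <- estBeta * tau2Hat / (tau2Hat + se^2)

# The naive estimates of the top hits are inflated; the shrunken ones sit near the truth
c(naive    = mean(abs(estBeta[top])),
  shrunken = mean(abs(postBeta[top])),
  truth    = mean(abs(trueBeta[top])))
```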

The causal tagging problem is more difficult. The background for the causal tagging problem is that genes are recombined randomly at conception, but they are not recombined at the individual gene level; rather, genes tend to live in ‘blocks’ of contiguous genes called haplotypes, leading to linkage disequilibrium (LD). Not every possible variant in a haplotype is detected by a SNP array chip, so if you have genes A/B/C/D, it may be the case that a variant of A will increase intelligence but your SNP array chip only detects variants of D; when you do a GWAS, you then discover D predicts increased intelligence. (And if your SNP array chip tags B or C, you may get hits on those as well!) This is not a problem for embryo selection, because if you can only see D’s variants, and you see that an embryo has the ‘good’ D variant, and you pick that embryo, it will indeed grow up as you hoped because that D variant pulled the true causal A variant along for the ride. Indeed, for selection or prediction, the causal tagging problem can be seen as a good thing: your GWASes can pick up effects from parts of the genome you didn’t even pay to sequence - “buy one, get one free”. (The downside can be underestimation due to imperfect proxies.) But this is a problem for embryo editing because if a CRISPR enzyme goes in and carefully edits D while leaving everything untouched, A by definition remains the same and there will be no benefits. A SNP being non-causal is not a serious problem for embryo editing if we know which SNPs are non-causal; as illustrated above, the distribution of effects is smooth enough that discarding a top SNP for the next best alternative costs little. 
But it is a serious problem if we don’t know which ones are non-causal, because then we waste precious edits (imagine a budget of 30 edits and a non-causal rate of 50%; if we are ignorant which are non-causal, we effectively get only 15 edits, but if we know which to drop, then it’s almost as good as if all were causal). Causality can be established in a few ways; for example, a hit can be reused in a mutant laboratory animal breed to see if it also predicts there (This is behind some striking breakthroughs like et al 2016’s proof that neural pruning is a cause of schizophrenia), either using existing strains or creating a fresh modification (using, say, CRISPR). This has not been done for the top intelligence hits and given the expense & difficulty of animal experimentation, we can’t expect it anytime soon. One can also try to use prior information to boost the posterior probability of an effect: if a gene has already been linked to the nervous system by previous studies exploring mutations or gene expression data etc, or other aspects of the physical structure point towards that SNP like being closest to a gene, then that is evidence for the association being causal. Intelligence hits are enriched for nervous system connections, but this method is inherently weak. A better method is fine-mapping or whole-genome sequencing: when all the variants in a haplotype are sequenced, then the true causal variant will tend to perform subtly better and one can single it out of the whole SNP set using various statistical criteria (eg. et al 2015 using their algorithm on autoimmune disorder estimate 5.5% of their 4.9k SNPs are causal). Useful, but whole-genomes are still expensive enough that they are not created nearly as much as SNPs and there do not seem to be many comparisons to ground-truths or meta-analyses. 
Another approach is similar to the lab animal approach: human groups differ genetically, and their haplotypes & LD patterns can differ greatly; if D looks associated with intelligence in one group, but is not associated in a genetically distant group with different haplotypes, that strongly suggests that in the first group, D was indeed just proxying for another variant. We can also expect that high-frequency variants will tend not to be population-specific but to be ancient, shared causal variants. Some of the intelligence hits have replicated in European-American samples, as expected but not helpfully; more importantly, some have also replicated in African-American ( et al 2015) and East Asian samples ( et al 2015), and the top SNPs have some predictive power for mean IQs across ethnic groups (2015). More generally, while GWASes are usually paranoid about ancestry, using as homogeneous a sample as possible and controlling away any detectable differences in ancestry, a GWAS can instead exploit differing ancestries via “admixture mapping” to home in on causal variants, but this hasn’t been done for intelligence. We can note that some GWASes have compared how hits replicate across populations (eg. et al 2010, et al 2010, et al 2011, et al 2011, et al 2011, et al 2012, et al 2013/ et al 2013, et al 2013, et al 2014, DIAGRAM et al 2014, et al 2015, et al 2015, et al 2015); despite interpretative difficulties such as statistical power, hits often replicate from European-descent samples to distant ethnicities, and the example of the schizophrenia GWASes ( et al 2013, et al 2014) also offers hope by showing a strong correlation of 0.66/0.61 between African & European schizophrenia SNP-based GCTAs. (It also seems to me that complex, highly polygenic traits tend to replicate better cross-ethnically than simpler diseases.)
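A toy simulation of the A/D story and of the cross-population check, under hypothetical assumptions: the trait depends only on SNP A (effect 1 SD per copy, plus unit Gaussian noise), and tag SNP D copies A with probability 0.9 in one population but only 0.2 in a second population with different haplotypes (otherwise D is an independent coin flip). D looks strongly associated in the first population and much less so in the second, while "editing" D changes nothing in either.

```python
import random

def phenotype(a, d, noise, beta=1.0):
    # d is deliberately unused: D only tags the causal SNP A
    return beta * a + noise

def tag_slope(ld_copy, n=20000, seed=1):
    """GWAS-style slope of the trait on tag SNP D, plus the mean gain from editing D."""
    rng = random.Random(seed)
    A = [rng.random() < 0.5 for _ in range(n)]
    # D equals A with probability ld_copy, otherwise an independent coin flip
    D = [a if rng.random() < ld_copy else (rng.random() < 0.5) for a in A]
    noise = [rng.gauss(0, 1) for _ in range(n)]
    trait = [phenotype(a, d, e) for a, d, e in zip(A, D, noise)]

    # least-squares slope of the trait on D (what a GWAS would report)
    mean_d, mean_t = sum(D) / n, sum(trait) / n
    cov = sum((d - mean_d) * (t - mean_t) for d, t in zip(D, trait)) / n
    var = sum((d - mean_d) ** 2 for d in D) / n

    # edit everyone's D to the 'good' variant; A, the causal SNP, is untouched
    edited = [phenotype(a, True, e) for a, e in zip(A, noise)]
    return cov / var, sum(edited) / n - mean_t

for label, ld in [("population 1 (strong LD)", 0.9), ("population 2 (weak LD)", 0.2)]:
    slope, gain = tag_slope(ld)
    print(f"{label}: apparent effect of D = {slope:.2f}, gain from editing D = {gain:.2f}")
```

The apparent effect of D is roughly the LD strength times A's effect, which is why a tag's association weakens or vanishes in a population where the haplotype structure differs.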

-

Ntzani et al 2012, “Consistency of genome-wide associations across major ancestral groups” /doc/genetics/heritable/correlation/2012-ntzani.pdf

-

Marigorta & Navarro 2013, “High Trans-ethnic Replicability of GWAS Results Implies Common Causal Variants” https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3681663/

“Genetic effects influencing risk for major depressive disorder in China and Europe”, et al 2017 https://www.nature.com/tp/journal/v7/n3/full/tp2016292a.html

“Father Absence and Accelerated Reproductive Development”, et al 2017 https://www.biorxiv.org/content/biorxiv/early/2017/04/04/123711.full.pdf

“Transethnic differences in GWAS signals: A simulation study”, 2018

“Transethnic genetic correlation estimates from summary statistics”, et al 2016

“Molecular Genetic Evidence for Genetic Overlap between General Cognitive Ability and Risk for Schizophrenia: A Report from the Cognitive Genomics Consortium (COGENT)”, Lencz et al 2013/2014: IQ PGS applied to schizophrenia case status:

-

EA: 0.41%

-

Japanese: 0.38%

-

Ashkenazi Jew: 0.16%

-

MGS African-American: 0.00%

myopia (refractive error): European/East Asian ( et al 2018, “Genome-wide association meta-analysis highlights light-induced signaling as a driver for refractive error”): r_g = 0.90/0.80

“Consistent Association of Type 2 Diabetes Risk Variants Found in Europeans in Diverse Racial and Ethnic Groups”, et al 2010 https://journals.plos.org/plosgenetics/article?id=10.1371/journal.pgen.1001078

et al 2017, “Heterogeneity in polygenic scores for common human traits” https://www.biorxiv.org/content/early/2017/02/05/106062.full; Figure 3: the height/education/etc polygenic scores are roughly 5x as predictive in white Americans as in African-Americans (~0.05 R^2 vs ~0.01 R^2), and since African-Americans average about 25% white ancestry anyway, that’s consistent with 10% or less…

“Using Genetic Distance to Infer the Accuracy of Genomic Prediction”, et al 2016 https://journals.plos.org/plosgenetics/article?id=10.1371/journal.pgen.1006288

Height PGSes don’t work cross-racially: “Human Demographic History Impacts Genetic Risk Prediction across Diverse Populations”, et al 2017 (note that this paper had errors considerably exaggerating the decline):

The vast majority of genome-wide association studies (GWASs) are performed in Europeans, and their transferability to other populations is dependent on many factors (eg. linkage disequilibrium, allele frequencies, genetic architecture). As medical genomics studies become increasingly large and diverse, gaining insights into population history and consequently the transferability of disease risk measurement is critical. Here, we disentangle recent population history in the widely used 1000 Genomes Project reference panel, with an emphasis on populations underrepresented in medical studies. To examine the transferability of single-ancestry GWASs, we used published summary statistics to calculate polygenic risk scores for eight well-studied phenotypes. We identify directional inconsistencies in all scores; for example, height is predicted to decrease with genetic distance from Europeans, despite robust anthropological evidence that West Africans are as tall as Europeans on average. To gain deeper quantitative insights into GWAS transferability, we developed a complex trait coalescent-based simulation framework considering effects of polygenicity, causal allele frequency divergence, and heritability. As expected, correlations between true and inferred risk are typically highest in the population from which summary statistics were derived. We demonstrate that scores inferred from European GWASs are biased by genetic drift in other populations even when choosing the same causal variants and that biases in any direction are possible and unpredictable. This work cautions that summarizing findings from large-scale GWASs may have limited portability to other populations using standard approaches and highlights the need for generalized risk prediction methods and the inclusion of more diverse individuals in medical genomics.

“Genetic contributors to variation in alcohol consumption vary by race/ethnicity in a large multi-ethnic genome-wide association study”, et al 2017 /doc/genetics/heritable/correlation/2017-jorgenson.pdf

“High-Resolution Genetic Maps Identify Multiple Type 2 Diabetes Loci at Regulatory Hotspots in African Americans and Europeans”, et al 2017 /doc/genetics/heritable/correlation/2017-lau.pdf

“The genomic landscape of African populations in health and disease”, et al 2017 /doc/genetics/selection/2017-rotimi.pdf

Wray et al (MDDWG PGC) 2017, “Genome-wide association analyses identify 44 risk variants and refine the genetic architecture of major depression”:

-

European/Chinese depression: rg = 0.31

-

European/Chinese schizophrenia: rg = 0.34

-

European/Chinese bipolar disorder: rg = 0.45

“Genetic Diversity Turns a New PAGE in Our Understanding of Complex Traits”, et al 2017:

To demonstrate the benefit of studying underrepresented populations, the Population Architecture using Genomics and Epidemiology (PAGE) study conducted a GWAS of 26 clinical and behavioral phenotypes in 49,839 non-European individuals. Using novel strategies for multi-ethnic analysis of admixed populations, we confirm 574 GWAS catalog variants across these traits, and find 28 novel loci and 42 residual signals in known loci. Our data show strong evidence of effect-size heterogeneity across ancestries for published GWAS associations, which substantially restricts genetically-guided precision medicine. We advocate for new, large genome-wide efforts in diverse populations to reduce health disparities.

et al 2017, “Genome-wide association study identifies 112 new loci for body mass index in the Japanese population”: r = 0.82 between top European/Japanese SNP hits

“Generalizing Genetic Risk Scores from Europeans to Hispanics/Latinos”, et al 2018:

…we compare various approaches for GRS construction, using GWAS results from both large EA studies and a smaller study in Hispanics/Latinos, the Hispanic Community Health Study/Study of Latinos (HCHS/SOL, n=12,803). We consider multiple ways to select SNPs from association regions and to calculate the SNP weights. We study the performance of the resulting GRSs in an independent study of Hispanics/Latinos from the Woman Health Initiative (WHI, n=3,582). We support our investigation with simulation studies of potential genetic architectures in a single locus. We observed that selecting variants based on EA GWASs generally performs well, as long as SNP weights are calculated using Hispanics/Latinos GWASs, or using the meta-analysis of EA and Hispanics/Latinos GWASs. The optimal approach depends on the genetic architecture of the trait.

“Genetic architecture of gene expression traits across diverse populations”, et al 2018: causal variants are mostly shared cross-racially despite different LD patterns, as indicated by similar gene expression effects?

Consistent with the concordant direction of effect at associated SNPs, there was high genetic correlation (rG) between the European and East Asian cohort when considering the additive effects of all SNPs genotyped on the Immunochip19 (Crohn’s disease rG = 0.76, ulcerative colitis rG = 0.79) (Supplementary Table 11). Given that rare SNPs (minor allele frequency (MAF) < 1%) are more likely to be population-specific, these high rG values also support the notion that the majority of causal variants are common (MAF>5%).

“Theoretical and empirical quantification of the accuracy of polygenic scores in ancestry divergent populations”, et al 2020:

Polygenic scores (PGS) have been widely used to predict complex traits and risk of diseases using variants identified from genome-wide association studies (GWASs). To date, most GWASs have been conducted in populations of European ancestry, which limits the use of GWAS-derived PGS in non-European populations. Here, we develop a new theory to predict the relative accuracy (RA, relative to the accuracy in populations of the same ancestry as the discovery population) of PGS across ancestries. We used simulations and real data from the UK Biobank to evaluate our results. We found across various simulation scenarios that the RA of PGS based on trait-associated SNPs can be predicted accurately from modeling linkage disequilibrium (LD), minor allele frequencies (MAF), cross-population correlations of SNP effect sizes and heritability. Altogether, we find that LD and MAF differences between ancestries explain alone up to ~70% of the loss of RA using European-based PGS in African ancestry for traits like body mass index and height. Our results suggest that causal variants underlying common genetic variation identified in European ancestry GWASs are mostly shared across continents.

“Polygenic prediction of the phenome, across ancestry, in emerging adulthood”, et al 2017 (supplement): large loss of PGS power in many traits, but direct comparison seems to be unavailable for most of the PGSes other than stuff like height?

“Genetics of self-reported risk-taking behavior, trans-ethnic consistency and relevance to brain gene expression”, et al 2018:

There were strong positive genetic correlations between risk-taking and attention-deficit hyperactivity disorder, bipolar disorder and schizophrenia. Index genetic variants demonstrated effects generally consistent with the discovery analysis in individuals of non-British White, South Asian, African-Caribbean or mixed ethnicity.

Polygenic risk scores are gaining more and more attention for estimating genetic risks for liabilities, especially for noncommunicable diseases. They are now calculated using thousands of DNA markers. In this paper, we compare the score distributions of two previously published very large risk score models within different populations. We show that the risk score model together with its risk stratification thresholds, built upon the data of one population, cannot be applied to another population without taking into account the target population’s structure. We also show that if an individual is classified to the wrong population, his/her disease risk can be systematically incorrectly estimated.

“Analysis of Polygenic Score Usage and Performance across Diverse Human Populations”, et al 2018:

We analyzed the first decade of polygenic scoring studies (2008–2017, inclusive), and found that 67% of studies included exclusively European ancestry participants and another 19% included only East Asian ancestry participants. Only 3.8% of studies were carried out on samples of African, Hispanic, or Indigenous peoples. We find that effect sizes for European ancestry-derived polygenic scores are only 36% as large in African ancestry samples, as in European ancestry samples (t=-10.056, df=22, p=5.5x10-10). Poorer performance was also observed in other non-European ancestry samples. Analysis of polygenic scores in the 1000Genomes samples revealed many strong correlations with global principal components, and relationships between height polygenic scores and height phenotypes that were highly variable depending on methodological choices in polygenic score construction.

“Transancestral GWAS of alcohol dependence reveals common genetic underpinnings with psychiatric disorders”, et al 2018:

PRS based on our meta-analysis of AD were significantly predictive of AD outcomes in all three tested external cohorts. PRS derived from the unrelated EU GWAS predicted up to 0.51% of the variance in past month alcohol use disorder in the Avon Longitudinal Study of Parents and Children (ALSPAC; P=0.0195; Supplementary Fig. 10a) and up to 0.3% of problem drinking in Generation Scotland (P=7.9×10-6; Supplementary Fig. 10b) as indexed by the CAGE (Cutting down, Annoyance by criticism, Guilty feelings, and Eye-openers) questionnaire. PRS derived from the unrelated AA GWAS predicted up to 1.7% of the variance in alcohol dependence in the COGA AAfGWAS cohort (P=1.92×10-7; Supplementary Fig. 10c). Notably, PRS derived from the unrelated EU GWAS showed much weaker prediction (maximum r2=0.37%, P=0.01; Supplementary Fig. 10d) in the COGA AAfGWAS than the ancestrally matched AA GWAS-based PRS despite the much smaller discovery sample for AA. In addition, the AA GWAS-based AD PRS also still yielded significant variance explained after controlling for other genetic factors (r2=1.16%, P=2.5×10-7). Prediction of CAGE scores in Generation Scotland remained significant and showed minimal attenuation (r2=0.29%, P=1.0×10-5) after conditioning on PRS for alcohol consumption derived from UK Biobank results17. In COGA AAfGWAS, the AA PRS derived from our study continued to predict 1.6% of the variance in alcohol dependence after inclusion of rs2066702 genotype as a covariate, indicating independent polygenic effects beyond the lead ADH1B variant (Supplementary Methods).

So in African-Americans, the EU-derived PGS explains only 0.37/1.7 ≈ 22% as much variance as the ancestry-matched AA PGS?
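Several notes in this section compare variance explained across ancestries, so a small helper makes the arithmetic explicit. It assumes, as is standard, that prediction accuracy scales as the square root of variance explained, so retaining ~22% of R² corresponds to retaining ~47% of the predictive correlation. The figures are the ones quoted in this section; the helper and its name are illustrative only.

```python
import math

def relative_accuracy(r2_target, r2_reference):
    """Fraction of variance explained retained in the target population, and the
    corresponding fraction of the prediction correlation (sqrt of the R^2 ratio)."""
    ratio = r2_target / r2_reference
    return ratio, math.sqrt(ratio)

# (target, reference) variance explained as quoted in this section;
# units differ between rows but are the same within each pair
quoted = {
    "height/education PGS, African- vs white Americans": (0.01, 0.05),
    "alcohol dependence, EU- vs AA-derived PRS in African-Americans": (0.37, 1.7),
    "schizophrenia PRS, admixed Africans vs Europeans": (0.5, 3.5),
}
for label, (target, reference) in quoted.items():
    r2_ratio, r_ratio = relative_accuracy(target, reference)
    print(f"{label}: {r2_ratio:.0%} of R^2, {r_ratio:.0%} of r")
```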

“The transferability of lipid-associated loci across African, Asian and European cohorts”, et al 2019:

A polygenic score based on established LDL-cholesterol-associated loci from European discovery samples had consistent effects on serum levels in samples from the UK, Uganda and Greek population isolates (correlation coefficient r=0.23 to 0.28 per LDL standard deviation, p<1.9x10-14). Trans-ethnic genetic correlations between European ancestry, Chinese and Japanese cohorts did not differ significantly from 1 for HDL, LDL and triglycerides. In each study, >60% of major lipid loci displayed evidence of replication with one exception. There was evidence for an effect on serum levels in the Ugandan samples for only 10% of major triglyceride loci. The PRS was only weakly associated in this group (r=0.06, SE=0.013).

“Identification of 28 new susceptibility loci for type 2 diabetes in the Japanese population”, et al 2019:

When we compared the effect sizes of 69 of the 88 lead variants in Japanese and Europeans that were available in a published European GWAS2 (Supplementary Table 3 and Supplementary Fig. 5), we found a strong positive correlation (Pearson’s r= 0.87, P= 1.4 × 10−22) and directional consistency (65 of 69 loci, 94%, sign-test P= 3.1 × 10−15). In addition, when we compared the effect sizes of the 95 of 113 lead variants reported in the European type 2 diabetes GWAS2 that were available in both Japanese and European type 2 diabetes GWAS (Supplementary Table 2 and Supplementary Fig. 6a), we also found a strong positive correlation (Pearson’s r= 0.74, P= 5.9 × 10−18) and directional consistency (83 of 95 loci, 87%, sign-test P= 3.2 × 10−14). After this manuscript was submitted, a larger type 2 diabetes GWAS of European ancestry was published17. When we repeated the comparison at the lead variants reported in this larger European GWAS, we found a more prominent correlation (Pearson’s r= 0.83, P= 8.7 × 10−51) and directional consistency (181 of 192 loci, 94%, sign-test P= 8.3 × 10−41) of the effect sizes (Supplementary Table 4 and Supplementary Fig. 6b). These results indicate that most of the type 2 diabetes susceptibility loci identified in the Japanese or European population had comparable effects on type 2 diabetes in the other population.
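The sign-test P-values in this quote are exact binomial tests of directional agreement against a coin-flip null. A minimal stdlib sketch of the two-sided version (p = 0.5) reproduces the first one:

```python
from math import comb

def sign_test(consistent, total):
    """Exact two-sided sign test: P(a split at least this lopsided | p = 0.5)."""
    k = max(consistent, total - consistent)
    upper_tail = sum(comb(total, i) for i in range(k, total + 1)) / 2 ** total
    return min(1.0, 2 * upper_tail)

print(f"{sign_test(65, 69):.2g}")  # 65 of 69 lead variants agree in direction → 3.1e-15
```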

“Identification of type 2 diabetes loci in 433,540 East Asian individuals”, et al 2019:

Overall, the per-allele effect sizes between the two ancestries were moderately correlated (r=0.54; Figure 2A). When the comparison was restricted to the 290 variants that are common (MAF≥5%) in both ancestries, the effect size correlation increased to r=0.71 (Figure 2B; Supplementary Figure 8). This effect size correlation further increased to r=0.88 for 116 variants significantly associated with T2D (P<5x10-8) in both ancestries. While the overall effect sizes for all 343 variants appear, on average, to be stronger in East Asian individuals than European, this trend is reduced when each locus is represented only by the lead variant from one population (Supplementary Figure 9). Specifically, many variants identified with larger effect sizes in the European meta-analysis are missing from the comparison because they were rare/monomorphic or poorly imputed in the East Asian meta-analysis, for which imputation reference panels are less comprehensive compared to the European-centric Haplotype Reference Consortium panel.

“Genetic analyses of diverse populations improves discovery for complex traits”, Wojcik et al 2019:

When stratified by self-identified race/ethnicity, the effect sizes for the Hispanic/Latino population remained significantly attenuated compared to the previously reported effect sizes (β= 0.86; 95% confidence interval = 0.83–0.90; Fig. 2a). Effect sizes for the African American population were even further diminished at nearly half the strength (β= 0.54; 95% confidence interval = 0.50–0.58; Fig. 2a). This is suggestive of truly differential effect sizes between ancestries at previously reported variants, rather than these effect sizes being upwardly biased in general (that is, exhibiting ‘winner’s curse’), which should affect all groups equally.

“Contributions of common genetic variants to risk of schizophrenia among individuals of African and Latino ancestry”, et al 2019:

Consistent with previous reports demonstrating the generalizability of polygenic findings for schizophrenia across diverse populations [14, 43, 44], individual-level scores constructed from PGC-SCZ2 summary statistics were significantly associated with case−control status in admixed African, Latino, and European cohorts in the current study (Fig. 2a). When considering scores constructed from approximately independent common variants (pairwise r2 < 0.1), we observed the best overall prediction at a P value threshold (PT) of 0.05; these scores explained ~3.5% of the variance in schizophrenia liability among Europeans (P = 4.03 × 10−110), ~1.7% among Latino individuals (P = 9.02 × 10−52), and ~0.5% among admixed African individuals (P = 8.25 × 10−19) (Fig. 2a; Supplemental Table 6). Consistent with expectation, when comparing results for scores constructed from larger numbers of nonindependent SNPs, we generally observed an improvement in predictive value (Fig. 2b; Supplemental Table 7).

Polygenic scores based on African ancestry GWAS results were significantly associated with schizophrenia among admixed African individuals, attaining the best overall predictive value when constructed from approximately independent common variants (pairwise r2 < 0.1) with PT ≤ 0.5 in the discovery analysis (Fig. 2a and Supplemental Table 6); this score explained ~1.3% of the variance in schizophrenia liability (P = 3.47 × 10−41). Scores trained on African ancestry GWAS results also significantly predicted case−control status across populations; scores based on a PT ≤ 0.5 and pairwise r2 < 0.8 explained ~0.2% of the variability in liability in Europeans (P = 2.35 × 10−7) and ~0.1% among Latino individuals (P = 0.000184) (Fig. 2b and Supplemental Table 7). Similarly, scores constructed from Latino GWAS results (PT ≤ 0.5) were of greatest predictive value among Latinos (liability R2 = 2%; P = 3.11 × 10−19) and Europeans (liability R2 = 0.8%; P = 1.60 × 10−9); with scores based on PT ≤ 0.05 and pairwise r2 < 0.1 showing nominally significant association with case-control status among African ancestry individuals (liability R2 = 0.2%; P = 0.00513).

We next considered polygenic scores constructed from trans-ancestry meta-analysis of PGC-SCZ2 summary statistics and our African and Latino GWAS, which revealed increased significance and improved predictive value in all three ancestries. Among African ancestry individuals, meta-analytic scores based on PT ≤ 0.5 explained ~1.7% of the variance (P = 4.37 × 10−53); while scores based on PT ≤ 0.05 accounted for ~2.1% and ~3.7% of the variability in liability among Latino (P = 1.10 × 10−59) and European individuals (P = 1.73 × 10−114), respectively.

“Quantifying genetic heterogeneity between continental populations for human height and body mass index”, et al 2019:

Genome-wide association studies (GWAS) in samples of European ancestry have identified thousands of genetic variants associated with complex traits in humans. However, it remains largely unclear whether these associations can be used in non-European populations. Here, we seek to quantify the proportion of genetic variation for a complex trait shared between continental populations. We estimated the between-population correlation of genetic effects at all SNPs (rg) or genome-wide significant SNPs (rg(GWS)) for height and body mass index (BMI) in samples of European (EUR; n = 49,839) and African (AFR; n = 17,426) ancestry. The rg between EUR and AFR was 0.75 (s. e. = 0.035) for height and 0.68 (s. e. = 0.062) for BMI, and the corresponding rg(gwas) was 0.82 (s. e. = 0.030) for height and 0.87 (s. e. = 0.064) for BMI, suggesting that a large proportion of GWAS findings discovered in Europeans are likely applicable to non-Europeans for height and BMI. There was no evidence that rg differs in SNP groups with different levels of between-population difference in allele frequency or linkage disequilibrium, which, however, can be due to the lack of power.

To elucidate the genetics of coronary artery disease (CAD) in the Japanese population, we conducted a large-scale genome-wide association study (GWAS) of 168,228 Japanese (25,892 cases and 142,336 controls) with genotype imputation using a newly developed reference panel of Japanese haplotypes including 1,782 CAD cases and 3,148 controls. We detected 9 novel disease-susceptibility loci and Japanese-specific rare variants contributing to disease severity and increased cardiovascular mortality. We then conducted a transethnic meta-analysis and discovered 37 additional novel loci. Using the result of the meta-analysis, we derived a polygenic risk score (PRS) for CAD, which outperformed those derived from either Japanese or European GWAS. The PRS prioritized risk factors among various clinical parameters and segregated individuals with increased risk of long-term cardiovascular mortality. Our data improves clinical characterization of CAD genetics and suggests the utility of transethnic meta-analysis for PRS derivation in non-European populations.

“Genetic Risk Scores for Cardiometabolic Traits in Sub-Saharan African Populations”, et al 2020:

There is growing support for the use of genetic risk scores (GRS) in routine clinical settings. Due to the limited diversity of current genomic discovery samples, there are concerns that the predictive power of GRS will be limited in non-European ancestry populations. Here, we evaluated the predictive utility of GRS for 12 cardiometabolic traits in sub-Saharan Africans (AF; n=5200), African Americans (AA; n=9139), and European Americans (EA; n=9594). GRS were constructed as weighted sums of the number of risk alleles. Predictive utility was assessed using the additional phenotypic variance explained and increase in discriminatory ability over traditional risk factors (age, sex and BMI), with adjustment for ancestry-derived principal components. Across all traits, GRS showed upto a 5-fold and 20-fold greater predictive utility in EA relative to AA and AF, respectively. Predictive utility was most consistent for lipid traits, with percent increase in explained variation attributable to GRS ranging from 10.6% to 127.1% among EA, 26.6% to 65.8% among AA, and 2.4% to 37.5% among AF. These differences were recapitulated in the discriminatory power, whereby the predictive utility of GRS was 4-fold greater in EA relative to AA and up to 44-fold greater in EA relative to AF. Obesity and blood pressure traits showed a similar pattern of greater predictive utility among EA.

Given the practice of embryo editing, the causal tagging problem can gradually solve itself: as edits are made, forcibly breaking the potential confounds, the causal nature of an SNP becomes clear. But how should edits be allocated across the top SNPs to determine each one’s causal nature as efficiently as possible, without spending too many edits investigating? A naive answer might be a power analysis: for a two-group t-test trying to detect a difference of ~0.03 SD for each variant (the rough size of the top few variants), with variance reduced by the known polygenic score and the standard desired power of 80%, one would need to randomize a total sample of n = 34842 to reject the null hypothesis of 0 effect20. Setting up a factorial experiment & randomizing several variants simultaneously may allow inferences on them as well, but clearly this is going to be a tough row to hoe. This is unduly pessimistic, since we neither need nor desire 80% power, nor are we comparing to a null hypothesis; our goal is more modest. Since only a certain number of edits will be doable for any embryo, say 30, we merely want to accumulate enough evidence about each of the top 30 variants to either demote it to #31 (so we no longer spend any edits on it) or confirm that it belongs in the top 30 and should always be edited. This is immediately recognizable as reinforcement learning: the multi-armed bandit (MAB) problem (each possible edit being an independent arm which can be pulled or not); or more precisely, since early childhood (5yo) IQ scores are relatively poorly correlated with adult scores (r = 0.55) and many embryos may be edited before data on the first edits starts coming in, a multi-armed bandit with multiple plays and delayed feedback.
(There is no way to receive meaningful feedback about the effect of an edit immediately upon birth, although there might be ways to get feedback faster, such as using short-sleeper gene edits to enhance education.) Thompson sampling is a randomized Bayesian approach which is simple and theoretically optimal, with excellent performance in practice as well; an extension to multiple plays is also optimal. Dealing with delayed feedback is known to be difficult, and multiple-play Thompson sampling may not be optimal there, but in simulations it performs better with delayed feedback than other standard MAB algorithms. We can consider a simulation of the scenario in which every time-step is a day and 1 or more embryos must be edited that day; a noisy measure of IQ then becomes available 9*31 + 5*365 = 2104 days later (roughly 9 months of gestation plus 5 years), and is fed into a GWAS mixture model in which each SNP’s GWAS correlation is treated as drawn either from a causal distribution or from a nuisance distribution, with an unknown mixing probability. With additional data, the SNPs’ effect estimates are refined, as are each SNP’s probability of having been drawn from the causal distribution and the overall mixing probability, similar to the “Bayes B” paradigm from the “Bayesian alphabet” of genomic prediction methods in animal breeding. (So for the first 2104 time-steps, a Thompson sample would be performed to yield a new set of edits; then at each subsequent time-step a datapoint would mature, the posteriors would be updated, and another set of edits created.) The relevant question is how much regret will fall, and how many causal SNPs become the top picks, after how many edits & days: hopefully high performance & low regret will be achieved within a few years after the initial 5-year delay.
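The n = 34842 figure in the power analysis above can be sanity-checked with the textbook normal-approximation formula for comparing two means, n = 2σ²(z₁₋α/₂ + z₁₋β)²/δ² per group. This sketch assumes a 0.03 SD difference, two-sided α = 0.05 and 80% power, and ignores the small t-distribution correction. The `r2_covariate` parameter is a hypothetical stand-in for the residual-variance reduction from conditioning on a polygenic score. With no reduction it gives ~34.9k in total, in the same ballpark as the figure above.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(delta, r2_covariate=0.0, alpha=0.05, power=0.80):
    """Normal-approximation sample size per arm for a two-sample comparison of means.

    delta: difference to detect, in phenotypic SD units.
    r2_covariate: variance explained by a covariate (eg. a polygenic score),
        which shrinks the residual variance to (1 - r2_covariate).
    """
    z = NormalDist().inv_cdf
    z_total = z(1 - alpha / 2) + z(power)
    return ceil(2 * (1 - r2_covariate) * z_total ** 2 / delta ** 2)

n = n_per_group(0.03)
print(n, 2 * n)  # → 17442 34884
```

Since n scales with 1/δ², halving the effect to be detected quadruples the required sample, which is why per-variant randomization at these effect sizes looks so daunting.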

A more concrete example: imagine we have a budget of 60 edits (based on the multiplex pig editing), a causal probability of 10%, and an exponential distribution of effects (rate 70.87) over 500,000 candidate alleles, of which we consider the top 1000; each has a frequency of 50%, and we sequence before editing to avoid wasting edits on alleles the embryo already carries. What are our best-case and worst-case IQ increases? In the worst case, the top 60 are all non-causal, so our improvement is 0 IQ points; in the best case, where all hits are causal, half of the hits are discarded after sequencing, and the remaining top 60 get us ~6.1 IQ points; the intermediate case of 10% causal gets us ~0.61 IQ points, so our regret is 5.49 IQ points per embryo. Unsurprisingly, a 10% causal rate is horribly inefficient. In the 10% case, if we can infer the true causal SNPs, we only need to start with ~600 SNPs to saturate our editing budget on average, or ~900 to have a <1% chance of winding up with <60 causal SNPs, so 1000 SNPs seems like a good starting point. (Of course, we also want a larger window so that, as our edit budget increases with future income growth & technological improvement, we can smoothly incorporate additional SNPs.) So what order of sample size do we need to reduce our regret from 5.49 to something more reasonable like <0.25 IQ points?
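The ~600 and ~900 figures above can be checked with an exact binomial tail. This sketch assumes, as those figures do (600 × 10% = 60), that only causality matters, ignoring the 50% of alleles an embryo may already carry:

```python
from math import comb

def prob_fewer_than(k, n, p):
    """P(X < k) for X ~ Binomial(n, p): chance of ending up with fewer than k causal SNPs."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k))

p_causal, budget = 0.10, 60
for n_snps in (600, 900, 1000):
    print(n_snps, "expected causal:", round(n_snps * p_causal, 1),
          "P(< 60 causal):", round(prob_fewer_than(budget, n_snps, p_causal), 5))
```

At 600 candidates the budget is filled only about half the time; by 900 the shortfall risk is far below 1%.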

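```r
SNPs       <- 500000  # candidate alleles
SNPlimit   <- 1000    # top GWAS hits under consideration
rate       <- 70.87   # rate of the exponential distribution of effect sizes
editBudget <- 60      # edits per embryo
frequency  <- 0.5     # fraction of hits already carried, discarded after sequencing

# best case (all hits causal), averaged over 1000 simulations
mean(replicate(1000, {
    # the SNPlimit largest of SNPs exponentially-distributed effects
    hits <- sort(rexp(SNPs, rate=rate), decreasing=TRUE)[1:SNPlimit]
    # keep a random half (the rest are already present); sample() returns the
    # survivors in random order, so [1:editBudget] sums a random 60 of them
    sum(sample(hits, length(hits) * frequency)[1:editBudget])
}))
```

The regret of Thompson sampling with Gaussian priors & likelihood is O(sqrt(N * T * ln(N))), where N is the number of arms/actions and T is the current timestep (http://jmlr.csail.mit.edu/proceedings/papers/v31/agrawal13a.pdf). Hence, if we have 1000 actions and sample once, our expected total regret is on the order of sqrt(1000 * 1 * ln(1000)) ≈ 83; with 100 samples, our expected total regret has increased tenfold to ~831, but by then each additional sample adds only ~4 to the expected total regret, ~5% of the first timestep’s regret:

```r
# per-timestep increase in the sqrt(N * T * ln(N)) regret bound, with N = 1000 arms
diff(sapply(1:10000, function(t) { sqrt(1000 * t * log(1000)) }))
```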

Multiple-play Thompson sampling also achieves the lower bound, but its asymptotics are more complex, and the bound does not take into account the long delay & noise in measuring IQ. TS performs well empirically, but it is hard to know what sample size is required. At least we can say that the asymptotics don’t imply tens of thousands of embryos.
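To get some feel for how multiple-play Thompson sampling copes with delayed feedback, here is a toy simulation under loudly hypothetical assumptions: 50 candidate edits, 20% of them causal with exponential effects (mean 0.5, in units of the measurement-noise SD), each embryo receiving the 5 edits with the largest posterior draws, and results arriving 50 steps late as a stand-in for the multi-year lag. It also assumes each edit yields its own noisy measurement of its effect, a large simplification since a real child yields one noisy IQ score for all edits combined, and it uses independent Gaussian priors rather than the causal/nuisance mixture described earlier. It reports the per-embryo regret of the final greedy choice against the expected regret of editing at random.

```python
import random
from collections import deque

def thompson_multiplay(n_arms=50, budget=5, p_causal=0.2, delay=50,
                       steps=3000, noise_sd=1.0, prior_sd=0.5, seed=0):
    """Multiple-play Gaussian Thompson sampling with delayed feedback (toy model)."""
    rng = random.Random(seed)
    # hypothetical truth: a fraction p_causal of arms have exponential effects, the rest 0
    true = [rng.expovariate(2.0) if rng.random() < p_causal else 0.0
            for _ in range(n_arms)]
    # independent Gaussian posterior per arm, kept as precision and
    # precision-weighted mean so that each update is a simple addition
    prec = [prior_sd ** -2] * n_arms
    prec_mean = [0.0] * n_arms
    pending = deque()  # (step at which the result matures, arm, observation)
    for t in range(steps):
        while pending and pending[0][0] <= t:  # absorb results that have matured
            _, arm, y = pending.popleft()
            prec[arm] += noise_sd ** -2
            prec_mean[arm] += y * noise_sd ** -2
        # one posterior draw per arm; edit the `budget` arms with the largest draws
        draws = [rng.gauss(m / p, p ** -0.5) for m, p in zip(prec_mean, prec)]
        chosen = sorted(range(n_arms), key=lambda a: draws[a], reverse=True)[:budget]
        for arm in chosen:
            pending.append((t + delay, arm, true[arm] + rng.gauss(0.0, noise_sd)))

    best = sum(sorted(true, reverse=True)[:budget])
    post_mean = [m / p for m, p in zip(prec_mean, prec)]
    greedy = sorted(range(n_arms), key=lambda a: post_mean[a], reverse=True)[:budget]
    final_regret = best - sum(true[a] for a in greedy)
    random_regret = best - budget * sum(true) / n_arms  # expected regret of random edits
    return final_regret, random_regret

final, baseline = thompson_multiplay()
print(f"regret per embryo: {final:.3f} after learning vs {baseline:.3f} for random edits")
```

Varying `delay`, `noise_sd`, and `p_causal` gives a rough sense of how sensitive the learning speed is to each, though the real problem (one pooled outcome per child, a 2104-day lag) is considerably harder.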

Problem: what’s the probability of non-causal tagging due to LD? Probably low, since the hits work cross-ethnically, don’t they? On the other hand, https://emilkirkegaard.dk/en/?p=5415: “If the GWAS SNPs owe their predictive power to being actual causal variants, then LD is irrelevant and they should predict the relevant outcome in any racial group. If however they owe wholly or partly their predictive power to just being statistically related to causal variants, they should be relatively worse predictors in racial groups that are most distantly related. One can investigate this by comparing the predictive power of GWAS betas derived from one population on another population. Since there are by now 1000s of GWAS, meta-analyses have in fact made such comparisons, mostly for disease traits. Two reviews found substantial cross-validity for the Eurasian population (Europeans and East Asians), and less for Africans (usually African Americans) (23,24). The first review only relied on SNPs with p < α and found weaker results. This is expected because using only these is a threshold effect, as discussed earlier.

The second review (from 2013; 299 included GWAS) found much stronger results, probably because it included more SNPs and because they also adjusted for statistical power. Doing so, they found that: ~100% of SNPs replicate in other European samples when accounting for statistical power, ~80% in East Asian samples but only ~10% in the African American sample (not adjusted for statistical power, which was ~60% on average). There were fairly few GWAS for AAs however, so some caution is needed in interpreting the number. Still, this throws some doubt on the usefulness of GWAS results from Europeans or Asians used on African samples (or reversely).” and https://emilkirkegaard.dk/en/?p=6415

“Identifying Causal Variants at Loci with Multiple Signals of Association”, Hormozdiari et al 2014 http://genetics.org/content/198/2/497.full; “Where is the causal variant? On the advantage of the family design over the case-control design in genetic association studies”, Dandine-2015 https://www.nature.com/ejhg/journal/v23/n10/abs/ejhg2014284a.html. Worst case, ~10% of SNPs are causal?

https://www.addgene.org/crispr/reference/ https://www.genome.gov/about-genomics/fact-sheets/Sequencing-Human-Genome-cost https://crispr.bme.gatech.edu/ http://crispr.mit.edu/

Low, near-zero off-target mutation rates: “High-fidelity CRISPR-Cas9 nucleases with no detectable genome-wide off-target effects” et al 2016, /doc/genetics/editing/2016-kleinstiver.pdf; “Rationally engineered Cas9 nucleases with improved specificity”, et al 2016 /doc/genetics/editing/2016-slaymaker.pdf

Church, April 2016: “Indeed, the latest versions of gene-editing enzymes have zero detectable off-target activities.” https://www.wsj.com/articles/should-heritable-gene-editing-be-used-on-humans-1460340173

Church, June 2016: “Church: In practice, when we introduced our first CRISPR in 2013,19 it was about 5% off target. In other words, CRISPR would edit five treated cells out of 100 in the wrong place in the genome. Now, we can get down to about one error per 6 trillion cells…Fahy: Just how efficient is CRISPR at editing targeted genes? Church: Without any particular tricks, you can get anywhere up to, on the high end, into the range of 50% to 80% or more of targeted genes actually getting edited in the intended way. Fahy: Why not 100%? Church: We don’t really know, but over time, we’re getting closer and closer to 100%, and I suspect that someday we will get to 100%. Fahy: Can you get a higher percentage of successful gene edits by dosing with CRISPR more than once? Church: Yes, but there are limits.” http://www.lifeextension.com/Lpages/2016/CRISPR/index

“A person familiar with the research says ‘many tens’ of human IVF embryos were created for the experiment using the donated sperm of men carrying inherited disease mutations. Embryos at this stages are tiny clumps of cells invisible to the naked eye. ‘It is proof of principle that it can work. They significantly reduced mosaicism. I don’t think it’s the start of clinical trials yet, but it does take it further than anyone has before’, said a scientist familiar with the project. Mitalipov’s group appears to have overcome earlier difficulties by ‘getting in early’ and injecting CRISPR into the eggs at the same time they were fertilized with sperm.” https://www.technologyreview.com/2017/07/26/68093/first-human-embryos-edited-in-us/

Cost of the top variants? We want to edit every variant such that:

for sequencing-based edits: posterior mean * value of IQ point > cost of 1 edit;

for blind edits: probability of the bad variant * posterior mean * value of IQ point > cost of 1 edit.
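The two decision rules can be written as a filter over candidate variants. This is a minimal sketch. The dollar value of an IQ point, the cost per edit, and the example variants (`rsA`, `rsB`, `rsC`, with their posterior means and carrier probabilities) are all hypothetical placeholders, not figures from this analysis.

```python
# Hypothetical numbers for illustration only; not estimates from the text.
VALUE_PER_IQ_POINT = 10_000.0   # dollars per IQ point (assumed)
COST_PER_EDIT = 500.0           # dollars per edit (assumed)

def worth_editing(posterior_mean_iq, p_bad_variant=1.0,
                  value=VALUE_PER_IQ_POINT, cost=COST_PER_EDIT):
    """Edit when expected IQ gain * value of an IQ point exceeds the cost of 1 edit.
    With sequencing we know the embryo carries the bad variant (p = 1);
    for blind edits p_bad_variant is the chance that it does."""
    return p_bad_variant * posterior_mean_iq * value > cost

def select_edits(variants, sequenced=True,
                 value=VALUE_PER_IQ_POINT, cost=COST_PER_EDIT):
    """variants: list of (name, posterior_mean_iq, p_bad_variant) tuples."""
    return [name for name, mean, p_bad in variants
            if worth_editing(mean, 1.0 if sequenced else p_bad, value, cost)]

# Hypothetical candidate variants: (label, posterior mean IQ gain, carrier probability).
variants = [("rsA", 0.10, 0.3), ("rsB", 0.03, 0.9), ("rsC", 0.08, 0.7)]
print(select_edits(variants))                   # sequencing-based edits
print(select_edits(variants, sequenced=False))  # blind edits
```

Blind editing pays the full edit cost for only a fractional expected gain, so fewer variants clear the bar: here `rsA` qualifies only when sequencing confirms the embryo carries it.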

How to simulate posterior probabilities? https://cran.r-project.org/web/packages/BGLR/BGLR.pdf https://cran.r-project.org/web/packages/BGLR/vignettes/BGLR-extdoc.pdf BGLR looks useful, but won't handle the mixture modeling.
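For intuition about the mixture modeling, here is one SNP at a time under a conjugate spike-and-slab prior. This is a swapped-in textbook technique, not BGLR's method, and it ignores LD between SNPs. It assumes that with probability `prior_causal` the true effect is drawn from N(0, `slab_sd`²) and is otherwise exactly zero, and that the GWAS estimate is the true effect plus N(0, `se`²) noise. Both prior parameters are assumptions; `prior_causal = 0.1` echoes the worst-case ~10% causal guess. The output is the probability of causality and the posterior mean, which are the inputs the edit decision rules need.

```python
import math

def normal_pdf(x, sd):
    """Density of N(0, sd^2) at x."""
    return math.exp(-0.5 * (x / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

def spike_slab_posterior(beta_hat, se, prior_causal, slab_sd):
    """Single-SNP spike-and-slab posterior.
    Returns (posterior probability the SNP is causal, posterior mean effect)."""
    # Marginal likelihood of beta_hat if causal (slab) vs. null (spike at 0).
    like_causal = normal_pdf(beta_hat, math.sqrt(se ** 2 + slab_sd ** 2))
    like_null = normal_pdf(beta_hat, se)
    pip = prior_causal * like_causal / (
        prior_causal * like_causal + (1 - prior_causal) * like_null)
    # Given causality, the normal-normal posterior mean shrinks beta_hat toward 0.
    shrink = slab_sd ** 2 / (slab_sd ** 2 + se ** 2)
    return pip, pip * shrink * beta_hat

# Hypothetical SNP: estimate 3 SEs from zero, 10% prior chance of being causal.
print(spike_slab_posterior(beta_hat=0.3, se=0.1, prior_causal=0.1, slab_sd=0.1))
```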

Previous work:

et al 2015 “CRISPR/Cas9-mediated gene editing in human tripronuclear zygotes” https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4417674/ https://www.nature.com/news/chinese-scientists-genetically-modify-human-embryos-1.17378

et al 2016, “Introducing precise genetic modifications into human 3PN embryos by CRISPR/Cas-mediated genome editing” /doc/genetics/editing/2016-kang.pdf https://www.nature.com/news/second-chinese-team-reports-gene-editing-in-human-embryos-1.19718

et al 2016, “Programmable editing of a target base in genomic DNA without double-stranded DNA cleavage” https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4873371/ https://www.nature.com/articles/nature.2016.20302

“CRISPR/Cas9-mediated gene editing in human zygotes using Cas9 protein” et al 2017 /doc/genetics/editing/2017-tang.pdf: no observed off-target mutations; efficiency of 20%, 50%, and 100%

“Correction of a pathogenic gene mutation in human embryos”, et al 2017 https://www.nature.com/articles/nature23305: no observed off-targets, 27.9% efficiency

Legal status:

USA: legal (no legislation, but some interesting regulatory wrinkles: see ch7 of Human Genome Editing: Science, Ethics, and Governance 2017).

China: legal (only ‘unenforceable guidelines’) as of 2014, according to “International regulatory landscape and integration of corrective genome editing into in vitro fertilization”, 2014 http://rbej.biomedcentral.com/articles/10.1186/1477-7827-12-108, and as of 2015 too, according to https://www.nature.com/news/where-in-the-world-could-the-first-crispr-baby-be-born-1.18542. The loophole was closed post-He Jiankui & editing made definitely illegal: “China officially bans CRISPR babies, human clones and animal-human hybrids”.

UK: illegal, but the UK has given permission to modify human embryos for research http://www.popsci.com/scientists-get-government-approval-to-edit-human-embryos? https://www.nytimes.com/2016/02/02/health/crispr-gene-editing-human-embryos-kathy-niakan-britain.html

Japan: legal for research, but maybe not for application? https://mainichi.jp/english/articles/20160423/p2g/00m/0dm/002000c

Sweden: legal for editing, which has been done as of September 2016 by Fredrik Lanner https://www.npr.org/sections/health-shots/2016/09/22/494591738/breaking-taboo-swedish-scientist-seeks-to-edit-dna-of-healthy-human-embryos

Also, what about mosaicism? When the CRISPR RNA is injected into even a single-celled zygote, the zygote may already have replicated some of its DNA in preparation for dividing, so the edit covers only a fraction of the cells of the future full-grown organism.

“Additionally, editing may happen after first embryonic division, due to persistence of Cas9:gRNA complexes, also causing mosaicism. We (unpublished results) and others (Yang et al 2013a; Ma et al 2014; Yen et al 2014) have observed mosaic animals carrying three or more alleles. A recent study reported surprisingly high percentage of mosaic mice (up to 80%) generated by CRISPR targeting of the tyrosinase gene (Tyr) (Yen et al 2014). We have observed a varying frequency of mosaicism, 11-35%, depending on the gene/locus (our unpublished data)… The pronuclear microinjection of gRNA and Cas9, in a manner essentially identical to what is used for generating transgenic mice, can be easily adapted by most transgenic facilities. Facilities equipped with a Piezo-electric micromanipulator can opt for cytoplasmic injections as reported (Wang et al 2013; Yang et al 2013a). Horii et al 2014 performed an extensive comparison study suggesting that cytoplasmic injection of a gRNA and Cas9 mRNA mixture as the best delivery method. Although the overall editing efficiency in born pups yielded by pronuclear vs. cytoplasmic RNA injection seems to be comparable (Table 1), the latter method generated two- to fourfold more live born pups. Injection of plasmid DNA carrying Cas9 and gRNA to the pronucleus was the least efficient method in terms of survival and targeting efficiency (Mashiko et al 2013; Horii et al 2014). Injection into pronuclei seems to be more damaging to embryos than injection of the same volume or concentration of editing reagents to the cytoplasm. It has been shown that cytoplasmic injection of Cas9 mRNA at concentrations up to 200 ng/μl is not toxic to embryos (Wang et al 2013) and efficient editing was achieved at concentrations as low as 1.5 ng/μl (Ran et al 2013a). In our hands, injecting Cas9 mRNA at 50-150 ng/μl and gRNA at 50-75 ng/μl first into the pronucleus and also into the cytoplasm as the needle is being withdrawn, yields good survival of embryos and efficient editing by NHEJ in live born pups (our unpublished observations).” http://genetics.org/content/199/1/1.full

dnorm((150-100)/15) * 320000000 → [1] 493529.2788; dnorm((170-100)/15) * 320000000 → [1] 2382.734679

If you're curious how I calculated that: (10*1000 + 10 * 98 * 500) > 500000 → [1] FALSE; sum(sort((rexp(10000)/1)/18, decreasing=TRUE)[1:98] * 0.5) → [1] 15.07656556
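The second R expression is a single random draw, so it helps to check its expected value. The reading used here is an assumption: 10,000 candidate variants with exponentially distributed effects of mean 1/18 IQ point, of which the 98 largest are edited, each counted at the snippet's 0.5 weight. The i-th largest of n Exp(1) draws has mean H_n - H_{i-1} (H = harmonic numbers), which gives an exact expectation to set beside a Monte Carlo estimate. Both helper names are hypothetical.

```python
import heapq
import random

def expected_top_k_sum(n, k):
    """Exact E[sum of the k largest of n iid Exp(1) draws]:
    the i-th largest has expectation H_n - H_{i-1}."""
    harmonic = [0.0]
    for j in range(1, n + 1):
        harmonic.append(harmonic[-1] + 1.0 / j)
    return sum(harmonic[n] - harmonic[i - 1] for i in range(1, k + 1))

def simulate_top_k_sum(n, k, reps=50, seed=0):
    """Monte Carlo average of the same quantity."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        draws = [rng.expovariate(1.0) for _ in range(n)]
        total += sum(heapq.nlargest(k, draws))
    return total / reps

# The budget check from the text: exactly at the 500000 limit, so "> 500000" is False.
within_budget = not ((10 * 1000 + 10 * 98 * 500) > 500_000)

# Mirrors sum(sort(rexp(10000)/18, decreasing=TRUE)[1:98] * 0.5).
scale = 0.5 / 18
analytic = expected_top_k_sum(10_000, 98) * scale   # ~15.3
simulated = simulate_top_k_sum(10_000, 98) * scale
print(within_budget, analytic, simulated)
```

The expectation comes out near 15.3, so the single draw of 15.08 in the text is a typical value.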

Hm. There are ~50k IVF babies each year in the USA. My quick CRISPR sketch suggested that for a few million you could get up to IQ 150-170. dnorm((150-100)/15) * 320000000 → [1] 493529.2788; dnorm((170-100)/15) * 320000000 → [1] 2382.734679. So depending on how many IVFers used it, you could boost the total genius population by anywhere from 1/10th to 9x.

But if only 10% of the SNPs are causal, so that only 100 of 1000 edits are effective, and the net gain is 15 IQ points (1 SD), then the increases are: IVF <- (dnorm((115-100)/15) * 50000); genpop <- (dnorm((150-100)/15) * 320000000); (IVF+genpop)/genpop → [1] 1.024514323 and IVF <- (dnorm((115-100)/15) * 50000); genpop <- (dnorm((170-100)/15) * 320000000); (IVF+genpop)/genpop → [1] 6.077584313, an increase of 1.02x (for IQ 150) and 6x (for IQ 170) respectively.
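The population arithmetic above can be reproduced outside R. This minimal sketch mirrors the R snippets term by term: a hand-written `dnorm` (the standard normal density), a US population of 320 million, and 50k IVF births per year. It copies the snippets' approach exactly and does not change the model. Note that both terms use the normal density at a single point rather than a tail count.

```python
import math

def dnorm(x):
    """Standard normal density, as in R's dnorm(x)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2 * math.pi)

US_POP = 320_000_000
IVF_BIRTHS = 50_000

def genpop_at(iq):
    """dnorm((iq - 100) / 15) * 320000000, as in the text."""
    return dnorm((iq - 100) / 15) * US_POP

# IVF term exactly as in the R snippet: dnorm((115-100)/15) * 50000.
ivf = dnorm((115 - 100) / 15) * IVF_BIRTHS

for threshold in (150, 170):
    genpop = genpop_at(threshold)
    print(threshold, genpop, (ivf + genpop) / genpop)
```

Swapping `dnorm` for an upper-tail probability, e.g. `0.5 * math.erfc(z / math.sqrt(2))`, would count everyone above a threshold instead of the density at it.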

“To confirm these GUIDE-seq findings, we used targeted amplicon sequencing to more directly measure the frequencies of indel mutations induced by wild-type SpCas9 and SpCas9-HF1. For these experiments, we transfected human cells only with sgRNA- and Cas9-encoding plasmids (without the GUIDE-seq tag). We used next-generation sequencing to examine the on-target sites and 36 of the 40 off-target sites that had been identified for six sgRNAs with wild-type SpCas9 in our GUIDE-seq experiments (four of the 40 sites could not be specifically amplified from genomic DNA). These deep sequencing experiments showed that: (1) wild-type SpCas9 and SpCas9-HF1 induced comparable frequencies of indels at each of the six sgRNA on-target sites, indicating that the nucleases and sgRNAs were functional in all experimental replicates (Fig. 3a, b); (2) as expected, wild-type SpCas9 showed statistically significant evidence of indel mutations at 35 of the 36 off-target sites (Fig. 3b) at frequencies that correlated well with GUIDE-seq read counts for these same sites (Fig. 3c); and (3) the frequencies of indels induced by SpCas9-HF1 at 34 of the 36 off-target sites were statistically indistinguishable from the background level of indels observed in samples from control transfections (Fig. 3b). For the two off-target sites that appeared to have statistically significant mutation frequencies with SpCas9-HF1 relative to the negative control, the mean frequencies of indels were 0.049% and 0.037%, levels at which it is difficult to determine whether these are due to sequencing or PCR error or are bona fide nuclease-induced indels. Based on these results, we conclude that SpCas9-HF1 can completely or nearly completely reduce off-target mutations that occur across a range of different frequencies with wild-type SpCas9 to levels generally undetectable by GUIDE-seq and targeted deep sequencing.”

So there were no detected off-target mutations, down to the limit of lab error-rate detectability. Amazing. So you can do a CRISPR on a cell with a >75% chance of making the edit to a desired gene correctly, and a <0.05% chance of a mistaken (potentially harmless) edit/mutation on a similar gene. With an error rate that low, you could do hundreds of CRISPR edits to a set of embryos with a low net risk of error… The median number of eggs extracted from a woman during IVF in America is ~9. Assume the worst case of a 0.05% risk of off-target mutation per edit, and that one scraps any embryo found to have any mutation at all, even if it looks harmless. Then the probability of making 1000 edits without an off-target mutation could be (1-(0.05/100)) ^ 1000 ≈ 61%, so you're left with ~5.5 good embryos, which is a decent yield. Now consider editing the top 1000 betas from the 2013 polygenic score, and figure that the gain is weakened by maybe 25% due to particular cells not getting particular edits: that is… a very large number.
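The embryo-yield arithmetic can be extended to the full distribution of clean embryos. This is a minimal sketch under the same worst-case assumptions: every embryo receives all 1000 edits, each edit independently carries a 0.05% off-target risk, and any embryo with an off-target hit is discarded. Independence across edits and embryos is an added assumption, and the helper names are hypothetical.

```python
import math

def off_target_free_probability(n_edits, p_off_target):
    """Probability that n independent edits all avoid an off-target mutation."""
    return (1 - p_off_target) ** n_edits

def embryo_yield_distribution(n_embryos, p_clean):
    """Binomial distribution of how many embryos come through clean."""
    return [math.comb(n_embryos, k) * p_clean ** k * (1 - p_clean) ** (n_embryos - k)
            for k in range(n_embryos + 1)]

p_clean = off_target_free_probability(1000, 0.05 / 100)  # ~0.61
expected_good = 9 * p_clean                               # ~5.5 of ~9 embryos
dist = embryo_yield_distribution(9, p_clean)
p_at_least_one = 1 - dist[0]
print(p_clean, expected_good, p_at_least_one)
```

Even in this worst case, the chance of ending up with no usable embryo at all is tiny, about 0.39⁹.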